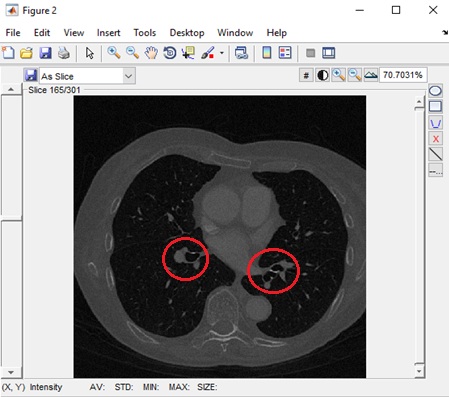

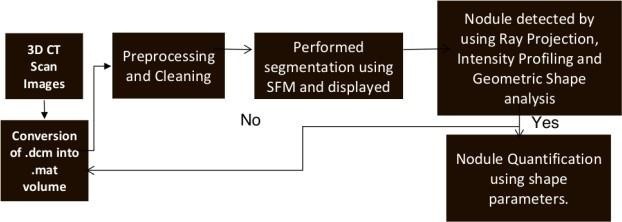

Accurate Lungs Nodule identification is critical for early Lungs Nodule detection. Thus this study

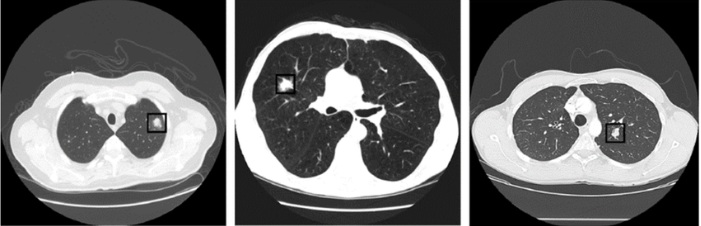

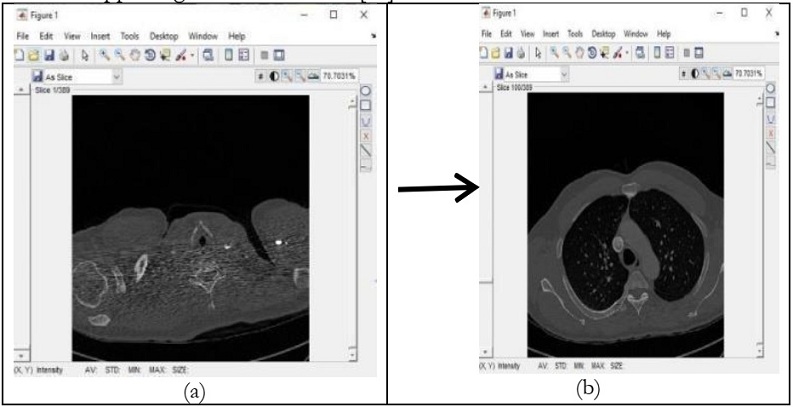

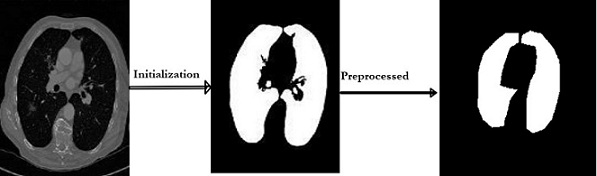

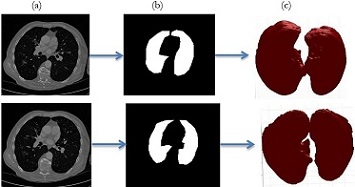

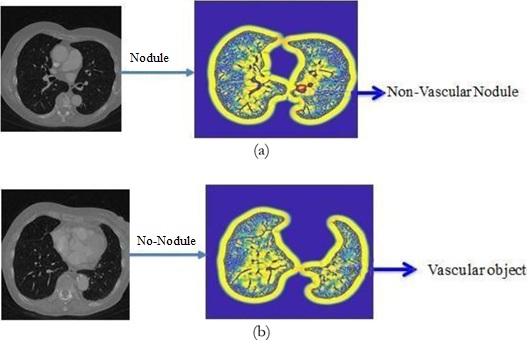

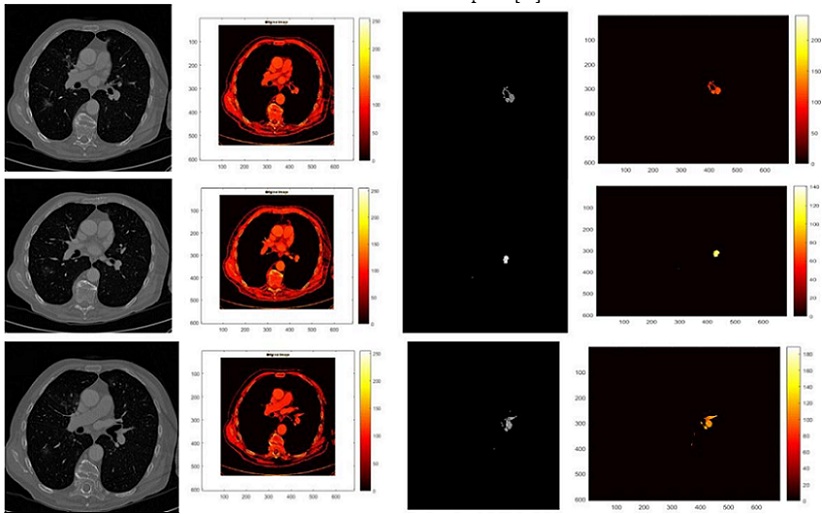

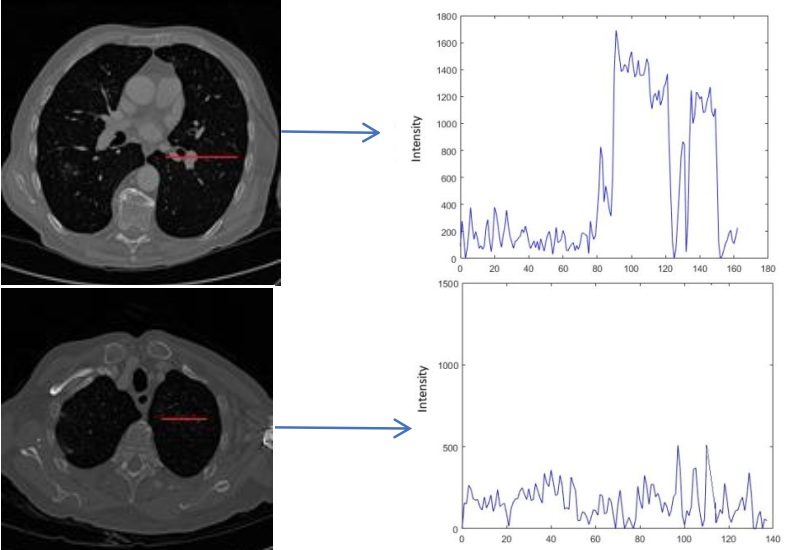

proposed a model to address nodules present in 3D CT Scan images. The proposed model preprocessed

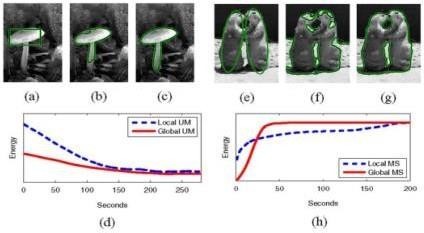

the image by removing unwanted data and segmented the data by using SFM (sparse field method) then

give its geometric shape and in the end detect nodules. Quantification shows the number of pixels

nodule covered in the image. In the past few years, the use of computer systems and image

processing to analyze medical CT images has come a long way, and many published works could be

used in medical practice. In this situation, doctors need to learn more about how computer systems

work in medical image processing. This will allow them to use these systems to find lung cancer

early. But for these systems to be used and accepted, their flaws must be fixed. Because of this,

developers and analysts need to work closely with the medical community. This way, the specific

needs of CAD systems can be used to make them better. Doctors, patients, engineers, and scientists

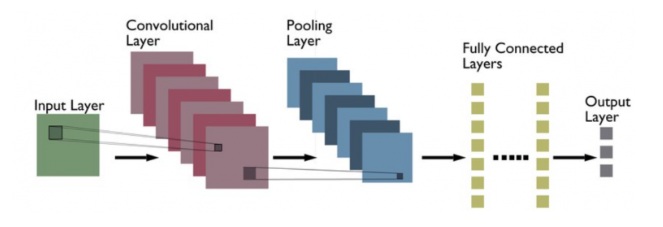

will all work together to make this happen. In this work, the use of CAD systems in processing CT

images of the lungs has been looked into, and the stages of processing that are needed to make

diagnoses and find lung nodules have been shown. Researchers in this field should find this

information useful, and it should also encourage doctors to use these systems.
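The quantification step mentioned above, which reports how many pixels a nodule covers, might be sketched as follows. This is a minimal illustration and not the study's implementation: the function name `quantify_nodule`, the `spacing_mm` parameter, and the synthetic mask are assumptions, and the sketch presumes that segmentation has already produced a binary nodule mask.

```python
import numpy as np


def quantify_nodule(mask, spacing_mm=(1.0, 1.0, 1.0)):
    """Count the voxels in a binary nodule mask and estimate its volume.

    mask       -- array-like, nonzero where the nodule was segmented (assumed input)
    spacing_mm -- hypothetical voxel spacing (z, y, x) in millimetres
    """
    mask = np.asarray(mask, dtype=bool)
    voxel_count = int(mask.sum())              # number of pixels/voxels covered
    voxel_volume = float(np.prod(spacing_mm))  # volume of one voxel in mm^3
    return voxel_count, voxel_count * voxel_volume


# Synthetic 3D mask standing in for a segmentation result.
volume = np.zeros((4, 8, 8), dtype=bool)
volume[1:3, 2:5, 2:5] = True  # a 2 x 3 x 3 block of "nodule" voxels

count, volume_mm3 = quantify_nodule(volume, spacing_mm=(2.0, 0.5, 0.5))
print(count, volume_mm3)
```

Returning the physical volume alongside the raw count is a design choice made here for illustration; the text itself only states that the pixel count is reported.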
