Our experiments evaluated RLE, DPCM, and BWT using several metrics. PSNR and SSIM measure how well image quality is preserved, and higher scores indicate better performance. MSE measures the average squared pixel error, so lower values are better. Bit rate reflects the level of compression achieved, while computational complexity reflects how efficiently each algorithm uses processing time and resources. Together, these measurements provide a clear and direct basis for comparing the algorithms.

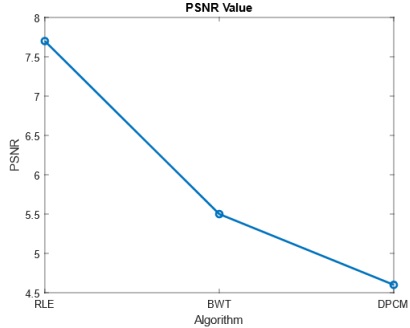

The PSNR chart (Figure 4) compares the lossless image compression algorithms by peak signal-to-noise ratio. The y-axis shows PSNR, which quantifies the quality of the compressed image, and the x-axis lists the algorithms under examination. RLE achieves the highest PSNR, 7.7, indicating the best image-quality preservation of the three. BWT follows at 5.5, slightly below RLE. DPCM records the lowest PSNR, 4.6, suggesting that it introduces the most noticeable artifacts. In summary, RLE is the strongest choice for applications that prioritize image fidelity; BWT, slightly lower in PSNR, is suitable when compression and quality must be balanced; and DPCM, with the lowest PSNR, should be chosen with care, especially in applications where preserving image quality is paramount.
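PSNR is derived from the MSE between the original and reconstructed images as PSNR = 10·log10(MAX²/MSE), where MAX is the largest possible pixel value. The following is a minimal sketch, assuming 8-bit images (MAX = 255) and a precomputed MSE; the function name `psnr_from_mse` is hypothetical and not taken from the paper's implementation.

```python
import math


def psnr_from_mse(mse_value: float, max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in decibels for a given mean squared error.

    max_value is the largest possible pixel value (255 for 8-bit images).
    A bit-exact reconstruction (MSE == 0) has no noise, so PSNR is infinite.
    """
    if mse_value < 0:
        raise ValueError("MSE cannot be negative")
    if mse_value == 0:
        return math.inf
    return 10.0 * math.log10(max_value ** 2 / mse_value)


# An 8-bit image whose MSE is 1% of 255**2 scores 20 dB.
example_db = psnr_from_mse(650.25)
```

Because PSNR is a logarithmic function of MSE, the ranking of algorithms by PSNR is the reverse of their ranking by MSE when both are computed on the same image.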

The SSIM chart (Figure 5) compares the algorithms by structural similarity. The y-axis shows SSIM values, which measure the structural similarity between the original and compressed images, and the x-axis lists the algorithms being assessed. RLE obtains the lowest SSIM, 0.005, which signifies substantial structural dissimilarity between the compressed and original images and therefore a significant loss of image fidelity. BWT scores higher, at 0.3, indicating a greater degree of structural similarity, though still far from an exact match to the original. DPCM scores 0.29, close to BWT, suggesting comparable structural likeness with some remaining deviation from the original. In summary, BWT attains the highest SSIM score and preserves structural detail better than RLE and DPCM. Even with BWT and DPCM, however, a significant structural difference from the original image remains, which underscores the challenge of retaining fine structural detail in lossless compression techniques.
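SSIM combines luminance, contrast, and structure comparisons computed from the means, variances, and covariance of two images. The sketch below is a simplified, single-window (global) variant written for illustration, assuming grayscale images given as lists of rows and the commonly used constants K1 = 0.01 and K2 = 0.03. The standard index evaluates the same formula over local sliding windows and averages the results, so this sketch will not reproduce the values in Figure 5; a library implementation such as scikit-image's `structural_similarity` is the usual choice for reported results. The function name `global_ssim` is hypothetical.

```python
def global_ssim(original, reconstructed, max_value=255.0):
    """Single-window (global) SSIM between two equal-sized grayscale images.

    Images are lists of rows of pixel values. The standard SSIM index applies
    this formula to local windows and averages them; using one window over
    the whole image is a simplification for illustration.
    """
    x = [float(p) for row in original for p in row]
    y = [float(p) for row in reconstructed for p in row]
    if len(x) != len(y) or not x:
        raise ValueError("images must be non-empty and the same size")
    n = len(x)
    c1 = (0.01 * max_value) ** 2  # stabilising constant, K1 = 0.01
    c2 = (0.03 * max_value) ** 2  # stabilising constant, K2 = 0.03
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    var_x = sum((a - mean_x) * (a - mean_x) for a in x) / n
    var_y = sum((b - mean_y) * (b - mean_y) for b in y) / n
    cov_xy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y)) / n
    numerator = (2 * mean_x * mean_y + c1) * (2 * cov_xy + c2)
    denominator = (mean_x * mean_x + mean_y * mean_y + c1) * (var_x + var_y + c2)
    return numerator / denominator
```

Identical images score exactly 1, and the score falls as the structure of the two images diverges; strongly anti-correlated images can even produce negative values.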

The MSE chart (Figure 6) compares the lossless image compression methods by mean squared error. The y-axis shows MSE, the average squared difference between the original and compressed images, and the x-axis identifies the algorithms under scrutiny. RLE has the lowest MSE, 1.5 × 10⁴, indicating the least distortion between the compressed and original images of the three algorithms and the best preservation of image quality. BWT follows with an MSE of 1.85 × 10⁴, suggesting slightly higher distortion. DPCM records the highest MSE, 2.55 × 10⁴, which signifies greater distortion and therefore lower image quality after compression than RLE and BWT. In summary, RLE performs best at minimizing image distortion, BWT follows closely with slightly more distortion, and DPCM introduces the highest degree of distortion, indicating comparatively lower image quality after compression.
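MSE is the average of the squared pixel differences, MSE = (1/N) Σ (xᵢ − yᵢ)², taken over all N pixels of the original image x and the reconstructed image y. A minimal sketch, assuming grayscale images stored as lists of rows; the function name `mean_squared_error` is hypothetical.

```python
def mean_squared_error(original, reconstructed):
    """Average of squared pixel differences between two equal-sized images.

    Images are lists of rows of pixel values (e.g. 8-bit grayscale).
    """
    if len(original) != len(reconstructed) or any(
        len(row_a) != len(row_b) for row_a, row_b in zip(original, reconstructed)
    ):
        raise ValueError("images must have the same dimensions")
    total = 0.0
    count = 0
    for row_a, row_b in zip(original, reconstructed):
        for a, b in zip(row_a, row_b):
            # Convert to float so the difference can be negative before squaring.
            diff = float(a) - float(b)
            total += diff * diff
            count += 1
    if count == 0:
        raise ValueError("images must not be empty")
    return total / count
```

A reconstruction that matches the original pixel for pixel yields an MSE of exactly 0; every mismatched pixel adds its squared error to the total.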

The bit rate chart (Figure 7) compares the compression levels achieved by the lossless image compression algorithms. The y-axis shows bit rate in bytes, and the x-axis lists the algorithms under consideration. RLE has a bit rate of 1.12 bytes, meaning it requires slightly more storage, on average, to represent the compressed images. It remains effective, but this higher bit rate indicates that it does not compress as well as the other two algorithms. BWT achieves a bit rate of 1 byte, reflecting better compression efficiency than RLE, and DPCM likewise achieves 1 byte. Both BWT and DPCM therefore excel in terms of bit rate, requiring less storage space for the compressed images than RLE.
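Figure 7 reports bit rate in bytes, and this section does not state how the value is normalised. In image compression, bit rate is commonly expressed in bits per pixel (bpp), often alongside the compression ratio. The sketch below assumes that convention; the helper names and the image sizes in the example are hypothetical.

```python
def bits_per_pixel(compressed_size_bytes: int, width: int, height: int) -> float:
    """Average number of bits spent per pixel in the compressed representation."""
    if width <= 0 or height <= 0:
        raise ValueError("image dimensions must be positive")
    return 8 * compressed_size_bytes / (width * height)


def compression_ratio(original_size_bytes: int, compressed_size_bytes: int) -> float:
    """How many times smaller the compressed data is than the original."""
    if compressed_size_bytes <= 0:
        raise ValueError("compressed size must be positive")
    return original_size_bytes / compressed_size_bytes


# Hypothetical example: a 512 x 512 8-bit grayscale image (262,144 bytes)
# compressed to 131,072 bytes.
bpp = bits_per_pixel(131_072, 512, 512)      # 4.0 bits per pixel
ratio = compression_ratio(262_144, 131_072)  # 2.0
```

An uncompressed 8-bit grayscale image sits at 8 bpp, so any lossless method that stores such an image below 8 bpp is achieving real compression.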

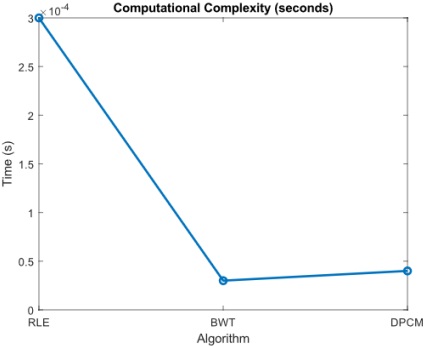

The computational complexity chart (Figure 8) evaluates the efficiency of the lossless image compression algorithms. The y-axis shows computational complexity in seconds, and the x-axis lists the algorithms under examination. RLE stands out as a top performer, with a computational complexity of 3 × 10⁻⁴ seconds, highlighting its ability to compress and decompress images quickly. This makes it an excellent choice for scenarios with limited computational resources, even though it does not achieve the highest compression ratios. BWT follows with a slightly higher computational complexity of 0.3 × 10⁻⁴ seconds; it demands a little more computational effort than RLE, a trade-off justified by its better compression ratios. DPCM has a computational complexity of 0.4 × 10⁻⁴ seconds, slightly higher than both RLE and BWT. Like BWT, DPCM strikes a balance between computational cost and compression efficiency, making good use of resources while maintaining compression performance. In summary, RLE is the most computationally efficient algorithm and is well suited to scenarios with strict computational constraints, even though it does not achieve the highest compression ratios, while BWT and DPCM are slightly more computationally demanding but provide better compression efficiency.
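Figure 8 reports computational complexity as measured execution time in seconds rather than as asymptotic complexity, and this section does not say how the timings were collected. A minimal sketch, assuming the median wall-clock time over repeated runs measured with Python's `time.perf_counter`; `median_runtime` is a hypothetical helper, and the standard `timeit` module provides similar functionality.

```python
import statistics
import time


def median_runtime(func, *args, repeats=5):
    """Median wall-clock time in seconds of func(*args) over several runs.

    Taking the median of repeated runs reduces the influence of one-off delays
    such as cache warm-up or other processes competing for the CPU.
    """
    if repeats < 1:
        raise ValueError("repeats must be at least 1")
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        func(*args)
        timings.append(time.perf_counter() - start)
    return statistics.median(timings)


# Example: time a trivial workload standing in for a compression routine.
elapsed = median_runtime(sorted, list(range(10_000, 0, -1)))
print(f"{elapsed:.2e} s")
```

Timings at the 10⁻⁴-second scale are sensitive to hardware, image size, and system load, so comparisons are most meaningful when every algorithm is timed on the same images and machine.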

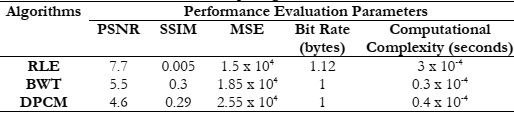

Table 1 consolidates the values for RLE, BWT, and DPCM. RLE records the highest PSNR of the three algorithms, indicating the best image-quality preservation among them. However, its SSIM score is low, suggesting poor structural similarity, and its MSE, although the lowest of the three, is still high (1.5 × 10⁴), implying substantial pixel errors. Its bit rate of 1.12 bytes is slightly higher than that of BWT and DPCM, so it compresses somewhat less efficiently. On the positive side, its computational complexity is notably low, making it suitable for real-time applications.
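RLE replaces each run of identical consecutive values with a single (value, count) pair. A minimal sketch, assuming the image has already been flattened into a sequence of pixel values (e.g. in row-major order); the paper's byte-level storage format, such as any maximum run length, is not described in this section, and the function names are hypothetical.

```python
def rle_encode(pixels):
    """Encode a sequence of pixel values as a list of (value, run_length) pairs."""
    runs = []
    for value in pixels:
        if runs and runs[-1][0] == value:
            runs[-1][1] += 1          # extend the current run
        else:
            runs.append([value, 1])   # start a new run
    return [(value, count) for value, count in runs]


def rle_decode(runs):
    """Expand (value, run_length) pairs back into the original sequence."""
    pixels = []
    for value, count in runs:
        pixels.extend([value] * count)
    return pixels


row = [5, 5, 5, 0, 0, 7]
encoded = rle_encode(row)      # [(5, 3), (0, 2), (7, 1)]
decoded = rle_decode(encoded)  # [5, 5, 5, 0, 0, 7]
```

Decoding reproduces the input exactly. The scheme only saves space when runs are long: on a row with no repeated neighbours every run has length 1, so the encoded form holds twice as many numbers as the input, which is why RLE favours images with large uniform regions.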

BWT offers a lower PSNR than RLE, indicating some degradation in image quality, but a higher SSIM score, suggesting better structural similarity. Its MSE remains high, signifying substantial pixel errors. BWT excels in terms of bit rate, achieving highly efficient compression, and its computational complexity is low, making it suitable for applications with modest time constraints.

Among the three algorithms, DPCM records the lowest PSNR, signaling a notable decline in image quality. Its low SSIM score indicates suboptimal structural similarity, and its MSE, the highest of the three, underscores substantial pixel errors. Like BWT, DPCM attains an efficient bit rate. Its computational complexity is reasonable, albeit slightly higher than that of BWT.
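DPCM predicts each pixel from previously coded pixels and stores only the prediction error (the residual). The predictor used in this study is not specified in this section; the sketch below assumes the simplest choice, the left-neighbour (previous-pixel) predictor applied along one row, with an assumed initial prediction of 0 and no quantization of the residuals. The function names are hypothetical.

```python
def dpcm_encode(row):
    """Replace each pixel with its difference from the previous pixel."""
    residuals = []
    prediction = 0  # assumed initial prediction for the first pixel
    for value in row:
        residuals.append(value - prediction)
        prediction = value  # the next pixel is predicted by this one
    return residuals


def dpcm_decode(residuals):
    """Rebuild the row by adding each residual to the running prediction."""
    row = []
    prediction = 0  # must match the encoder's initial prediction
    for residual in residuals:
        prediction += residual
        row.append(prediction)
    return row


row = [100, 102, 101, 101]
residuals = dpcm_encode(row)          # [100, 2, -1, 0]
restored = dpcm_decode(residuals)     # [100, 102, 101, 101]
```

In smooth regions the residuals cluster near zero, which a subsequent entropy coder such as Huffman coding can represent with fewer bits than the raw pixels. With unquantized residuals, as here, decoding is exact; quantizing the residuals, as lossy DPCM does, trades that exactness for a lower bit rate.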
