Body Movement:

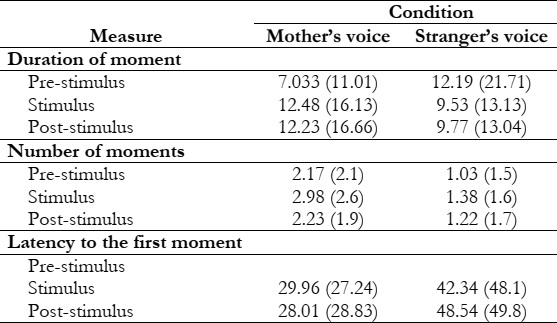

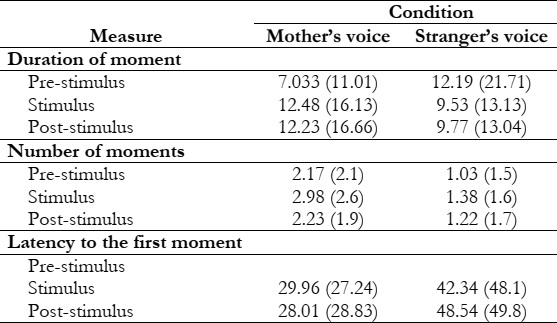

To re-evaluate the analysis of body movements used in earlier research with short-term stimuli, we compared the occurrence of body movements in the 5 s before sound onset with that in the 5 s after sound onset. There was no difference between the two periods on these short-term measures. The prolonged stimulation used in this study allows several additional, longer-term measures. Table 1 displays the means and standard deviations of the body movement measures. Repeated measures analysis of variance (ANOVA) was used to examine the total time spent moving, the number of movements, and the latency to the first movement in each of the three intervals. No significant effects were found.

The "Body Movement" portion of this study re-evaluates and broadens the examination of fetal body movements as a response to auditory stimuli. It compares the body movements that occur in the 5 s before and the 5 s after sound onset. The principal short-term finding is that there is no significant difference between the two periods.

What distinguishes this research is its use of sustained stimulation: longer stimulation periods make additional analyses possible. Table 1, in the results, reports the mean and standard deviation of each body movement measure.

In addition, the study uses repeated measures analysis of variance (ANOVA) to examine these measures. The analysis covers the total time spent moving in each interval, the number of movements in each interval, and the latency to the first movement. The most important point of this section is that no significant change in fetal body movements was seen despite the prolonged stimulation and thorough analysis. This finding indicates that the auditory stimuli studied had no measurable effect on fetal body movement.
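The repeated measures ANOVA described above can be illustrated with a minimal sketch. It is a simplified one-way version (interval as the only factor) and not necessarily the exact model used in the study. The function name `repeated_measures_anova` and the movement times are hypothetical and serve only to show how the F ratio is formed from the interval and error sums of squares.

```python
def repeated_measures_anova(scores):
    """One-way repeated-measures ANOVA.

    scores: one list per subject, holding one value per interval
            (e.g. seconds spent moving before, during, and after the voice).
    Returns (F, df_interval, df_error).
    """
    n = len(scores)        # number of subjects
    k = len(scores[0])     # number of intervals

    grand_mean = sum(sum(row) for row in scores) / (n * k)
    interval_means = [sum(row[j] for row in scores) / n for j in range(k)]
    subject_means = [sum(row) / k for row in scores]

    # Partition the total variability into interval, subject, and error parts.
    ss_total = sum((x - grand_mean) ** 2 for row in scores for x in row)
    ss_interval = n * sum((m - grand_mean) ** 2 for m in interval_means)
    ss_subject = k * sum((m - grand_mean) ** 2 for m in subject_means)
    ss_error = ss_total - ss_interval - ss_subject

    df_interval = k - 1
    df_error = (k - 1) * (n - 1)
    f_value = (ss_interval / df_interval) / (ss_error / df_error)
    return f_value, df_interval, df_error


# Hypothetical data: seconds spent moving for four fetuses in three intervals.
example_scores = [
    [10, 12, 11],
    [8, 9, 10],
    [15, 14, 16],
    [7, 9, 8],
]
f_value, df_interval, df_error = repeated_measures_anova(example_scores)
print(f"F({df_interval}, {df_error}) = {f_value:.3f}")
```

The resulting F value would then be compared with the critical value for the stated degrees of freedom. A small F, as reported for the body movement measures, indicates that the differences between intervals are no larger than would be expected from within-subject noise.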

FHR:

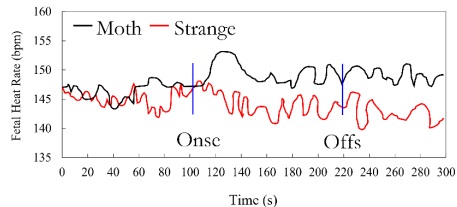

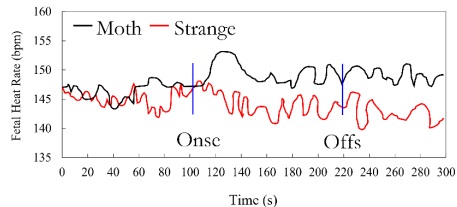

Figure 1 depicts the FHR readings collected over the 6-minute recording. To replicate earlier work that used short-term stimuli, we analyzed heart rate acceleration in the first 10 seconds after stimulus onset, but we found no significant effects. To examine the longer-term effects of speech on FHR, we analyzed three distinct 90-second intervals: (a) the 90 seconds before voice onset, (b) the first 90 seconds of the voice, and (c) the 90 seconds after voice offset. Because the stimulus onset and offset times are fixed, we focus on these intervals rather than on the full 6 minutes.
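The three analysis intervals can be sketched in code. This is a minimal illustration under stated assumptions: one FHR sample per second, voice onset at 120 s and voice offset at 240 s (taken from the 2 min / 2 min / 2 min layout in the Figure 1 caption). The function name `split_fhr_windows` and the synthetic trace are hypothetical.

```python
def split_fhr_windows(fhr, onset_s=120, offset_s=240, width_s=90, rate_hz=1):
    """Cut one FHR trace into the three 90-second analysis windows.

    fhr: sequence of heart-rate samples (bpm), assumed evenly sampled.
    onset_s / offset_s: assumed voice onset and offset times in seconds.
    """
    def take(start_s, end_s):
        # Convert seconds to sample indices and slice the trace.
        return fhr[int(start_s * rate_hz):int(end_s * rate_hz)]

    return {
        "pre_onset": take(onset_s - width_s, onset_s),      # 90 s before the voice
        "post_onset": take(onset_s, onset_s + width_s),     # first 90 s of the voice
        "post_offset": take(offset_s, offset_s + width_s),  # 90 s after the voice ends
    }


def mean(values):
    return sum(values) / len(values)


# Synthetic 6-minute trace: 140 bpm baseline, 145 bpm from voice onset onward.
trace = [140.0] * 120 + [145.0] * 240
windows = split_fhr_windows(trace)
for name, samples in windows.items():
    print(name, len(samples), mean(samples))
```

Each window holds 90 samples under these assumptions, which matches the 90 time levels (1-90 s) used as the within-subject factor in the analysis.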

As a result, the data are presented in two parts, one aligned to voice onset and one aligned to voice offset. Using a 2 (condition: mother, stranger; between subjects) × 90 (time: 1-90 s; within subjects) ANOVA on the FHR data collected in the 90 seconds before voice onset, we found no significant effect of time, $F(89, 5162) = 0.319$, $p > .05$, and no significant Condition × Time interaction, $F(89, 5162) = 0.882$, $p > .05$. However, analysis of the first 90 seconds after voice onset revealed a significant Condition × Time interaction between the mother and stranger conditions, $F(89, 5162) = .31$, $p < .05$. Analysis of the data after voice offset, which revealed only a significant main effect of voice condition, confirms that the difference seen in Figure 1 persisted until the end of the recording. The average FHR of fetuses hearing the mother's voice remained consistently above baseline, whereas the FHR of fetuses hearing the stranger's voice remained consistently below it, $F(1, 58) = 4.635$, $p < .05$. After voice onset, the average difference in FHR between the mother's voice group and the stranger's voice group widened from 2.25 bpm to 8.02 bpm, whereas before onset it had ranged only from 3.06 bpm to 3.16 bpm.
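The degrees of freedom reported above can be checked with a short worked calculation. It assumes $N = 60$ fetuses in total (30 per condition, consistent with the group sizes and the $F(1, 58)$ statistic reported in this section), $G = 2$ conditions, and $T = 90$ one-second time points. The symbols $N$, $G$, and $T$ are introduced here for illustration only.

```latex
\begin{aligned}
df_{\text{condition}}            &= G - 1 = 2 - 1 = 1 \\
df_{\text{error (between)}}      &= N - G = 60 - 2 = 58 \\
df_{\text{time}}                 &= T - 1 = 90 - 1 = 89 \\
df_{\text{condition} \times \text{time}} &= (G - 1)(T - 1) = 1 \times 89 = 89 \\
df_{\text{error (within)}}       &= (N - G)(T - 1) = 58 \times 89 = 5162
\end{aligned}
```

Under these assumptions, a statistic reported as $F(89, 5162)$ corresponds to the time effect or the Condition × Time interaction, while $F(1, 58)$ corresponds to the main effect of condition.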

Table 1: Mean body movement scores in the two conditions

Figure 1: Average fetal heart rate for the 2 min before voice onset, the 2 min of the mother’s or stranger’s voice, and the 2 min following voice offset

We also examined each fetus's maximum and minimum heart rate before and after stimulation began to verify the reliability of the findings. Twenty-one of the thirty fetuses in the mother's voice condition reached a higher peak after voice onset than before it, which is significantly above chance, $\chi^2(1, N = 30) = 4.80$, $p < .05$. Likewise, 21 of the 30 fetuses in the stranger's voice condition showed a lower minimum during stimulation, $\chi^2(1, N = 30) = 4.80$, $p < .05$. The findings of this study lend credence to the idea that experience with language begins before birth. When a fetus hears its mother's voice through a loudspeaker, its heart rate typically increases by about 5 bpm within the first 20 seconds after voice onset and remains at that level for the duration of the recording. When the fetus is exposed to a female stranger's voice presented in the same way, its heart rate decreases by about 4 bpm. Post hoc analysis showed that the difference in FHR between the two groups became statistically significant 26 seconds after stimulus onset. Because the two voices produced responses in opposite directions, the effect is not a general response to sound, which rules out the possibility that merely hearing a sound was responsible for the finding.
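The chi-square value reported for the peak and minimum heart rates can be reproduced with a short calculation. The sketch below assumes that "chance" means 15 of 30 fetuses (a probability of 0.5), which is the expectation that yields the reported value of 4.80. The function name `chi_square_vs_chance` is hypothetical.

```python
import math


def chi_square_vs_chance(successes, n, p_chance=0.5):
    """Chi-square goodness-of-fit test (1 df) of a count against chance.

    successes: number of fetuses showing the effect (e.g. 21).
    n: total number of fetuses in the condition (e.g. 30).
    p_chance: assumed chance probability of showing the effect.
    """
    expected_yes = n * p_chance
    expected_no = n * (1 - p_chance)
    observed_no = n - successes

    chi2 = ((successes - expected_yes) ** 2 / expected_yes
            + (observed_no - expected_no) ** 2 / expected_no)

    # With 1 degree of freedom, the upper-tail probability of the
    # chi-square distribution equals erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


chi2, p_value = chi_square_vs_chance(21, 30)
print(f"chi2(1, N = 30) = {chi2:.2f}, p = {p_value:.3f}")
```

With 21 of 30 fetuses, each cell deviates from its expected count of 15 by 6, so the statistic is $36/15 + 36/15 = 4.80$, which exceeds the 1-df critical value of 3.84 at the .05 level.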

The acoustic properties of the presentation channel are not responsible either, because the same channel was used for both groups of fetuses. Different fetuses heard different voices, yet each woman's voice was presented twice. The fetuses in both groups therefore heard the same sounds, but one group heard its own mother's voice while the other heard an unfamiliar voice. That the fetus reacts differently to its mother's voice than to the voice of a stranger suggests that it is capable of remembering and recognizing the unique qualities of that voice.

We found no difference in body movement between fetuses exposed to the mother's voice and those exposed to the stranger's voice, but there was a significant difference in their heart rate responses. Using only measures of body movement as the response, [8] likewise found that fetal behavior did not differ between the mother's familiar voice and the voices of unfamiliar people. The contrast between the movement and heart rate results shows that multiple response measures are required to understand the fetal sensory system fully.

Our findings also differ from those of studies that employed vibroacoustic stimuli or brief bursts of noise at 105 or 110 dB [9]. In that research on human fetal and neonatal behavior, the fetus shows immediate, short-latency body movement and heart rate changes within 20 s of stimulation [10][11]. Discrimination has also been examined using habituation and shifts in stimulus intensity [12].

Such responses have been interpreted in terms of the maturation of the fetal brain [13]. Joseph hypothesized that they are forebrain-independent reflexes originating in the brainstem. Reflexes and discriminations that occur within a few seconds of a stimulus are known to be mediated by the brainstem, and such responses are widely believed to be rigid. The present study examined fetal voice recognition rather than fetal speech discrimination, which sets it apart from earlier research on fetal perception. Given Joseph's account, it is not known whether the brainstem or higher-order structures mediate the voice recognition response confirmed in this investigation.

Our findings lend support to a theoretical model of speech perception that emphasizes the role of the prenatal environment in laying the groundwork for later language development. In particular, the findings fit well with epigenetic models informed by recent advances in the study of mammalian brain development [14]. Such models [2] postulate that species-specific experience interacts with the genetic expression of brain development. Werker and Tees hypothesize that shifts in experience-expectant processes account for infants' observable biases towards certain types of speech. If so, the change from experience-independent development (i.e., gene expression) to experience-expectant development would have to occur before birth.

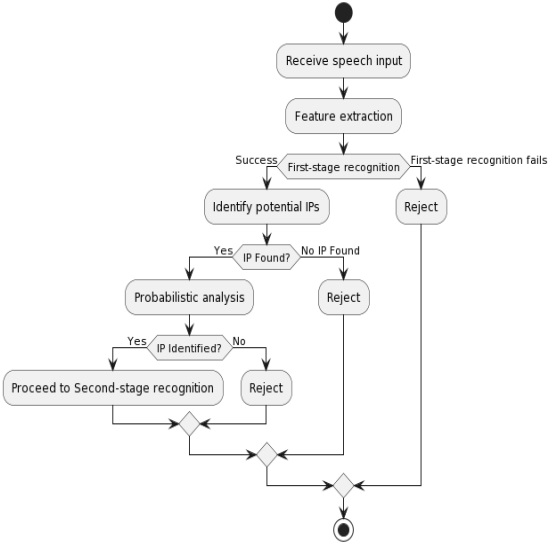

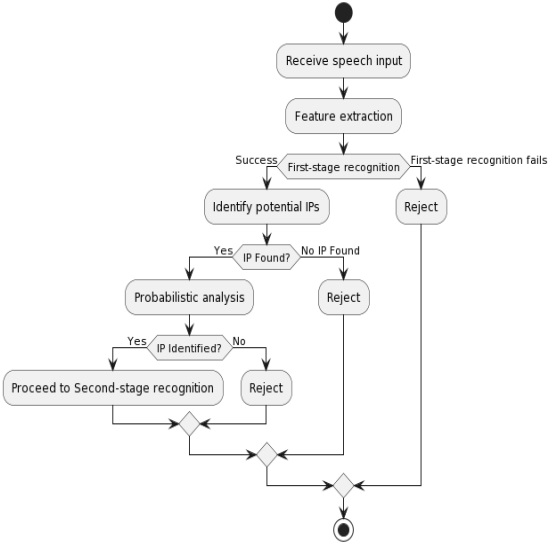

Figure 2: A process diagram of probabilistic IP identification in a two-stage speech recognition system.

Greenough and colleagues [13] suggest that general inputs from the external environment are crucial for organizing cortical circuits and that events occurring at critical periods constitute experience-expectant mechanisms. In this account, unneeded connections are removed by dendritic pruning [13], and the resulting structure serves as a foundation for later development. By contrast, "experience-dependent" processes store information that is unique to the individual. Synaptic connections are formed in response to events that provide information to be stored, and later experience may modify this information. Since both groups of fetuses received identical sounds, and the only apparent difference between the groups was the familiarity or novelty of the stimulus, experience must play a role in the differential behavior observed here. We cannot be sure whether the learning in this case is experience-expectant or experience-dependent, because the two kinds of process are unlikely to operate independently of each other. Repeated exposure to the mother's voice may provide a mechanism that leads to persistent memories of specific sounds, arising from experience-based processes before birth. Although Joseph [15] holds that reflexive brainstem activity mediates and governs human fetal behavior, the hypothesis of Greenough [16] suggests otherwise, linking changes in the brain to changes in perception. The experience-expectant account of development is heavily supported by research on the visual system [17].

However, the visual system cannot undergo experience-expectant development before birth because visual stimulation is lacking in the uterus. In contrast, the acoustic stimulation available to the fetus includes the mother's heartbeat, intestinal sounds, ambient sounds, and the mother's voice, so external auditory input is available during pregnancy. Beginning at about 30 weeks gestational age (GA), these sounds can shape the neural circuits associated with auditory stimulation in the uterus. A preference for the mother's voice and the ability to discriminate a change of language are present from birth. Newborns can also discriminate phonemes in languages other than their own. However, with exposure to their native language over the first year of life, infants lose much of this ability to discriminate non-native phonemes [17]. Thus newborns can discriminate phonemes in speech, term fetuses respond differently to the mother's and a stranger's voice (as reported here), and preterm fetuses can detect a change in consonant-vowel sounds. Neural development in auditory processing therefore appears to shift from experience-independent to experience-expectant once hearing begins, with the effect of native-language exposure on non-native speech sounds appearing in the final three months of the first year of life [18]. Wanting new things is a learned behavior that begins in early elementary school.

Throughout this paper, the word "familiar" indicates that a sensory stimulus has been experienced repeatedly over time so that it becomes known (i.e., is no longer novel). Learning and memory are prerequisites for such recognition. If the infant's recognition of the maternal voice depends on prosodic cues [19], the fetus appears to retain information about the prosody of the mother's voice after repeated exposure, which suggests that maternal speech produces changes in the brain. Exposure to the mother's voice occurs throughout fetal development. In the present study, the differences in heart rate between the familiar mother's voice and the unfamiliar female voice indicate memory and learning. The sustained changes in heart rate in response to the speech stimuli also suggest that the fetus can attend to external stimuli for extended periods. The works cited [13] are silent on the neural substrates underlying memory and attention, and it is currently unknown where these processes occur. We speculate that such a feat requires more than reflexive brainstem activity and instead points to some processing at a higher level. Preliminary imaging studies of the brain provide some support for this hypothesis. Electroencephalography (EEG) has been used to examine cortical activation in the fetal brain, and [20] found temporal lobe activation in the fetal brain in response to a recording of the mother's voice. [21] replicated and extended this work, paying particular attention to the role of the prefrontal and temporal regions. Our findings thus imply that fetal speech processing late in gestation is influenced by experience and may reflect the involvement of higher-order brain function.

Our findings have significant implications for language learning, for theories of early speech perception, and for future studies in these areas. First, the groundwork for speech perception and language acquisition may be laid before birth, as theorists such as [22] and [23] have hypothesized. Second, as [24] proposed, the advanced language skills displayed by infants and toddlers might not result from preexisting, inborn speech processing modules in the brain; prenatal experience may instead have a significant influence. Finally, at least in the area of speech processing, evidence of perception, memory, and attention may reflect the prenatal involvement of higher brain structures.

Conclusion

This study found that the mother's voice produced a considerable increase in fetal heart rate, whereas an unfamiliar voice was associated with a decrease. Because fetuses showed different physiological responses to familiar and unfamiliar voices, the results provide evidence that experience influences fetal sound processing. They lend credence to the epigenetic model of speech perception, in which the genetic expression of brain development interacts with species-specific experience to shape perceptual processes.

Acknowledgment:

This work was conducted with support from the Mir Chakar Khan Rind University of Technology, Dera Ghazi Khan.

References

[1] A. Taghipour, A. Gisladottir, F. Aletta, M. Bürgin, M. Rezaei, and U. Sturm, “Improved acoustics for semi-enclosed spaces in the proximity of residential buildings,” Internoise 2022 - 51st Int. Congr. Expo. Noise Control Eng., 2022, doi: 10.3397/IN_2022_0158.

[2] K. K. Ubrangala, “Short-term impact of anthropogenic environment on neuroplasticity: A study among humans and animals,” 2023, doi: 10.26481/DIS.20230705KK.

[3] D. Johnston, H. Egermann, and G. Kearney, “The Use of Binaural Based Spatial Audio in the Reduction of Auditory Hypersensitivity in Autistic Young People,” Int. J. Environ. Res. Public Health, vol. 19, no. 19, p. 12474, Sep. 2022, doi: 10.3390/IJERPH191912474.

[4] M. A. Lehloenya, “FOURTH SPACE: Sonic &/aural dimensions of Cape Town’s historic urban landscape,” MS thesis. Fac. Eng. Built Environ., 2022.

[5] M. White, N. Langenheim, T. Yang, and J. Paay, “Informing Streetscape Design with Citizen Perceptions of Safety and Place: An Immersive Virtual Environment E-Participation Method,” Int. J. Environ. Res. Public Health, vol. 20, no. 2, p. 1341, Jan. 2023, doi: 10.3390/IJERPH20021341.

[6] C. Velasco et al., “Perspectives on Multisensory Human-Food Interaction,” Front. Comput. Sci., vol. 3, Dec. 2021, doi: 10.3389/FCOMP.2021.811311.

[7] and E. R. Miller, Chase, “Translating Emotions from Sight to Sound,” 2023.

[8] J. P. M. A. de S. Cardielos, “Sound Designing Brands and Establishing Sonic Identities: the Sons Em Trânsito Music Agency,” Oct. 2023, Accessed: Dec. 25, 2023. [Online]. Available: https://repositorio-aberto.up.pt/handle/10216/153884

[9] “Building Sensory Sound Worlds at the Intersection of Music and Architecture - ProQuest.” Accessed: Dec. 25, 2023. [Online]. Available: https://www.proquest.com/openview/933f632eec3205f6de2d597b232dd137/1?pq-origsite=gscholar&cbl=18750&diss=y

[10] F. J. Seubert, “Music Theatre without Voice Facilitating and directing diverse participation for opera, musical, and pantomime”.

[11] B. Fritzsch, K. L. Elliott, and E. N. Yamoah, “Neurosensory development of the four brainstem-projecting sensory systems and their integration in the telencephalon,” Front. Neural Circuits, vol. 16, p. 913480, Sep. 2022, doi: 10.3389/FNCIR.2022.913480.

[12] V. Rosi, “The Metaphors of Sound: from Semantics to Acoustics - A Study of Brightness, Warmth, Roundness, and Roughness,” HAL Thesis, 2022, [Online]. Available: https://theses.hal.science/tel-03994903v1

[13] J. O’Hagan, “Dynamic awareness techniques for VR user interactions with bystanders,” Diss. Univ. Glas., 2023.

[14] “Mad auralities : sound and sense in contemporary performance - UBC Library Open Collections.” Accessed: Dec. 25, 2023. [Online]. Available: https://open.library.ubc.ca/soa/cIRcle/collections/ubctheses/24/items/1.0417588

[15] K. E. Primeau, “A GIS Approach to Landscape Scale Archaeoacoustics,” ProQuest, [Online]. Available: https://www.proquest.com/openview/1a935d9ea5e463d4be15d55303103b17/1?pq-origsite=gscholar&cbl=18750&diss=y

[16] T. Porcello and J. Patch, “Re-making sound : an experiential approach to sound studies”.

[17] J. Popham, “Sonorous Movement: Cellistic Corporealities in Works by Helmut Lachenmann, Simon Steen-Andersen, and Johan Svensson,” Diss. Theses, Capstone Proj., Sep. 2023, Accessed: Dec. 25, 2023. [Online]. Available: https://academicworks.cuny.edu/gc_etds/5425

[18] N. Bax, “Concrete, Space and Time: Mixed Reality and Nonlinear Narratives,” 2022.

[19] C. Tornatzky and B. Kelley, “An Artistic Approach to Virtual Reality,” An Artist. Approach to Virtual Real., pp. 1–208, Jan. 2023, doi: 10.1201/9781003363729.

[20] J. P. Monger, “Attuning to Multimodal Literacies in Teacher Education: A Case Study Analysis of Preservice Teachers’ Aesthetic Reader Responses to a Wordless Picture Book,” Diss. Indiana Univ., 2023.

[21] P. Rodgers, Design for people living with dementia. Accessed: Dec. 25, 2023. [Online]. Available: https://www.routledge.com/Design-for-People-Living-with-Dementia/A-Rodgers/p/book/9780367554750

[22] “Interactive Museum Tours: A Guide to In-Person and Virtual Experiences - 9781538167403.” Accessed: Dec. 25, 2023. [Online]. Available: https://rowman.com/ISBN/9781538167403/Interactive-Museum-Tours-A-Guide-to-In-Person-and-Virtual-Experiences

[23] K. Pickering, “Lakewood Megachurch: Bodily Dis/Orientations in a Climate of Belief,” Academia.edu. Accessed: Dec. 25, 2023. [Online]. Available: https://www.academia.edu/106603353/Lakewood_Megachurch_Bodily_Dis_Orientations_in_a_Climate_of_Belief

[24] N. Mirzoeff, “White Sight: Visual Politics and Practices of Whiteness,” White Sight, Feb. 2023, doi: 10.7551/MITPRESS/14620.001.0001.