Several software tools were used throughout this investigation, which was carried out on a Lenovo Legion 5 laptop with 16 GB of RAM and an NVIDIA RTX 2060 GPU. First, MATLAB was used to convert the original CT scans from DICOM format into PNG so that image processing could be applied to the raw data. Visual Studio Code was used to inspect the provided annotations, which were in XML format. All models were implemented in Python within the Google Colaboratory (Colab) environment, using libraries including NumPy, Keras, TensorFlow, and computer vision libraries. Image augmentation was first applied to the entire dataset to increase the number of scans and thereby improve the accuracy of the results. The augmented dataset was then partitioned into training and testing subsets.
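The augment-then-split order described above can be sketched as follows. This is a minimal illustration rather than the pipeline used in the study: the specific augmentations (a horizontal flip and a 90-degree rotation), the 80/20 ratio, and the helper names `augment` and `shuffle_split` are all assumptions, since the text does not state them.

```python
import numpy as np

def augment(images, labels):
    """Return the originals plus flipped and rotated copies (assumed transforms)."""
    flipped = images[:, :, ::-1]                    # mirror each scan left-right
    rotated = np.rot90(images, k=1, axes=(1, 2))    # rotate each (square) scan by 90 degrees
    aug_images = np.concatenate([images, flipped, rotated], axis=0)
    aug_labels = np.concatenate([labels, labels, labels], axis=0)
    return aug_images, aug_labels

def shuffle_split(images, labels, test_fraction=0.2, seed=0):
    """Shuffle the samples and split them into training and testing subsets."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(images))
    n_test = int(round(test_fraction * len(images)))
    test_idx, train_idx = order[:n_test], order[n_test:]
    return images[train_idx], images[test_idx], labels[train_idx], labels[test_idx]

# Toy stand-in for the CT slices: 10 square "scans" with binary labels.
scans = np.arange(10 * 4 * 4, dtype=np.float32).reshape(10, 4, 4)
targets = np.array([0, 1] * 5)

aug_scans, aug_targets = augment(scans, targets)
x_train, x_test, y_train, y_test = shuffle_split(aug_scans, aug_targets)
print(aug_scans.shape, x_train.shape, x_test.shape)
```

The sketch follows the order stated in the text, augmenting the whole dataset before the split.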

In the initial phase, a dataset of approximately 1780 patient CT scans was used, which yielded lower accuracy. Applying data augmentation both expanded the dataset and improved accuracy. To evaluate the proposed approach, this study uses the publicly accessible TCIA database as its data source [37][18]. The dataset was originally in DICOM (.dcm) format and, being three-dimensional, was viewed in the RadiAnt DICOM Viewer [38]. The .dcm files were then loaded in MATLAB and converted into .mat format for further processing.
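Converting CT data into an 8-bit image usually involves rescaling the stored values to Hounsfield units and then applying an intensity window. The sketch below illustrates that step in Python with NumPy; it is not the MATLAB code used in the study. The function name `window_to_uint8`, the default slope and intercept, and the lung-style window (centre -600, width 1500) are assumptions; in practice the slope and intercept would be read from the DICOM header.

```python
import numpy as np

def window_to_uint8(raw, slope=1.0, intercept=-1024.0, center=-600.0, width=1500.0):
    """Map raw CT values to 8-bit grey levels through an intensity window."""
    hu = raw.astype(np.float32) * slope + intercept      # stored values -> Hounsfield units
    low, high = center - width / 2.0, center + width / 2.0
    clipped = np.clip(hu, low, high)                     # discard values outside the window
    scaled = (clipped - low) / (high - low) * 255.0      # stretch the window to 0..255
    return np.round(scaled).astype(np.uint8)

# Toy 2x2 "slice" of raw stored values (hypothetical numbers).
raw_slice = np.array([[0, 424], [1174, 3000]], dtype=np.int16)
png_ready = window_to_uint8(raw_slice)
print(png_ready)
```

Values above the upper edge of the window saturate at 255, so dense structures such as bone map to white in the resulting image.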

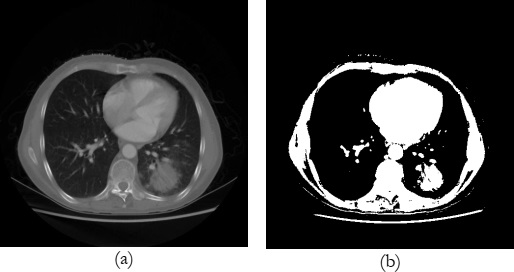

Figure 7 shows an example of an original image and its preprocessed counterpart: Figure 7(a) shows the original image of a randomly selected patient’s lungs, and Figure 7(b) shows the scan of the same patient after thresholding was applied to separate the lung region containing cancer from other structures such as bones and muscles.
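The text does not say which thresholding rule was used. As one possible illustration, the sketch below applies Otsu's method, which picks the grey level that maximises the between-class variance of an 8-bit image. Both the choice of Otsu's method and the decision to treat the darker class as the lung candidate (air-filled lung tissue appears dark on CT) are assumptions.

```python
import numpy as np

def otsu_threshold(image):
    """Return the 8-bit grey level that maximises between-class variance."""
    hist, _ = np.histogram(image, bins=256, range=(0, 256))
    prob = hist / hist.sum()
    levels = np.arange(256)
    w0 = np.cumsum(prob)                 # weight of the class with values <= t
    w1 = 1.0 - w0                        # weight of the class with values > t
    mu = np.cumsum(prob * levels)        # cumulative mean up to t
    mu_total = mu[-1]
    valid = (w0 > 0) & (w1 > 0)          # skip thresholds that leave one class empty
    between = np.zeros(256)
    between[valid] = (mu_total * w0[valid] - mu[valid]) ** 2 / (w0[valid] * w1[valid])
    return int(np.argmax(between))

# Toy image: a dark 4x4 block (lung-like) inside a bright background.
scan = np.full((8, 8), 200, dtype=np.uint8)
scan[2:6, 2:6] = 20

threshold = otsu_threshold(scan)
lung_candidate = scan <= threshold       # assumed polarity: dark pixels are kept
print(threshold, int(lung_candidate.sum()))
```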

After thresholding, region-based segmentation was applied to the image in Figure 7(b) to extract the region of interest (ROI), i.e., the lungs. The extracted lung region is marked by the purple bounding box in Figure 8(a).

Figure 8(b) shows a zoomed and cropped version of the area highlighted by the purple bounding box in Figure 8(a). To bring out the lung region more clearly, this study applies the Frangi filter, discussed in detail in the methodology section, to extract only the lung region, as shown in Figure 9.
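The bounding-box and cropping step can be sketched as follows, assuming a binary ROI mask is already available from the segmentation stage. The helpers `bounding_box` and `crop_to_roi` are hypothetical names; the sketch covers only the box and crop, not the region-based segmentation or the Frangi filter.

```python
import numpy as np

def bounding_box(mask):
    """Return (row_start, row_stop, col_start, col_stop) of the True region, or None."""
    rows = np.any(mask, axis=1)
    cols = np.any(mask, axis=0)
    if not rows.any():
        return None                                  # empty mask: no ROI found
    r0, r1 = np.where(rows)[0][[0, -1]]
    c0, c1 = np.where(cols)[0][[0, -1]]
    return int(r0), int(r1) + 1, int(c0), int(c1) + 1

def crop_to_roi(image, mask):
    """Crop the image to the bounding box of the mask (image returned as-is if mask is empty)."""
    box = bounding_box(mask)
    if box is None:
        return image
    r0, r1, c0, c1 = box
    return image[r0:r1, c0:c1]

# Toy example: an 8x8 image with a rectangular ROI.
image = np.arange(64).reshape(8, 8)
roi_mask = np.zeros((8, 8), dtype=bool)
roi_mask[2:5, 3:7] = True

box = bounding_box(roi_mask)
cropped = crop_to_roi(image, roi_mask)
print(box, cropped.shape)
```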

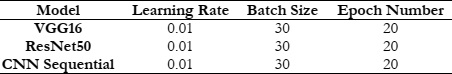

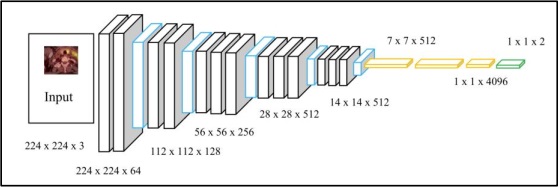

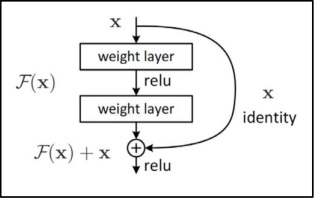

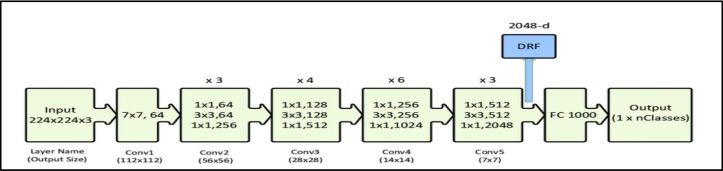

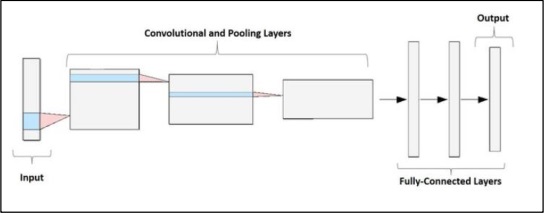

Once the regions of interest (ROIs) have been identified, they are divided into two classes, normal and cancerous, on the basis of the ground-truth annotations supplied with the publicly available dataset. After this division, three tuned deep learning models, VGG16, ResNet50, and CNN Sequential, as described in the methodology section, are applied in turn to the extracted lung regions. To assess these models, the performance measures accuracy, loss, precision, recall, and F1 score were calculated using Eqs. (2), (3), (4), and (5); they are plotted separately for each of the three models and discussed accordingly below.
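For reference, the four classification measures can be computed from the confusion-matrix counts as in the sketch below. It uses the standard definitions, which are assumed to correspond to Eqs. (2) to (5); the function name and the toy labels are illustrative only.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall and F1 score for a binary task."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)

    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)) if (precision + recall) else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# Toy labels: 1 = cancerous, 0 = normal (hypothetical predictions).
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 1, 0, 0, 0, 1, 1, 0]
metrics = classification_metrics(y_true, y_pred)
print(metrics)
```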

Figure 10 shows the performance of VGG16 in terms of loss and accuracy. Figure 10(a) shows the training loss and accuracy on the TCIA dataset, whereas Figure 10(b) shows the training and validation accuracy on the same dataset. Figure 10(a) indicates that VGG16 reaches a training accuracy of 98.02% with a training loss of 20.0%. Figure 10(b) shows that VGG16 obtains training and testing accuracies of 98.0% and 95.0%, respectively.

Similarly, Figure 11 shows the performance of ResNet50 in terms of loss and accuracy. Figure 11(a) shows the training loss and accuracy on the TCIA dataset, whereas Figure 11(b) shows the training and validation accuracy on the same dataset. Figure 11(a) indicates that ResNet50 reaches a training accuracy of 96.4% with a training loss of 13.8%. Figure 11(b) shows that ResNet50 obtains training and testing accuracies of 96.4% and 93.0%, respectively.

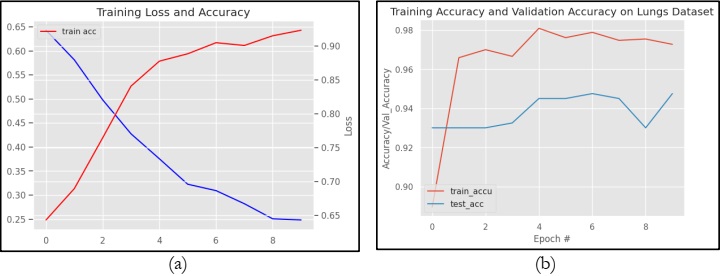

Likewise, Figure 12 shows the performance of the CNN Sequential model in terms of loss and accuracy. Figure 12(a) shows the training loss and accuracy on the TCIA dataset, whereas Figure 12(b) shows the training and validation accuracy on the same dataset. Figure 12(a) indicates that the CNN Sequential model reaches a training accuracy of 97.5% with a training loss of 65%. Figure 12(b) shows that the CNN Sequential model obtains training and testing accuracies of 97.5% and 94.1%, respectively.

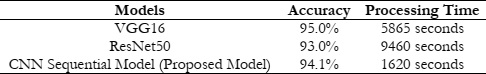
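As a small worked example, the gap between training and testing accuracy for each model can be derived from the figures quoted in the three paragraphs above. The dictionary layout and variable names are illustrative; only the percentages come from the text.

```python
# Training and testing accuracies (in percent) as quoted for each model.
reported = {
    "VGG16": {"train": 98.0, "test": 95.0},
    "ResNet50": {"train": 96.4, "test": 93.0},
    "CNN Sequential": {"train": 97.5, "test": 94.1},
}

# Difference in percentage points, rounded to one decimal to avoid float noise.
gaps = {name: round(acc["train"] - acc["test"], 1) for name, acc in reported.items()}

for name, gap in gaps.items():
    print(f"{name}: train-test gap = {gap} percentage points")
```

On these figures the gap is about 3.0 points for VGG16 and 3.4 points for both ResNet50 and the CNN Sequential model.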

After training and testing the three models, VGG16, ResNet50, and CNN Sequential, the tuned models are applied to the testing dataset with the fine-tuned parameters listed in Table 1. For testing, the performance measures accuracy, precision, recall, and F1 score are computed using Eqs. (2), (3), (4), and (5), and are displayed graphically in Figure 13.

In Figure 13, the colors blue, orange, and gray denote the performance of VGG16, ResNet50, and CNN Sequential, respectively. Figure 13 shows that VGG16 obtains higher precision than both ResNet50 and CNN Sequential. The F1 score is comparable for VGG16 and CNN Sequential, whereas ResNet50 has a considerably lower F1 score than the other two models. The same pattern holds for recall: VGG16 and CNN Sequential perform comparably, whereas ResNet50 has considerably lower recall. For accuracy, Figure 13 shows that VGG16 is the highest of the three. Although its margin over CNN Sequential is small, it may still be considered meaningful for the purposes of performance comparison.

It can be concluded from the overall experimentation that VGG16 performs almost on par with the CNN Sequential model, whereas ResNet50 shows lower performance on the TCIA dataset. In terms of training cost, VGG16 is much slower to train than the CNN Sequential model, and ResNet50 is likewise slow to train because of its complexity relative to VGG16 and the CNN Sequential model.

To further analyze the three models, VGG16, ResNet50, and CNN Sequential, this study also compares their processing times, shown in Table 2. Table 2 shows that VGG16 achieves the highest accuracy at the cost of a longer processing time of 5865 seconds. The CNN Sequential model obtains similar accuracy with a much shorter processing time of 1620 seconds; the small difference in accuracy relative to VGG16 is acceptable. This is because the model learns its distinctive features by itself and is computationally efficient. Hence, it may be concluded that the CNN Sequential model offers the best overall balance of accuracy and processing time for the classification of lung adenocarcinoma in the TCIA dataset.
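Processing time of this kind can be measured with a wall-clock timer around the training call. The sketch below shows one way to do so and also works out the ratio of the two times quoted above. The `stopwatch` helper and the toy workload are assumptions, and the text does not state how the times in Table 2 were actually measured.

```python
import time
from contextlib import contextmanager

@contextmanager
def stopwatch(results, name):
    """Record the wall-clock duration of the enclosed block under results[name]."""
    start = time.perf_counter()
    try:
        yield
    finally:
        results[name] = time.perf_counter() - start

# Stand-in for a training run; a real call such as model.fit(...) would go here.
timings = {}
with stopwatch(timings, "toy workload"):
    sum(i * i for i in range(100_000))

# Processing times in seconds as quoted in the text.
reported_seconds = {"VGG16": 5865, "CNN Sequential": 1620}
speedup = reported_seconds["VGG16"] / reported_seconds["CNN Sequential"]
print(f"CNN Sequential is about {speedup:.2f}x faster than VGG16")
```

On the quoted figures, the CNN Sequential model needs roughly 3.6 times less processing time than VGG16.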

This study focuses on analyzing deep learning models on a single dataset. In future work, the approach may be applied to multiple, more challenging datasets, as well as to real-world data, to assess its efficiency, accuracy, and robustness.

[1] “What Is Cancer? - NCI.” Accessed: Dec. 18, 2023. [Online]. Available: https://www.cancer.gov/about-cancer/understanding/what-is-cancer

[2] “Lung Cancer Facts 2023 - Lung Cancer Research Foundation.” Accessed: Dec. 18, 2023. [Online]. Available: https://www.lungcancerresearchfoundation.org/lung-cancer-facts/

[3] “Automatic nodule detection for lung cancer in CT images: A review. Computers in Biology and Medicine | 10.1016/j.compbiomed.2018.10.033.” Accessed: Dec. 18, 2023. [Online]. Available: https://sci-hub.se/10.1016/j.compbiomed.2018.10.033

[4] S. Makaju, P. W. C. Prasad, A. Alsadoon, A. K. Singh, and A. Elchouemi, “Lung Cancer Detection using CT Scan Images,” Procedia Comput. Sci., vol. 125, pp. 107–114, Jan. 2018, doi: 10.1016/J.PROCS.2017.12.016.

[5] L. Wang, “Deep Learning Techniques to Diagnose Lung Cancer,” Cancers, vol. 14, no. 22, p. 5569, Nov. 2022, doi: 10.3390/CANCERS14225569.

[6] S. S. Bhimji and J. M. Wallen, “Lung Adenocarcinoma,” StatPearls, Jun. 2023, Accessed: Dec. 18, 2023. [Online]. Available: https://www.ncbi.nlm.nih.gov/books/NBK519578/

[7] Y. Feng, P. Hao, P. Zhang, X. Liu, F. Wu, and H. Wang, “Supervoxel based weakly-supervised multi-level 3D CNNs for lung nodule detection and segmentation,” J. Ambient Intell. Humaniz. Comput., pp. 1–11, Mar. 2019, doi: 10.1007/S12652-018-01170-5.

[8] S. Balannolla, A. Kousar Nikhath, and S. Yeruva, “Detection and Classification of Lung Carcinoma using CT scans,” J. Phys. Conf. Ser., vol. 2286, no. 1, p. 012011, Jul. 2022, doi: 10.1088/1742-6596/2286/1/012011.

[9] P. Tripathi, S. Tyagi, and M. Nath, “A Comparative Analysis of Segmentation Techniques for Lung Cancer Detection,” Pattern Recognit. Image Anal., vol. 29, no. 1, pp. 167–173, Jan. 2019, doi: 10.1134/S105466181901019X.

[10] A. Kumar, M. Fulham, D. Feng, and J. Kim, “Co-Learning Feature Fusion Maps from PET-CT Images of Lung Cancer,” IEEE Trans. Med. Imaging, vol. 39, no. 1, pp. 204–217, Jan. 2020, doi: 10.1109/TMI.2019.2923601.

[11] M. K. N. R. A. Aenney, G. Bishwabidyalay, M. A. Rahman, “Detection of Lung Nodules using Image Processing Techniques,” 2019.

[12] R. Gasparri et al., “Detection of Lung cancer using Digital image Processing techniques and Artificial Neural Networks,” J. Phys. Conf. Ser., vol. 2327, no. 1, p. 012078, Aug. 2022, doi: 10.1088/1742-6596/2327/1/012078.

[13] Tasmim et al., “A Lightweight-CNN Model for Efficient Lung Cancer Detection and Grad-CAM Visualization,” pp. 254–258, Nov. 2023, doi: 10.1109/ICICT4SD59951.2023.10303569.

[14] A. K. Swain, A. Swetapadma, J. K. Rout, and B. K. Balabantaray, “Classification of NSCLC types using sparse deep neural network features,” Biomed. Signal Process. Control, vol. 87, p. 105485, Jan. 2024, doi: 10.1016/J.BSPC.2023.105485.

[15] V. Kapoor, A. Mittal, S. Garg, M. Diwakar, A. K. Mishra, and P. Singh, “Lung Cancer Detection Using VGG16 and CNN,” Proc. - 2023 IEEE World Conf. Appl. Intell. Comput. AIC 2023, pp. 758–762, 2023, doi: 10.1109/AIC57670.2023.10263901.

[16] P. M. Bruntha, S. I. A. Pandian, J. Anitha, S. S. Abraham, and S. N. Kumar, “A novel hybridized feature extraction approach for lung nodule classification based on transfer learning technique,” J. Med. Phys., vol. 47, no. 1, pp. 1–9, Jan. 2022, doi: 10.4103/JMP.JMP_61_21.

[17] Q. Zhang, H. Wang, S. W. Yoon, D. Won, and K. Srihari, “Lung Nodule Diagnosis on 3D Computed Tomography Images Using Deep Convolutional Neural Networks,” Procedia Manuf., vol. 39, pp. 363–370, Jan. 2019, doi: 10.1016/J.PROMFG.2020.01.375.

[18] K. Clark et al., “The cancer imaging archive (TCIA): Maintaining and operating a public information repository,” J. Digit. Imaging, vol. 26, no. 6, pp. 1045–1057, Dec. 2013, doi: 10.1007/S10278-013-9622-7.

[19] “An Efficient Approach Towards Delineation of Lungs and Pulmonary Nodule Detection | Request PDF.” Accessed: Dec. 18, 2023. [Online]. Available: https://www.researchgate.net/publication/348447874_An_Efficient_Approach_Towards_Delineation_of_Lungs_and_Pulmonary_Nodule_Detection

[20] “Lung Cancer Detection of CT Lung Images.” Accessed: Dec. 18, 2023. [Online]. Available: https://www.researchgate.net/publication/333666243_Lung_Cancer_Detection_of_CT_Lung_Images

[21] “Lung Cancer Detection Based on CT Scan Images by Using Deep Transfer Learning | IIETA.” Accessed: Dec. 18, 2023. [Online]. Available: https://www.iieta.org/journals/ts/paper/10.18280/ts.360406

[22] T. Hachaj and M. Piekarczyk, “High-Level Hessian-Based Image Processing with the Frangi Neuron,” Electronics, vol. 12, no. 19, p. 4159, Oct. 2023, doi: 10.3390/ELECTRONICS12194159.

[23] M. Alamgeer et al., “Deep Learning Enabled Computer Aided Diagnosis Model for Lung Cancer using Biomedical CT Images,” Comput. Mater. Contin., vol. 73, no. 1, pp. 1437–1448, May 2022, doi: 10.32604/CMC.2022.027896.

[24] M. Sharma, J. S. Bhatt, and M. V. Joshi, “Early detection of lung cancer from CT images: nodule segmentation and classification using deep learning,” vol. 10696, no. 13, pp. 226–233, Apr. 2018, doi: 10.1117/12.2309530.

[25] M. Jasmine Pemeena Priyadarsini et al., “Lung Diseases Detection Using Various Deep Learning Algorithms,” J. Healthc. Eng., vol. 2023, 2023, doi: 10.1155/2023/3563696.

[26] D. Z. Karim and T. A. Bushra, “Detecting Lung Cancer from Histopathological Images using Convolution Neural Network,” IEEE Reg. 10 Annu. Int. Conf. Proceedings/TENCON, vol. 2021-December, pp. 626–631, 2021, doi: 10.1109/TENCON54134.2021.9707242.

[27] W. Alakwaa, M. Nassef, and A. Badr, “Lung Cancer Detection and Classification with 3D Convolutional Neural Network (3D-CNN),” Int. J. Adv. Comput. Sci. Appl., vol. 8, no. 8, pp. 66–73, 2017, doi: 10.14569/IJACSA.2017.080853.

[28] M. Praveena, A. Ravi, T. Srikanth, B. H. Praveen, B. S. Krishna, and A. S. Mallik, “Lung Cancer Detection using Deep Learning Approach CNN,” 7th Int. Conf. Commun. Electron. Syst. ICCES 2022 - Proc., pp. 1418–1423, 2022, doi: 10.1109/ICCES54183.2022.9835794.

[29] V. G. Biradar, P. K. Pareek, K. S. Vani, and P. Nagarathna, “Lung Cancer Detection and Classification using 2D Convolutional Neural Network,” MysuruCon 2022 - 2022 IEEE 2nd Mysore Sub Sect. Int. Conf., 2022, doi: 10.1109/MYSURUCON55714.2022.9972595.

[30] T. T. Al-Shouka and K. M. A. Alheeti, “A Transfer Learning for Intelligent Prediction of Lung Cancer Detection,” AICCIT 2023 - Al-Sadiq Int. Conf. Commun. Inf. Technol., pp. 54–59, 2023, doi: 10.1109/AICCIT57614.2023.10217967.

[31] P. Ardimento, L. Aversano, M. L. Bernardi, and M. Cimitile, “Deep Neural Networks Ensemble for Lung Nodule Detection on Chest CT Scans,” Proc. Int. Jt. Conf. Neural Networks, vol. 2021-July, Jul. 2021, doi: 10.1109/IJCNN52387.2021.9534176.

[32] R. V. M. da Nóbrega, P. P. Rebouças Filho, M. B. Rodrigues, S. P. P. da Silva, C. M. J. M. Dourado Júnior, and V. H. C. de Albuquerque, “Lung nodule malignancy classification in chest computed tomography images using transfer learning and convolutional neural networks,” Neural Comput. Appl., vol. 32, no. 15, pp. 11065–11082, Aug. 2020, doi: 10.1007/S00521-018-3895-1.

[33] I. D. Apostolopoulos et al., “Automatic classification of solitary pulmonary nodules in PET/CT imaging employing transfer learning techniques,” Med. Biol. Eng. Comput., vol. 59, no. 6, pp. 1299–1310, Jun. 2021, doi: 10.1007/S11517-021-02378-Y.

[34] R. Indumathi and R. Vasuki, “Segmentation and Feature Extraction in Lung CT Images with Deep Learning Model Architecture,” SN Comput. Sci., vol. 4, no. 5, pp. 1–14, Sep. 2023, doi: 10.1007/S42979-023-01892-0.

[35] P. Yuvarani, S. Hemalatha, S. Kaviya Sri, and P. Madhubala, “Analysis of Lungs CT Image Using Deep Learning Techniques,” 2023 9th Int. Conf. Adv. Comput. Commun. Syst. ICACCS 2023, pp. 1347–1352, 2023, doi: 10.1109/ICACCS57279.2023.10113003.

[36] R. V. M. Da Nóbrega, S. A. Peixoto, S. P. P. Da Silva, and P. P. R. Filho, “Lung Nodule Classification via Deep Transfer Learning in CT Lung Images,” Proc. - IEEE Symp. Comput. Med. Syst., vol. 2018-June, pp. 244–249, Jul. 2018, doi: 10.1109/CBMS.2018.00050.

[37] G. H. K. Bosmans, “CT-PET imaging of lung cancer patients for radiotherapy,” Jan. 2007, doi: 10.26481/DIS.20071220GB.

[38] and V. K. A. C. K. S, R. V Patil, “SEGEMENTATION AND PREDICTION FROM CT IMAGES FOR DETECTING LUNG CANCER”.