Report Generation of Lungs Diseases from Chest X-ray using NLP

Iqra Naz¹, Shagufta Iftikhar¹, Anmol Zahra¹, Syeda Zainab Yousuf Zaidi¹

¹ NUML, National University of Modern Languages, Islamabad, Pakistan

* Corresponding author: Iqra Naz, Email: bscs-ms18-id019@numls.edu.pk

Citation: I. Naz, S. Iftikhar, A. Zahra, and S. Z. Y. Zaidi, “Report Generation of Lungs Diseases from Chest X-ray using NLP,” International Journal of Innovations in Science and Technology, vol. 3, Special Issue, pp. 223-233, 2022.

Received: Jan 26, 2022; Revised: Feb 10, 2022; Accepted: Feb 24, 2022; Published: Feb 26, 2022.

________________________________________________________________________

Abstract.

Pulmonary diseases are severe health complications that impose a massive health burden worldwide. They include pneumonia, asthma, tuberculosis, COVID-19 and lung cancer, among others. Evidence shows that around 65 million people suffer from chronic obstructive pulmonary disease (COPD) and nearly 3 million die of it each year, making it the third leading cause of death worldwide. Timely diagnosis is essential to reduce the burden of lung diseases. Computer-aided diagnosis (CAD) systems support doctors in the analysis of medical images. This study presents a report generation system that automates the interpretation of chest X-rays (CXRs) and reduces human effort, with the aim of helping people obtain a timely diagnosis of chronic lung diseases and thereby reducing the death rate. The system can be of particular help in rural areas, where the doctor-to-patient ratio is only 1 doctor per 1300 people: after using the application, an affected individual can seek further treatment for the diagnosed condition. The proposed system is based on a deep convolutional neural network (DCNN) that is fine-tuned within an end-to-end trainable CNN-RNN architecture. Using a patient-wise split of the OpenI dataset, we trained a CNN-RNN model with attention. Our model achieved a validation accuracy of 94%, the highest among the models we evaluated.

Keywords: Attention, chest X-rays, classification, convolutional neural network, deep learning, natural language processing, pulmonary diseases, recurrent neural network, report generation.

INTRODUCTION

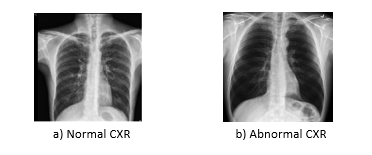

Over the past few years, human health has been severely threatened by pulmonary diseases. Millions of people suffer from COVID-19, pneumonia, asthma and tuberculosis every year, and because these diseases are often not detected at an early stage, they can lead to death. Several diagnostic imaging modalities, such as chest X-ray (CXR, shown in Figure 1), computed tomography (CT) and magnetic resonance imaging (MRI), are available today to support the clinical diagnosis of pulmonary diseases. For early-stage examination, chest X-rays are used because they are inexpensive and easily accessible [1].

Figure 1. Representation of Normal and Abnormal chest radiographs

Nowadays, massive numbers of CXRs are produced worldwide [1]. However, a shortage of diagnostic resources, such as trained radiologists, is a major obstacle to robust pulmonary diagnosis [2]. Diagnosing pulmonary diseases with high reliability is a demanding task even for radiologists, and underdeveloped countries in particular lack expert radiologists who are competent to read chest radiographs. Compared with diagnosis by humans alone, computer-aided diagnosis (CAD) tools can achieve more reliable results with fewer diagnostic errors [3]. Researchers have therefore proposed various lung disease detection systems and developed algorithms that detect pulmonary diseases from chest radiographs.

Many lung disease detection and report generation systems exist, such as Thorax-Net [1] and TieNet [4], which are briefly discussed in the related work below. However, these existing systems still have weaknesses. Previous research has been driven predominantly by the aspiration to create an automated diagnostic system that can improve the survival rate of patients, yet correctly interpreting the information remains a major challenge. Although many studies have been published in this field, progress in system performance has been slow.

Recently, pre-trained language models have achieved across-the-board success, which has inspired researchers to focus on generating multimodal representations with Transformer-based pre-trained models [5]. To the best of our knowledge, developing an automated report generation system for pulmonary diseases remains a challenging task. The proposed system is a deep convolutional neural network with regularized attention that classifies pulmonary diseases from CXRs. Deep learning methods have brought major advances in computer vision applications, and this success has led many researchers to employ DCNNs. The model contains a classification branch that learns image representations and an attention branch that learns discriminative attention maps to improve classification performance. CheXNet [6] serves as the classification branch, and the outputs of both branches are aggregated to produce the diagnosis for each input. An automatic radiological diagnosis and reporting system of this kind is therefore an important framework to build.

This paper presents a study assessing the viability of an attention-based CNN-RNN report generation system for diagnosing diseases in CXRs. We estimated sensitivity, specificity and positive predictive value by comparing the results of the automated report generation system with other related studies.

This section reviews recent work on pulmonary diseases. Multiple pulmonary diagnosis schemes based on machine learning and deep learning exist, e.g., transformers, LSTMs and CNN-RNNs. Identification of lung disease depends predominantly on radiological patterns [7]. Timely recognition of lung diseases can lead to their cure and stop the spread of infection.

TieNet was proposed by Xiaosong Wang et al. [4]. It classifies CXRs using image features and text embeddings from the associated reports. Their auto-annotation framework achieved an accuracy above 0.9 in assigning disease labels on their hand-labeled evaluation dataset [4]. They also extended TieNet into a CXR reporting system. Notably, some of its "false" predictions were findings that were visible in the image and verified by a radiologist but were not mentioned in the original report, which suggests that the network can, to some extent, associate the appearance of an image with its textual description.

Mendonça et al. [8] evaluated the feasibility of a natural language processing (NLP) based monitoring system to screen for healthcare-associated pneumonia in neonates. To find evidence of radiographic abnormalities, the system examined radiology reports. They evaluated sensitivity, specificity and positive predictive value by comparing the system's outputs with clinicians' decisions. Sensitivity was 71% and specificity was 99% [8]. Their results showed that the automated approach was effective, but, being NLP-based, the system had limitations: 1) incorrect punctuation and poor grammar in reports led to errors in recognizing sentence boundaries; 2) a number of abbreviations were missed; 3) terms were misspelled. In short, the results do not guarantee that the system would be useful in a clinical setting, because many further steps would be needed before such a monitoring system could actually be used.

Horry et al. [9] worked on automated lung cancer diagnosis, applying deep learning techniques to classify an independent lung nodule dataset into benign and malignant classes with good results. The maximum accuracy achieved with positive prediction was greater than 81%.

Wang, Hongyu et al. [1] proposed Thorax-Net, an attention-regularized deep neural network. Using the patient-wise official split of the ChestX-ray14 dataset, Thorax-Net was evaluated against three state-of-the-art deep learning models and attained maximum per-class accuracies of 0.7876 and 0.896 without using external training data. It still had limitations: 1) the training dataset was imbalanced; 2) the lack of annotations of pathological abnormalities led to inaccuracy; 3) many images carried more than one disease label.

Lovelace et al. [10] developed a transformer-based radiology report generation model that, compared with competitive baselines, generates better reports as measured by both standard language generation metrics and clinical coherence metrics. Evaluating radiology reports is difficult because the language generation metrics typically used to assess image captioning systems do not readily capture clinical content. They extracted clinical information from their generated reports in a differentiable way. They first trained a report generation model with a standard natural language generation (NLG) objective, L_NLG, and then fine-tuned it with an additional clinical coherence objective to make its reports more clinically coherent. Although standard metrics estimate the linguistic similarity between generated and reference reports, they cannot measure how clinically correct the generated reports are.

Table 1. Comparison of related studies

| No. | Authors | Year | Dataset | Methodology | Results |
|---|---|---|---|---|---|
| 1 | Wang, Xiaosong et al. | 2018 | Three datasets: ChestX-ray14, hand-labeled, OpenI | Multi-level attention models integrated into an end-to-end trainable CNN-RNN architecture | 90% accuracy |
| 2 | Wang, Hongyu et al. | 2019 | ChestX-ray14 | Attention-regularized deep neural network | 78% and 89% accuracy in two experiments |
| 3 | Horry, Michael J. et al. | 2020 | LIDC-IDRI | Deep learning techniques | Greater than 81% accuracy |
| 4 | Mendonça et al. | 2005 | Dataset from the National Institute of Nursing Research | NLP-based monitoring framework | Sensitivity 71%, specificity 99% |
| 5 | Lovelace et al. | 2020 | MIMIC-CXR | Transformer architecture and natural language generation (NLG) objective L_NLG | (90%, 97%) accuracy |

Material and methods. This section describes a pulmonary diagnostic strategy based on a CNN-RNN architecture with an attention mechanism. We explored two methodologies, both trained on the standard OpenI dataset [11] from Indiana University, and evaluated the predictions of the models trained on OpenI.

The proposed model comprises a classification branch, which learns the image representation and extracts features, and an attention branch, which focuses on probable abnormal regions. Finally, both branches are combined to produce the diagnosis.

Dataset

Machine learning projects require a dataset to train and test the model; for this purpose we used OpenI, a freely available dataset from Indiana University [11]. Indiana University assembled this publicly accessible radiography dataset from multiple institutions. We acquired 3955 distinct CXR examinations with their reports, comprising 3820 frontal and 3646 lateral images. These were annotated with key concepts from the OpenI dataset, including findings, diagnoses and body parts. In our evaluation, we selected the 3820 distinct frontal-view images and their corresponding reports.

The images in the dataset were stored in Portable Network Graphics (PNG) format and were then rescaled to 1024 × 1024. The dataset was divided into training, testing and validation subsets on the basis of patient ID, so that each patient's data appears in only one of the subsets. The training set contained 2506 images, the testing set 200 images and the validation set 500 images.

Preliminaries of Deep Learning

For the proposed system we tested two methodologies: a CNN-RNN and a CNN-RNN with an attention mechanism.

Basic architecture of CNN

The key CNN architecture comprises the layers listed below:

CONVOLUTION LAYER

Each unit of a convolution layer [12] is connected to a local patch of units in the previous layer through a set of filter weights. The resulting locally weighted sums form a feature map, which is passed through an activation function, the Rectified Linear Unit (ReLU) [13].
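The operation described above can be sketched in plain Python. This is a toy illustration, not the paper's implementation: it assumes a single-channel input, one 2 × 2 filter, stride 1 and no padding, and, like most deep learning frameworks, it computes cross-correlation (the filter is not flipped). The function names are our own.

```python
def conv2d_valid(image, kernel, bias=0.0):
    """Slide `kernel` over `image` (stride 1, no padding) and return the
    map of locally weighted sums, i.e. the pre-activation feature map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = bias
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        feature_map.append(row)
    return feature_map


def relu_map(feature_map):
    """ReLU applied element-wise: negative responses become zero."""
    return [[max(0.0, v) for v in row] for row in feature_map]


# Toy 3 x 4 image with a dark-to-bright vertical edge between columns 1 and 2.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
vertical_edge = [[-1, 1],
                 [-1, 1]]
activated = relu_map(conv2d_valid(image, vertical_edge))
print(activated)  # [[0.0, 2.0, 0.0], [0.0, 2.0, 0.0]]
```

The filter responds only where the edge is, and ReLU discards the negative response that the mirrored filter would produce.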

POOLING LAYER

The pooling layer merges semantically similar features and patterns within each feature map. It computes the maximum or the average of the input features over local regions of the previous layer and uses these values as the output feature map.
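A minimal sketch of both pooling variants, assuming non-overlapping 2 × 2 windows with stride 2 on a single toy feature map; `pool2d` is a hypothetical helper, not a library call.

```python
def _mean(values):
    return sum(values) / len(values)


def pool2d(feature_map, size=2, mode="max"):
    """Non-overlapping pooling (stride == size): each size x size window of
    `feature_map` is summarised by its maximum or its average."""
    reduce_fn = max if mode == "max" else _mean
    pooled = []
    for i in range(0, len(feature_map) - size + 1, size):
        row = []
        for j in range(0, len(feature_map[0]) - size + 1, size):
            window = [feature_map[i + di][j + dj]
                      for di in range(size) for dj in range(size)]
            row.append(reduce_fn(window))
        pooled.append(row)
    return pooled


fmap = [
    [1, 3, 2, 0],
    [4, 2, 1, 1],
    [0, 1, 5, 6],
    [2, 2, 7, 8],
]
print(pool2d(fmap, mode="max"))      # [[4, 2], [2, 8]]
print(pool2d(fmap, mode="average"))  # [[2.5, 1.0], [1.25, 6.5]]
```

Either way the 4 × 4 map shrinks to 2 × 2, which is what lets later layers see larger regions of the image.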

FULLY-CONNECTED LAYER

In a fully-connected layer, every unit is connected to every unit of the preceding layer. Generally, two or three stacks of convolution and pooling layers are placed before the fully-connected layer to extract features [14].

SOFTMAX LAYER

ReLU can be used as the activation function to introduce non-linearity and to achieve faster learning convergence [13]. The softmax layer at the output converts the final scores into a probability distribution over the classes. During learning, the weights of the units are optimized to reduce misclassification; a stochastic gradient descent optimizer is usually used to apply the gradients computed by the backpropagation algorithm to the weights.
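The following sketch ties the fully-connected layer, the softmax output and the stochastic gradient descent update together for a single example. It assumes a single dense layer, so backpropagation reduces to the closed-form gradient p - y of softmax with cross-entropy; the weights, learning rate and input are illustrative values only, and the helper names are our own.

```python
import math


def dense(x, weights, bias):
    """Fully-connected layer: every output unit is connected to every input unit."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def softmax(scores):
    """Turn raw scores into a probability distribution (max subtracted for stability)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def sgd_step(x, target, weights, bias, lr=0.5):
    """One stochastic gradient descent update for a dense + softmax classifier
    trained with cross-entropy; the gradient w.r.t. the scores is p - y."""
    probs = softmax(dense(x, weights, bias))
    loss = -math.log(probs[target])
    grad = [p - (1.0 if k == target else 0.0) for k, p in enumerate(probs)]
    new_weights = [[w - lr * g * xi for w, xi in zip(row, x)]
                   for row, g in zip(weights, grad)]
    new_bias = [b - lr * g for b, g in zip(bias, grad)]
    return new_weights, new_bias, loss


# Toy problem: a 2-feature input that should be assigned to class 2 of 3.
x, target = [1.0, 0.5], 2
weights = [[0.0, 0.0] for _ in range(3)]
bias = [0.0, 0.0, 0.0]
losses = []
for _ in range(50):
    weights, bias, loss = sgd_step(x, target, weights, bias)
    losses.append(loss)
probs = softmax(dense(x, weights, bias))
```

The first loss equals log 3 (all classes equally likely), and repeated updates shift probability mass onto the target class.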

Basic architecture of RNN

The basic RNN architecture contains the following layers.

EMBEDDING LAYER

The embedding layer maps each word to a fixed-length vector. Unlike a one-hot vector of 0s and 1s, the resulting dense vector contains real values. These fixed-length word vectors represent words more expressively and with fewer dimensions.
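To make the contrast with 0/1 vectors concrete, the sketch below shows that an embedding lookup returns the same vector as multiplying a one-hot vector by the embedding matrix, only more cheaply. The four-word vocabulary and the 3-dimensional vectors are made up for illustration (the paper uses 300-dimensional GloVe vectors).

```python
# Hypothetical vocabulary and embedding matrix (vocabulary size x embedding size).
vocab = {"<pad>": 0, "no": 1, "effusion": 2, "heart": 3}
embedding_matrix = [
    [0.0, 0.0, 0.0],
    [0.2, -0.1, 0.7],
    [0.5, 0.4, -0.3],
    [-0.6, 0.9, 0.1],
]


def one_hot(index, size):
    """Sparse 0/1 representation of a word id."""
    return [1.0 if i == index else 0.0 for i in range(size)]


def embed_by_matmul(index):
    """Multiply the one-hot row vector by the embedding matrix."""
    oh = one_hot(index, len(embedding_matrix))
    dim = len(embedding_matrix[0])
    return [sum(oh[r] * embedding_matrix[r][c] for r in range(len(oh)))
            for c in range(dim)]


def embed_by_lookup(index):
    """What an embedding layer does in practice: read row `index` directly."""
    return embedding_matrix[index]


sentence = ["no", "effusion"]
dense_vectors = [embed_by_lookup(vocab[w]) for w in sentence]  # two 3-d real-valued vectors
```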

LSTM LAYER

An LSTM layer [16] contains several memory units (cells). Each cell in the layer has an internal cell state and outputs a hidden state.
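One time step of an LSTM layer can be sketched as follows, assuming the common formulation with input, forget and output gates and a tanh candidate (peephole connections omitted). The parameter layout and the all-zero toy weights are our own assumptions, chosen so that the result is easy to check by hand.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def matvec(m, v):
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def lstm_step(x, h_prev, c_prev, params):
    """One time step of an LSTM layer with len(h_prev) memory cells.
    params[gate] = (W, U, b): input weights, recurrent weights and bias."""
    def gate(name, activation):
        W, U, b = params[name]
        pre = [wx + uh + bb for wx, uh, bb in zip(matvec(W, x), matvec(U, h_prev), b)]
        return [activation(p) for p in pre]

    i = gate("input", sigmoid)        # how much new content to write
    f = gate("forget", sigmoid)       # how much of the old cell state to keep
    o = gate("output", sigmoid)       # how much of the cell state to expose
    g = gate("candidate", math.tanh)  # candidate content
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]  # inner cell state
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]                    # output hidden state
    return h, c


def zero_params(n_cells, n_inputs):
    """All-zero weights: every gate then outputs 0.5 and the candidate is 0."""
    return {name: ([[0.0] * n_inputs for _ in range(n_cells)],
                   [[0.0] * n_cells for _ in range(n_cells)],
                   [0.0] * n_cells)
            for name in ("input", "forget", "output", "candidate")}


params = zero_params(n_cells=2, n_inputs=3)
h1, c1 = lstm_step([1.0, 2.0, 3.0], [0.0, 0.0], [1.0, -2.0], params)
# With these weights the cell state is simply halved: c1 == [0.5, -1.0]
```

Each of the two cells keeps its own cell state and emits its own hidden value, which is what "several memory units inside the layer" means in practice.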

DENSE LAYER

The dense layer [17] outputs the prediction. It is a fully connected layer that usually follows the LSTM layers.

Attention Mechanism

The attention mechanism is an enhancement of the CNN-RNN model. In encoder-decoder models, the fixed-size context vector is a bottleneck that makes long sentences difficult to handle. Attention, presented by Bahdanau et al. [18] and Luong et al. [19], solved this problem and greatly improved the quality of translation systems. The technique lets the model concentrate on the relevant regions of the input. Specifically, it adds an extra step to the reasoning procedure: by assigning different importance to the features of different image regions, it identifies the salient regions. The attention weights α are computed by a scoring function whose parameters are learned from the data.
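The defining function did not survive in the text. For reference, the standard additive attention of Bahdanau et al. [18], written for image regions, is given below. The notation is our assumption rather than the paper's: a_i is the feature vector of region i (i = 1..L), h_{t-1} is the previous decoder hidden state, W_a, U_a and v_a are learned parameters, α_{t,i} are the attention weights learned from the data, and c_t is the context vector passed to the decoder at step t.

```latex
e_{t,i} = v_a^{\top} \tanh\left( W_a a_i + U_a h_{t-1} \right), \qquad
\alpha_{t,i} = \frac{\exp(e_{t,i})}{\sum_{k=1}^{L} \exp(e_{t,k})}, \qquad
c_t = \sum_{i=1}^{L} \alpha_{t,i} \, a_i
```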

Results and Discussion

This section presents the techniques we implemented. The first approach is an encoder-decoder model in which the encoder is a CNN and the decoder is an RNN; the second approach is a CNN-RNN with an attention mechanism. First, the entire OpenI dataset was cleaned and prepared. We cleaned the text by converting all characters to lowercase; performing simple decontractions; removing punctuation except full stops (because findings contain multiple sentences); removing numbers from the findings; removing words of length ≤ 2 (e.g., 'is', 'to'), except the word 'no', which is kept because it carries meaning; and removing the repeated spaces, repeated full stops and 'X' characters that frequently occur in findings.
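The cleaning steps above can be sketched with Python's `re` module. This is an assumption-laden illustration, not the authors' code: the paper does not list its decontraction rules, so `CONTRACTIONS` is a hypothetical minimal table, and runs of 'x' are assumed to be the anonymisation placeholders (such as 'XXXX') that occur in OpenI findings.

```python
import re

# Hypothetical "simple decontraction" table; the paper does not list its rules.
# Order matters: the specific forms must be replaced before the generic "n't".
CONTRACTIONS = {
    "won't": "will not",
    "can't": "can not",
    "n't": " not",
    "'re": " are",
    "'s": " is",
    "'d": " would",
    "'ll": " will",
    "'ve": " have",
    "'m": " am",
}


def decontract(text):
    for short, full in CONTRACTIONS.items():
        text = text.replace(short, full)
    return text


def clean_findings(text):
    """Lowercase, decontract, drop 'xxxx' placeholders, punctuation (except
    full stops), numbers and words of length <= 2 other than 'no'."""
    text = decontract(text.lower())
    text = re.sub(r"x{2,}", " ", text)     # assumed anonymisation placeholders
    text = re.sub(r"[^\w\s.]", " ", text)  # punctuation except full stops
    text = re.sub(r"\d+", " ", text)       # numbers
    sentences = []
    for sentence in text.split("."):
        words = [w for w in sentence.split() if w == "no" or len(w) > 2]
        if words:  # empty pieces come from repeated full stops
            sentences.append(" ".join(words))
    return (". ".join(sentences) + ".") if sentences else ""


print(clean_findings("The heart is normal in size. XXXX is clear... No pneumothorax, 2 effusions."))
# the heart normal size. clear. no pneumothorax effusions.
```

Splitting on full stops and re-joining also collapses repeated spaces and repeated full stops in one pass.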

After preprocessing, the dataset was split into training, testing and validation sets, taking into account that one individual can contribute multiple data points. For example, a patient may have four images with a single corresponding report; because the model works with pairs of CXRs, several records are created for that one person. The dataset must therefore be split by patient to avoid data leakage. We split it using the unique 'Patient Id' feature and then trained the model. The training, testing and validation sets consist of 2506, 200 and 500 images, respectively.
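A patient-wise split can be sketched as below: records are grouped by patient ID and whole patients, never individual images, are assigned to a subset, so no patient appears in two subsets. The record layout (a `patient_id` key), the seeded shuffle and the split by patient counts are assumptions for illustration; the paper reports its subset sizes in images.

```python
import random
from collections import defaultdict


def patient_wise_split(records, n_test_patients, n_val_patients, seed=0):
    """Assign whole patients (not single images) to train/test/validation so
    that no patient's images appear in more than one subset."""
    by_patient = defaultdict(list)
    for record in records:
        by_patient[record["patient_id"]].append(record)
    patients = sorted(by_patient)
    random.Random(seed).shuffle(patients)
    test_ids = patients[:n_test_patients]
    val_ids = patients[n_test_patients:n_test_patients + n_val_patients]
    train_ids = patients[n_test_patients + n_val_patients:]

    def gather(ids):
        return [record for pid in ids for record in by_patient[pid]]

    return gather(train_ids), gather(test_ids), gather(val_ids)


# Toy data: 10 patients with 1 to 3 image records each.
records = [{"patient_id": p, "image": f"{p}_{k}.png"}
           for p in range(10) for k in range(p % 3 + 1)]
train, test, val = patient_wise_split(records, n_test_patients=2, n_val_patients=3)
```

Splitting on image rows instead would let images of the same patient land in both training and test sets, which inflates test scores.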

Given a pair of images, our model generates the report one word at a time. Before feeding the text into the model, we applied a tokenizer to it. The model inputs are the images and a partial report, as shown in the block diagram in Figure 2. Each image is converted into a fixed-size vector before being fed to the model; we do this through transfer learning with CheXNet, a 121-layer convolutional neural network trained on ChestX-ray14 (one of the largest publicly available chest radiography datasets), which contains more than 100,000 frontal X-ray images labeled with 14 diseases. The goal here is to obtain the bottleneck features of each image.
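The tokenizer step can be sketched in pure Python as below. It mimics a frequency-ranked word index with 0 reserved for padding, which is how Keras-style tokenizers usually behave, but it is a hypothetical stand-in rather than the library call the authors used: ties are broken alphabetically here, and the `startseq`/`endseq` boundary tokens are our assumption.

```python
from collections import Counter


def build_word_index(reports):
    """Rank words by frequency (ties broken alphabetically here); index 0 is reserved for padding."""
    counts = Counter(word for report in reports for word in report.split())
    ranked = sorted(counts, key=lambda w: (-counts[w], w))
    return {word: idx for idx, word in enumerate(ranked, start=1)}


def texts_to_padded_sequences(reports, word_index, max_len):
    """Map words to integer ids (unknown words dropped), truncate to max_len and post-pad with 0."""
    sequences = []
    for report in reports:
        ids = [word_index[w] for w in report.split() if w in word_index][:max_len]
        sequences.append(ids + [0] * (max_len - len(ids)))
    return sequences


reports = [
    "startseq no acute disease endseq",
    "startseq heart size normal no effusion endseq",
]
word_index = build_word_index(reports)
padded = texts_to_padded_sequences(reports, word_index, max_len=10)
print(padded[0])  # [3, 2, 4, 5, 1, 0, 0, 0, 0, 0]
```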

Figure 2. Flow of study

Encoder-Decoder model:

The two approaches take their image features from different CheXNet [6] layers; in the first approach (CNN-RNN), we took the output of the average-pooling layer (ReLU, avg_pooling). We rescaled every CXR to (224, 224, 3) and fed it to CheXNet, which produced a 1024-dimensional feature vector per image. The feature vectors of the two images are concatenated to obtain 2048 features. For training and validation, we created two data generators, each producing BATCH_SIZE data points at a time.
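The feature concatenation and the batch generator can be sketched as below. The per-image vectors are placeholders (in the paper they come from CheXNet), and `pair_features` and `batch_generator` are hypothetical helpers written for illustration.

```python
import random

FEATURE_DIM = 1024  # length of one CheXNet feature vector per image


def pair_features(first_vec, second_vec):
    """Concatenate the vectors of the two images into one 2048-d encoder input."""
    assert len(first_vec) == len(second_vec) == FEATURE_DIM
    return first_vec + second_vec


def batch_generator(samples, batch_size, seed=0):
    """Endlessly yield shuffled batches of `batch_size` samples; the last
    batch of each pass may be smaller."""
    rng = random.Random(seed)
    order = list(range(len(samples)))
    while True:
        rng.shuffle(order)
        for start in range(0, len(order), batch_size):
            yield [samples[k] for k in order[start:start + batch_size]]


# Placeholder features standing in for real CheXNet outputs.
samples = [(pair_features([0.0] * FEATURE_DIM, [1.0] * FEATURE_DIM), f"report {k}")
           for k in range(5)]
train_gen = batch_generator(samples, batch_size=2)
first_batch = next(train_gen)
```

Because the generator produces batches lazily, the full set of feature vectors never has to be expanded into training samples in memory at once.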

As stated previously, our encoder-decoder model generates the report word by word (sequence-to-sequence). The model takes the image features and a partial sequence as input and generates the next word in the sequence. To train the model, each input sequence is split into input-output pairs. We also used the merge architecture, in which the RNN is never exposed to the image vector; instead, the image is presented to the language model only after the RNN has encoded the entire prefix.
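Splitting one report into input-output pairs can be sketched as follows: each prefix of the tokenized report becomes an input and the word that follows it becomes the target. The token ids and the `"IMAGE_VECTOR"` placeholder are toy values, and in practice each prefix would also be padded to a fixed length before batching.

```python
def make_training_pairs(image_features, token_ids, pad_id=0):
    """Expand one (image features, tokenized report) example into word-by-word
    samples ((image_features, prefix), next_word). In a merge architecture the
    RNN would encode `prefix` alone and the image vector would be joined with
    the RNN output afterwards; this function only builds the samples."""
    ids = [t for t in token_ids if t != pad_id]
    samples = []
    for k in range(1, len(ids)):
        samples.append(((image_features, ids[:k]), ids[k]))
    return samples


# Toy token ids of a short report followed by padding zeros.
pairs = make_training_pairs("IMAGE_VECTOR", [3, 2, 4, 5, 1, 0, 0])
for (features, prefix), next_word in pairs:
    print(prefix, "->", next_word)
# [3] -> 2
# [3, 2] -> 4
# [3, 2, 4] -> 5
# [3, 2, 4, 5] -> 1
```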

Next, we used an embedding layer in which each word is mapped to a 300-dimensional representation using the pre-trained GloVe model [20], and we set the parameter trainable=False so that these weights do not change during training.
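Building the frozen embedding weights can be sketched as follows, assuming the plain-text GloVe format (one word followed by its space-separated vector components per line) and a word index whose ids run contiguously from 1. Words without a pre-trained vector, and the padding index 0, keep all-zero rows. In Keras the resulting matrix is typically loaded into an `Embedding` layer, for example through `embeddings_initializer=keras.initializers.Constant(matrix)` together with `trainable=False`; the toy 3-dimensional vectors below stand in for the 300-dimensional ones used in the paper.

```python
def parse_glove_lines(lines):
    """Parse GloVe text format: a word followed by its vector components."""
    vectors = {}
    for line in lines:
        word, *values = line.rstrip().split(" ")
        vectors[word] = [float(v) for v in values]
    return vectors


def build_embedding_matrix(word_index, pretrained, dim):
    """Row i holds the pre-trained vector of the word with id i; row 0
    (padding) and words without a pre-trained vector stay all-zero."""
    matrix = [[0.0] * dim for _ in range(len(word_index) + 1)]
    for word, idx in word_index.items():
        vector = pretrained.get(word)
        if vector is not None:
            matrix[idx] = list(vector)
    return matrix


# Toy 3-d vectors standing in for 300-d GloVe vectors.
glove_lines = ["no 0.1 0.2 0.3", "effusion -0.4 0.5 0.6"]
pretrained = parse_glove_lines(glove_lines)
word_index = {"no": 1, "effusion": 2, "cardiomegaly": 3}
matrix = build_embedding_matrix(word_index, pretrained, dim=3)
```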

To mask the zero padding in the sequences, we created a masked loss function so that the model learns only from the actual words of the report and the padded positions do not contribute to the loss. We used categorical cross-entropy as the loss function because the output words are one-hot encoded. The Adam optimizer was used with a learning rate of 0.001, and the model's predictions were examined with two techniques: beam search and greedy search.
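The masking idea can be sketched without a framework: time steps whose true word is the padding id 0 are skipped, and the loss is averaged over the remaining steps only. This is our illustration of a masked categorical cross-entropy, not the authors' code, and the probabilities below are invented.

```python
import math


def masked_categorical_crossentropy(y_true, y_pred, pad_id=0, eps=1e-12):
    """Mean cross-entropy over the time steps whose true word is not padding.

    y_true: one one-hot list per time step; y_pred: the predicted probability
    distribution for each time step (same shape)."""
    total, count = 0.0, 0
    for target, probs in zip(y_true, y_pred):
        true_id = target.index(1)
        if true_id == pad_id:      # padded position: ignored by the loss
            continue
        total += -math.log(max(probs[true_id], eps))
        count += 1
    return total / count if count else 0.0


# Three time steps over a 3-word vocabulary; the last step is padding (id 0).
y_true = [[0, 1, 0], [0, 0, 1], [1, 0, 0]]
y_pred = [[0.1, 0.8, 0.1], [0.2, 0.2, 0.6], [0.01, 0.98, 0.01]]
loss = masked_categorical_crossentropy(y_true, y_pred)
```

Without the mask, the padded step would add -log(0.01), about 4.6, to the sum and dominate the loss even though it carries no information about the report.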

Encoder-Decoder with Attention:

Because the trained CNN-RNN model did not achieve satisfactory accuracy, we extended it with an attention mechanism. Attention was proposed to improve encoder-decoder models by letting the decoder focus on the relevant parts of the input sequence. Instead of a fully connected representation, we used the output of a convolutional layer of the pre-trained network (transfer learning), which retains spatial information.
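The sketch below shows additive (Bahdanau-style) attention over spatial region vectors, which is what keeping a convolutional output instead of a fully connected vector makes possible. The dimensions are toy values (the paper's encoder sees 98 regions of 1024 features), and the parameter names `W_a`, `U_a` and `v_a` are our own.

```python
import math


def matvec(m, v):
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def additive_attention(features, hidden, W_a, U_a, v_a):
    """Score each region vector against the decoder state, normalise the
    scores with softmax and return (context vector, attention weights)."""
    proj_h = matvec(U_a, hidden)
    scores = []
    for a_i in features:
        z = [math.tanh(p + q) for p, q in zip(matvec(W_a, a_i), proj_h)]
        scores.append(sum(v * zk for v, zk in zip(v_a, z)))
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    alphas = [e / total for e in exps]
    context = [sum(alpha * a_i[d] for alpha, a_i in zip(alphas, features))
               for d in range(len(features[0]))]
    return context, alphas


# Toy encoder output: L = 3 regions with D = 2 features each.
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
hidden = [1.0]                       # decoder state, H = 1
W_a = [[1.0, 0.0], [0.0, 1.0]]       # A x D with A = 2
U_a = [[0.0], [0.0]]                 # A x H
v_a = [1.0, 1.0]
context, alphas = additive_attention(features, hidden, W_a, U_a, v_a)
```

The third region, which is active in both feature dimensions, receives the largest weight, and the context vector is the correspondingly weighted average of the regions.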

This model used the same dataset, split and tokenizer as the CNN-RNN model described above. It was trained with teacher forcing, but this time we did not convert the text into word-by-word input-output pairs. CheXNet was used again, but whereas the first approach (CNN-RNN) took the ReLU (avg_pooling) layer as output, here the output was taken from bn (ReLU activation), the final convolutional layer of CheXNet. After merging the two images, the output shape was (None, 7, 14, 1024), so after reshaping the encoder input was (None, 98, 1024).
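The shapes quoted above can be reproduced with nested lists. We assume the two 7 × 7 CheXNet maps were joined along the width axis, which is consistent with the reported (7, 14, 1024) shape, and then flattened row by row into 7 × 14 = 98 region vectors. The channel count is reduced to 4 so the toy data stays small, and the helper names are hypothetical.

```python
def make_feature_map(h, w, c, offset):
    """Toy stand-in for a CheXNet convolutional output of shape (h, w, c)."""
    return [[[offset + (i * w + j) * c + k for k in range(c)] for j in range(w)]
            for i in range(h)]


def concat_width(map_a, map_b):
    """Join two (h, w, c) maps side by side into one (h, 2w, c) map."""
    return [row_a + row_b for row_a, row_b in zip(map_a, map_b)]


def flatten_spatial(feature_map):
    """Reshape (h, w, c) into (h * w, c): one c-dim vector per region, row-major."""
    return [pixel for row in feature_map for pixel in row]


H, W, C = 7, 7, 4   # the paper's maps have C = 1024 channels
image_1 = make_feature_map(H, W, C, offset=0)
image_2 = make_feature_map(H, W, C, offset=1000)
merged = concat_width(image_1, image_2)   # shape (7, 14, C)
regions = flatten_spatial(merged)         # shape (98, C): the encoder input
```

After flattening, the attention decoder can weight each of the 98 regions, which span both images, independently.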

The model again has two parts, an encoder and a decoder, but the decoder now contains an additional attention module (a one-step attention decoder). We used the Keras [21] subclassing API, which gave us additional customizability and control over the architecture. The same loss function, optimizer and learning rate of 0.001 were used. As with the previous model, beam and greedy search were used for prediction and the BLEU score [22] was used to evaluate the predictions. After training, this model reached satisfactory accuracy.
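For reference, a compact sentence-level BLEU [22] is sketched below: clipped n-gram precisions up to 4-grams, combined by a geometric mean with uniform weights, times a brevity penalty. It applies no smoothing, so a library implementation such as NLTK's `nltk.translate.bleu_score.sentence_bleu` may give different values when a smoothing function is selected. This is an illustration, not the evaluation code used in the paper.

```python
import math
from collections import Counter


def ngram_counts(tokens, n):
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu(references, candidate, max_n=4):
    """Sentence-level BLEU: clipped n-gram precisions (n = 1..max_n),
    uniform geometric mean, times the brevity penalty. No smoothing."""
    if not candidate:
        return 0.0
    log_precision_sum = 0.0
    for n in range(1, max_n + 1):
        cand_counts = ngram_counts(candidate, n)
        max_ref_counts = Counter()
        for ref in references:
            for gram, cnt in ngram_counts(ref, n).items():
                max_ref_counts[gram] = max(max_ref_counts[gram], cnt)
        clipped = sum(min(cnt, max_ref_counts[gram]) for gram, cnt in cand_counts.items())
        if clipped == 0:
            return 0.0  # any zero precision makes unsmoothed BLEU zero
        log_precision_sum += math.log(clipped / sum(cand_counts.values())) / max_n
    c = len(candidate)
    r = min((abs(len(ref) - c), len(ref)) for ref in references)[1]  # closest reference length
    brevity_penalty = 1.0 if c > r else math.exp(1 - r / c)
    return brevity_penalty * math.exp(log_precision_sum)


reference = "the heart size is normal".split()
print(sentence_bleu([reference], reference))  # 1.0
```

A shorter candidate such as "the heart is normal" loses both on bigram precision (2 of 3 bigrams match) and on the brevity penalty.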

Table 2. Comparison of both approaches

| Model | Activation function | Loss function | Optimizer | Learning rate | No. of epochs | Batch size |
|---|---|---|---|---|---|---|
| CNN-RNN | softmax | Categorical cross-entropy | Adam | 0.001 | 40 | 1 |
| CNN-RNN with attention mechanism | softmax | Sparse categorical cross-entropy | Adam | 0.001 | 10 | 14 |

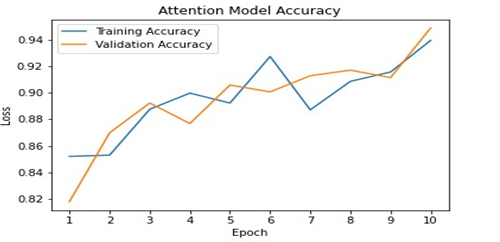

In this section, we explore and analyze both approaches, the CNN-RNN and the CNN-RNN with attention. The benchmark OpenI dataset is used to evaluate the efficacy of the proposed methodology. Training and testing were performed on Google Colab with 16 GB of RAM and a GPU. The experiments were performed on the two architectures presented in Table 2. Figure 3 shows the training and validation accuracies of the attention model.

Figure 3. Accuracy Curves of Attention model

When training the CNN-RNN with the attention mechanism, we achieved a satisfactory training accuracy of 96% and a validation accuracy of 94%, as shown in Figure 3. To examine these methods, beam search and greedy search were used. Greedy search outputs the most probable word at each time step. Beam search, in contrast, keeps the most probable partial sentences at each time step, scoring each by the product of its word probabilities, and returns the sentence with the highest overall probability. Greedy search is much faster than beam search, although beam search tends to generate more accurate sentences. We used the BLEU score as the metric for evaluating the predictions.
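The difference between the two decoding strategies can be seen on a toy next-word model. `TOY_MODEL` is hypothetical: it conditions only on the previous word, whereas the real decoder conditions on the image features and the whole prefix. With these numbers, greedy search commits to the locally best first word and ends with a less probable report than beam search with width 2. Log-probabilities are summed instead of multiplying probabilities, which is equivalent but numerically safer.

```python
import math

# Hypothetical next-word distributions, conditioned on the previous word only.
TOY_MODEL = {
    "<start>": {"no": 0.6, "mild": 0.4},
    "no": {"acute": 0.5, "effusion": 0.3, "<end>": 0.2},
    "mild": {"effusion": 0.9, "<end>": 0.1},
    "acute": {"<end>": 1.0},
    "effusion": {"<end>": 1.0},
}


def next_word_probs(sequence):
    return TOY_MODEL[sequence[-1]]


def greedy_search(max_len=10):
    """Append the single most probable word at every step."""
    seq, log_p = ["<start>"], 0.0
    while seq[-1] != "<end>" and len(seq) < max_len:
        word, p = max(next_word_probs(seq).items(), key=lambda kv: kv[1])
        seq.append(word)
        log_p += math.log(p)
    return seq, math.exp(log_p)


def beam_search(beam_width=2, max_len=10):
    """Keep the `beam_width` most probable partial sentences at every step."""
    beams, finished = [(0.0, ["<start>"])], []
    while beams:
        candidates = [(log_p + math.log(p), seq + [word])
                      for log_p, seq in beams
                      for word, p in next_word_probs(seq).items()]
        candidates.sort(key=lambda cand: cand[0], reverse=True)
        beams = []
        for log_p, seq in candidates[:beam_width]:
            if seq[-1] == "<end>" or len(seq) >= max_len:
                finished.append((log_p, seq))
            else:
                beams.append((log_p, seq))
    best_log_p, best_seq = max(finished, key=lambda cand: cand[0])
    return best_seq, math.exp(best_log_p)


greedy_seq, greedy_p = greedy_search()   # ['<start>', 'no', 'acute', '<end>'], p = 0.3
beam_seq, beam_p = beam_search()         # ['<start>', 'mild', 'effusion', '<end>'], p = 0.36
```

Greedy search evaluates one continuation per step, while beam search evaluates beam_width times as many, which is why greedy search is faster but can miss the more probable sentence.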

Conclusion. We have proposed a CAD model for generating reports of pulmonary diseases from CXRs. Using a patient-wise split of the OpenI dataset, we assessed this model against two state-of-the-art deep learning models. Our model achieved a validation accuracy of 94%, the highest performance among the models we evaluated.

Acknowledgement. Acknowledgements are considered necessary.

Author’s Contribution. Syeda Zainab Yousuf Zaidi supervised this paper. Anmol Zahra, Shagufta Iftikhar and Iqra Naz carried out the implementation and the write-up. All authors read and approved the final manuscript.

Conflict of interest. All authors declare that they have no conflicts of interest.

Project details. This paper is based on a project that was deployed on localhost and completed in November 2021.

REFERENCES

[1] H. Wang, H. Jia, L. Lu, and Y. Xia, “Thorax-Net: An Attention Regularized Deep Neural Network for Classification of Thoracic Diseases on Chest Radiography,” IEEE J. Biomed. Health Inform., vol. 24, no. 2, pp. 475–485, Feb. 2020, doi: 10.1109/JBHI.2019.2928369.

[2] Z. Ul Abideen et al., “Uncertainty Assisted Robust Tuberculosis Identification With Bayesian Convolutional Neural Networks,” IEEE Access, vol. 8, pp. 22812–22825, 2020, doi: 10.1109/ACCESS.2020.2970023.

[3] Z. Huo et al., “Quality assurance and training procedures for computer-aided detection and diagnosis systems in clinical use,” Med. Phys., vol. 40, no. 7, 2013, doi: 10.1118/1.4807642.

[4] X. Wang, Y. Peng, L. Lu, Z. Lu, and R. M. Summers, “TieNet: Text-Image Embedding Network for Common Thorax Disease Classification and Reporting in Chest X-rays.”

[5] J. Shi, C. Liu, C. T. Ishi, and H. Ishiguro, “3D skeletal movement-enhanced emotion recognition networks,” APSIPA Trans. Signal Inf. Process., vol. 10, pp. 1060–1066, Dec. 2021, doi: 10.1017/ATSIP.2021.11.

[6] M. Almuhayar, H. H. S. Lu, and N. Iriawan, “Classification of Abnormality in Chest X-Ray Images by Transfer Learning of CheXNet,” ICICOS 2019 - 3rd Int. Conf. Informatics Comput. Sci. Accel. Informatics Comput. Res. Smarter Soc. Era Ind. 4.0, Proc., p. 8982455, Oct. 2019, doi: 10.1109/ICICOS48119.2019.8982455.

[7] W. E. Brant and C. A. Helms, “Fundamentals of Diagnostic Radiology.” https://en.1lib.ae/book/2738744/017b45 (accessed Feb. 26, 2022).

[8] E. A. Mendonça, J. Haas, L. Shagina, E. Larson, and C. Friedman, “Extracting information on pneumonia in infants using natural language processing of radiology reports,” J. Biomed. Inform., vol. 38, no. 4, pp. 314–321, Aug. 2005, doi: 10.1016/J.JBI.2005.02.003.

[9] M. Horry et al., “Deep mining generation of lung cancer malignancy models from chest x-ray images,” Sensors, vol. 21, no. 19, Oct. 2021, doi: 10.3390/S21196655.

[10] J. Lovelace and B. Mortazavi, “Learning to Generate Clinically Coherent Chest X-Ray Reports,” Find. Assoc. Comput. Linguist. Find. ACL EMNLP 2020, pp. 1235–1243, 2020, doi: 10.18653/V1/2020.FINDINGS-EMNLP.110.

[11] D. Demner-Fushman et al., “Preparing a collection of radiology examinations for distribution and retrieval,” J. Am. Med. Inform. Assoc., vol. 23, no. 2, pp. 304–310, Jul. 2015, doi: 10.1093/JAMIA/OCV080.

[12] Y. Jeon and J. Kim, “Active Convolution: Learning the Shape of Convolution for Image Classification,” Accessed: Feb. 26, 2022. [Online]. Available: https://github.com/.

[13] V. Nair and G. E. Hinton, “Rectified Linear Units Improve Restricted Boltzmann Machines.”

[14] S. H. S. Basha, S. R. Dubey, V. Pulabaigari, and S. Mukherjee, “Impact of fully connected layers on performance of convolutional neural networks for image classification,” Neurocomputing, vol. 378, pp. 112–119, Feb. 2020, doi: 10.1016/J.NEUCOM.2019.10.008.

[15] N. M. Nasrabadi, “Book Review: Pattern Recognition and Machine Learning,” J. Electron. Imaging, vol. 16, no. 4, p. 049901, Oct. 2007, doi: 10.1117/1.2819119.

[16] K. Greff, R. K. Srivastava, J. Koutník, B. R. Steunebrink, and J. Schmidhuber, “LSTM: A Search Space Odyssey,” IEEE Trans. Neural Netw. Learn. Syst., doi: 10.1109/TNNLS.2016.2582924.

[17] S. Wu, S. Zhao, Q. Zhang, L. Chen, and C. Wu, “Steel surface defect classification based on small sample learning,” Appl. Sci., vol. 11, no. 23, 2021, doi: 10.3390/app112311459.

[18] D. Bahdanau, K. H. Cho, and Y. Bengio, “Neural Machine Translation by Jointly Learning to Align and Translate,” 3rd Int. Conf. Learn. Represent. ICLR 2015 - Conf. Track Proc., Sep. 2014, Accessed: Feb. 26, 2022. [Online]. Available: https://arxiv.org/abs/1409.0473v7.

[19] M. T. Luong, H. Pham, and C. D. Manning, “Effective Approaches to Attention-based Neural Machine Translation,” Conf. Proc. - EMNLP 2015 Conf. Empir. Methods Nat. Lang. Process., pp. 1412–1421, Aug. 2015, doi: 10.18653/v1/d15-1166.

[20] J. Pennington, R. Socher, and C. D. Manning, “GloVe: Global Vectors for Word Representation,” EMNLP 2014 - 2014 Conf. Empir. Methods Nat. Lang. Process. Proc. Conf., pp. 1532–1543, 2014, doi: 10.3115/V1/D14-1162.

[21] A. Gulli, A. Kapoor, and S. Pal, “Deep Learning with TensorFlow 2 and Keras - Second Edition,” p. 646.

[22] K. Papineni, S. Roukos, T. Ward, and W.-J. Zhu, “Bleu: a Method for Automatic Evaluation of Machine Translation,” Proc. 40th Annu. Meet. Assoc. Comput. Linguist., pp. 311–318, 2002, doi: 10.3115/1073083.1073135.