Analysis of MLP, CNN, and Transfer Learning Using VGG-16 for CIFAR-10 Dataset

Keywords:

Artificial Neural Networks (ANN), Convolutional Neural Network (CNN), Multi-Layer Perceptron (MLP), CIFAR-10 Dataset, VGG-16.

Abstract

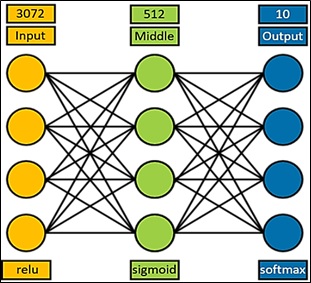

Artificial Neural Networks (ANN) are becoming a core domain of Artificial Intelligence. Machine learning in general, and deep learning in particular, have gained popularity in problem-solving by virtue of the Multi-Layer Perceptron (MLP), Convolutional Neural Networks (CNN), and the transfer learning approach. Transfer learning has become a powerful and successful technique for a variety of computer vision and image analysis applications because it reuses well-known, proven architectures together with their trained weights. Identifying the best architecture and classifier to pair with a pre-trained network is one of the challenging steps in achieving high accuracy in image analysis tasks. This paper investigates the performance of MLP, CNN, and transfer learning with VGG-16 by tuning hyperparameters and the classifier architecture. The experiments and critical analysis show that the MLP and CNN architectures achieved validation accuracies of about 55% and 80%, respectively, on the test data. Further experiments using the VGG-16 architecture with an MLP as the classifier achieved more than 93% accuracy for image classification on the CIFAR-10 dataset, using standard-specification hardware.
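To make the "VGG-16 with an MLP classifier" setup concrete, the following minimal sketch counts parameters for a reused VGG-16 convolutional base and for a small MLP head placed on top of it, using 32×32×3 CIFAR-10 images as input. It is an illustration under stated assumptions, not the configuration used in the paper: the hidden width of 256 and the single hidden layer are hypothetical, the abstract does not say whether images were resized before entering VGG-16, and the names `vgg16_base_summary` and `mlp_params` are introduced here only for the example.

```python
# Standard VGG-16 convolutional configuration: integers are the output
# channels of 3x3 "same"-padded convolutions, "M" is a 2x2 max-pool (stride 2).
VGG16_CONV_CFG = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
                  512, 512, 512, "M", 512, 512, 512, "M"]


def vgg16_base_summary(height=32, width=32, channels=3):
    """Return (output_shape, parameter_count) of the VGG-16 conv base."""
    params = 0
    for layer in VGG16_CONV_CFG:
        if layer == "M":
            # Each max-pool halves the spatial resolution.
            height, width = height // 2, width // 2
        else:
            # 3x3 kernel weights plus one bias per output channel.
            params += 3 * 3 * channels * layer + layer
            channels = layer
    return (height, width, channels), params


def mlp_params(layer_sizes):
    """Parameter count of a fully connected network with the given widths."""
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


HIDDEN_UNITS = 256  # hypothetical value; not stated in the abstract
NUM_CLASSES = 10    # CIFAR-10 has ten classes

feature_shape, reused_params = vgg16_base_summary()
n_features = feature_shape[0] * feature_shape[1] * feature_shape[2]

# MLP head trained on top of the VGG-16 features.
head_params = mlp_params([n_features, HIDDEN_UNITS, NUM_CLASSES])
# For comparison: the same MLP applied directly to flattened raw pixels.
raw_pixel_mlp_params = mlp_params([32 * 32 * 3, HIDDEN_UNITS, NUM_CLASSES])

print("VGG-16 feature map for a 32x32x3 input:", feature_shape)
print("Parameters reused from the pre-trained base:", reused_params)
print("Parameters in the MLP head:", head_params)
print("Parameters in a raw-pixel MLP of the same width:", raw_pixel_mlp_params)
```

Under these assumptions, the classifier that is trained from scratch is small compared with the convolutional base whose weights are reused, which is the practical appeal of the transfer learning approach described above.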


License

Copyright (c) 2024 50sea

This work is licensed under a Creative Commons Attribution 4.0 International License.