Event-Based Vision for Robust SLAM: An Evaluation Using Hyper E2VID Event Reconstruction Algorithm

Keywords:

SLAM, Event Camera, Neuromorphic, Feature Detection, Computer Vision.

Abstract

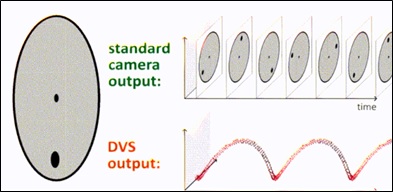

This paper examines the limitations of conventional visual sensors in challenging environments by integrating event-based cameras with visual SLAM (Simultaneous Localization and Mapping). It presents a comparison between visual-only SLAM run on frames reconstructed with the state-of-the-art HyperE2VID method and SLAM run on conventional camera frames. Conventional cameras suffer from low dynamic range and motion blur; event-based cameras mitigate both through high temporal resolution and robustness in such conditions. The study uses the HyperE2VID algorithm to reconstruct frames from event data. These frames are then processed through the SLAM pipeline and compared with conventional frames. Absolute Pose Error (APE) and feature tracking performance were evaluated for both inputs on three sequences of the event camera dataset: Dynamic-6DoF, Poster-6DoF, and Slider depth.

Experimental results demonstrate that event-based cameras yield higher-quality reconstructions, significantly outperforming conventional cameras, especially in scenarios marked by motion blur and low dynamic range. The Poster-6DoF sequence showed the best overall performance, owing to its information-rich scenes, with results closely matching those of conventional camera-based SLAM. The Slider depth sequence had the lowest APE values, but because it lacks rotational motion it suffered from trajectory drift and scale errors. The Dynamic-6DoF sequence performed worst, with high absolute pose error and trajectory drift. Overall, these findings highlight the substantial improvements that event-based cameras can bring to SLAM systems operating in environments characterized by motion blur and low dynamic range.
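The abstract reports Absolute Pose Error but does not say how it was computed. As an illustration only, the sketch below shows one common definition: the estimated trajectory is aligned to ground truth with a least-squares similarity transform (Umeyama's method), and the RMSE of the remaining position differences is reported. The function names `umeyama_alignment` and `ape_rmse`, and the choice to align scale (often done for monocular SLAM, whose scale is unobservable), are assumptions rather than details taken from the paper.

```python
import numpy as np


def umeyama_alignment(est, gt, with_scale=True):
    """Least-squares similarity transform (s, R, t) mapping est onto gt.

    est, gt: (N, 3) arrays of positions that are already time-associated.
    """
    mu_est, mu_gt = est.mean(axis=0), gt.mean(axis=0)
    est_c, gt_c = est - mu_est, gt - mu_gt
    n = est.shape[0]

    # Cross-covariance between the centred ground truth and estimate.
    cov = gt_c.T @ est_c / n
    U, D, Vt = np.linalg.svd(cov)

    # Flip the last axis if needed so that R is a proper rotation.
    S = np.eye(3)
    if np.linalg.det(U) * np.linalg.det(Vt) < 0:
        S[2, 2] = -1.0
    R = U @ S @ Vt

    var_est = (est_c ** 2).sum() / n
    s = float(np.trace(np.diag(D) @ S) / var_est) if with_scale else 1.0
    t = mu_gt - s * (R @ mu_est)
    return s, R, t


def ape_rmse(est, gt, with_scale=True):
    """RMSE of the translational APE after aligning est to gt."""
    s, R, t = umeyama_alignment(est, gt, with_scale)
    aligned = s * (R @ est.T).T + t
    errors = np.linalg.norm(aligned - gt, axis=1)
    return float(np.sqrt(np.mean(errors ** 2)))
```

Setting `with_scale=False` restricts the alignment to a rigid transform, so any scale error in the estimate remains visible in the APE instead of being absorbed by the alignment.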

License

Copyright (c) 2024 50sea

This work is licensed under a Creative Commons Attribution 4.0 International License.