Predictive Analysis and Email Categorization Using Large Language Models

Keywords:

BERT, DistilBERT, GPT-2, Large Language Based Models, Transformers, XLNet

Abstract

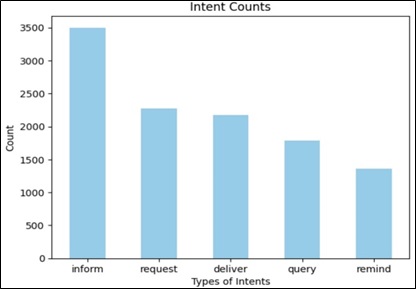

With the global rise in internet users, email has become an integral part of daily life. Categorizing emails by intent can save considerable time and boost productivity. Previous research has explored machine learning models, including neural networks, for intent classification, but Large Language Models (LLMs) have yet to be applied to intent-based email categorization. In this study, a subset of 11,000 emails from the publicly available Enron dataset was used to train several LLMs for intent classification: Bidirectional Encoder Representations from Transformers (BERT), DistilBERT, XLNet, and Generative Pre-trained Transformer 2 (GPT-2). Among these models, DistilBERT achieved the highest accuracy at 82%, followed closely by BERT at 81%. These results demonstrate the potential of LLMs to identify the intent of emails accurately, providing a valuable tool for email classification and management.
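The accuracy figures above are the fraction of held-out emails whose predicted intent matches the annotated intent. As a minimal sketch of that comparison, the snippet below scores two classifiers on a toy test set. The intent labels (`request`, `inform`, `schedule`), the names `model_a` and `model_b`, and all predictions are hypothetical placeholders for illustration; they are not the categories or results of the study.

```python
from typing import Sequence


def accuracy(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Fraction of emails whose predicted intent matches the gold intent."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    if not y_true:
        raise ValueError("cannot compute accuracy on an empty set")
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


# Hypothetical gold intents for five held-out emails (labels are illustrative).
gold = ["request", "inform", "request", "schedule", "inform"]

# Hypothetical predictions from two classifiers; not results from the study.
predictions = {
    "model_a": ["request", "inform", "inform", "schedule", "inform"],
    "model_b": ["request", "request", "inform", "schedule", "inform"],
}

# Score every model on the same test set and pick the most accurate one.
scores = {name: accuracy(gold, pred) for name, pred in predictions.items()}
best = max(scores, key=scores.get)

for name, score in sorted(scores.items()):
    print(f"{name}: {score:.0%}")
print(f"best: {best}")
```

Accuracy alone can mislead when intent classes are imbalanced, so per-class precision and recall would normally be reported alongside it; whether the study does so is not stated in the abstract.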

License

Copyright (c) 2024 50sea

This work is licensed under a Creative Commons Attribution 4.0 International License.