Exploring cGANs for Urdu Alphabets and Numerical System Generation

Keywords:

Generative Adversarial Network, Structural Similarity Index, Fréchet Inception Distance, Peak Signal-to-Noise Ratio, Optical Character Recognition

Abstract

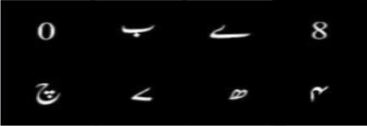

Urdu ligatures play a crucial role in text representation and processing, especially in Urdu language applications. Although handwritten characters have been studied extensively in many languages, raster-based generated images of Urdu characters remain largely unexplored. This paper presents a generative model designed to produce high-quality samples that closely resemble, yet are not copies of, the images in existing datasets. Using Generative Adversarial Networks (GANs), the model is trained on a dataset comprising 40 classes of Urdu alphabets and 20 classes of numerals (both modern and Arabic-style), with each class containing 1,000 augmented images to capture variations. The generator network creates synthetic Urdu character samples conditioned on the class label, while the discriminator network evaluates how closely they resemble real samples. The model’s effectiveness is assessed using the Peak Signal-to-Noise Ratio (PSNR), Structural Similarity Index (SSIM), and Fréchet Inception Distance (FID). The results indicate that the proposed GAN-based approach achieves high fidelity and structural accuracy, making it valuable for applications in text digitization and Optical Character Recognition (OCR).
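As an illustration of one of the metrics named above, the following is a minimal sketch of PSNR for a pair of grayscale images, using the standard definition PSNR = 10 · log10(MAX² / MSE). It is not the authors' evaluation code: the function name `psnr`, the nested-list image representation, and the 8-bit peak value of 255 are assumptions made for this example.

```python
import math


def psnr(reference, generated, max_value=255.0):
    """Return the PSNR in dB between two equally sized grayscale images.

    Images are given as lists of rows of pixel intensities (an assumption
    for this sketch; real pipelines usually use array types).
    """
    if len(reference) != len(generated):
        raise ValueError("images must have the same number of rows")

    squared_error = 0.0
    pixel_count = 0
    for ref_row, gen_row in zip(reference, generated):
        if len(ref_row) != len(gen_row):
            raise ValueError("rows must have the same length")
        for ref_pixel, gen_pixel in zip(ref_row, gen_row):
            squared_error += (ref_pixel - gen_pixel) ** 2
            pixel_count += 1

    if pixel_count == 0:
        raise ValueError("images must contain at least one pixel")

    mse = squared_error / pixel_count
    if mse == 0:
        # Identical images: the ratio is unbounded.
        return math.inf
    return 10.0 * math.log10(max_value ** 2 / mse)


# Toy 2x2 example: one pixel differs by 10 intensity levels.
real_image = [[0, 255], [255, 0]]
fake_image = [[0, 255], [255, 10]]
score = psnr(real_image, fake_image)
```

A higher PSNR means the generated image is numerically closer to the reference. Because PSNR is a per-pixel measure, it is typically reported alongside SSIM and FID, which capture structural and distribution-level similarity respectively.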

License

Copyright (c) 2025 50sea

This work is licensed under a Creative Commons Attribution 4.0 International License.