Efficient Region-Based Video Text Extraction Using Advanced Detection and Recognition Models

Keywords:

Optical Character Recognition, Scene Text Detection, Scene Text Recognition, Video Analysis, Deep Learning in Linguistics

Abstract

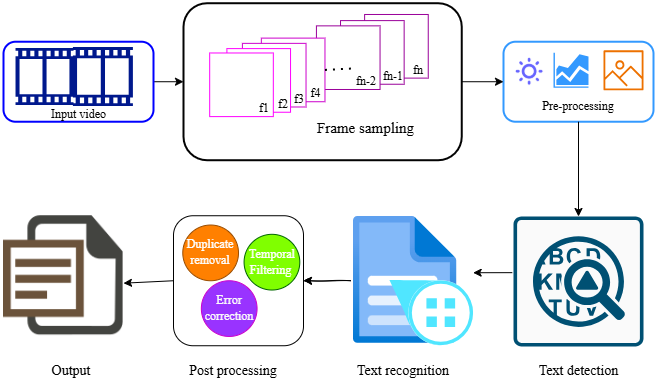

This paper presents an automated pipeline for extracting text from video frames that restricts recognition to text-rich regions identified by scene text detection. Traditional techniques apply OCR to entire frames, which wastes computation and raises error rates; our approach processes only the detected textual areas, improving both speed and accuracy. The system combines preprocessing routines, scene text detectors (CRAFT, DBNet), and recognition engines (CRNN and transformer-based models) within a unified framework. Extensive testing on datasets such as ICDAR 2015, ICDAR 2017 MLT, and COCO-Text demonstrates consistent gains in F-score and word recognition rate, significantly outperforming baseline methods. Detailed error analysis, ablation studies, and runtime evaluations give further insight into the strengths and limitations of the proposed method. The pipeline is particularly useful for tasks such as video indexing, semantic retrieval, and real-time multimedia analysis.
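The region-based idea can be illustrated with a minimal sketch. It is not the authors' implementation: the `detect` and `recognize` callables are hypothetical stand-ins for a text detector and a recognition engine, and the names `crop`, `extract_text`, and `processed_fraction` are introduced here only for illustration. A frame is assumed to be a grayscale image stored as a list of pixel rows, and boxes are assumed to be axis-aligned.

```python
from typing import Callable, List, Tuple

Box = Tuple[int, int, int, int]  # (x0, y0, x1, y1); x1 and y1 are exclusive
Frame = List[List[int]]          # grayscale frame as rows of pixel values


def crop(frame: Frame, box: Box) -> Frame:
    """Return the sub-image covered by `box`, clamped to the frame bounds."""
    height = len(frame)
    width = len(frame[0]) if height else 0
    x0, y0, x1, y1 = box
    x0, y0 = max(0, x0), max(0, y0)
    x1, y1 = min(width, x1), min(height, y1)
    return [row[x0:x1] for row in frame[y0:y1]]


def extract_text(
    frame: Frame,
    detect: Callable[[Frame], List[Box]],  # hypothetical detector stand-in
    recognize: Callable[[Frame], object],  # hypothetical recognizer stand-in
) -> List[Tuple[Box, object]]:
    """Run recognition only on detected regions, not on the whole frame."""
    results = []
    for box in detect(frame):
        patch = crop(frame, box)
        if patch and patch[0]:  # skip crops that fall outside the frame
            results.append((box, recognize(patch)))
    return results


def processed_fraction(frame: Frame, boxes: List[Box]) -> float:
    """Fraction of frame pixels passed to the recognizer.

    Overlapping boxes are counted once per box.
    """
    total = len(frame) * len(frame[0])
    patches = (crop(frame, box) for box in boxes)
    cropped = sum(len(p) * len(p[0]) for p in patches if p and p[0])
    return cropped / total
```

With two 32x8 boxes on a 64x48 frame, `processed_fraction` reports that one sixth of the pixels reach the recognizer, which is the kind of saving the region-based approach relies on. Real detectors often return rotated or polygonal regions, which this axis-aligned sketch does not handle.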


License

Copyright (c) 2025 50SEA

This work is licensed under a Creative Commons Attribution 4.0 International License.