Object Detection in High Resolution Aerial Imagery Using Detection Transformer

Keywords:

Object Detection, Aerial Imagery, Detection Transformer (DETR), CNN, Hybrid Model, Remote Sensing, Deep Learning, Autonomous Surveillance.

Abstract

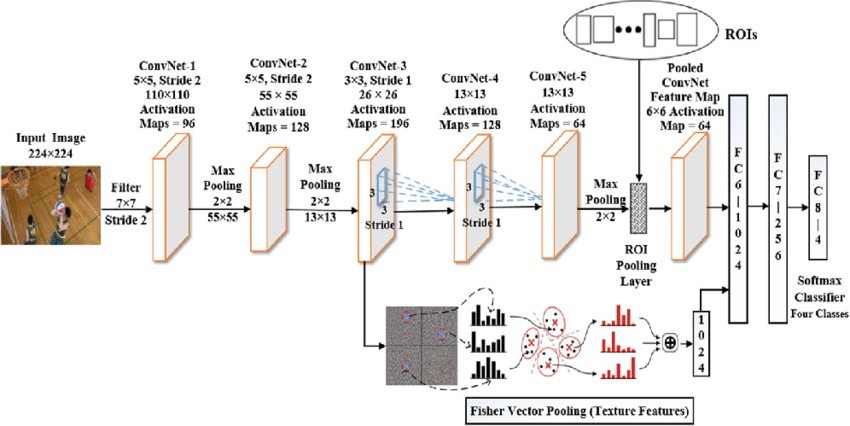

Object detection in high-resolution aerial imagery has received considerable attention because of its applications in geosciences, urban planning, disaster management, and surveillance. However, challenges remain, including scale variation, cluttered backgrounds, occlusions, and a scarcity of annotated datasets. Traditional CNNs have shown great promise, yet they struggle to capture long-range dependencies and complex spatial relationships. This paper evaluates the Detection Transformer (DETR) for object detection in aerial images. Unlike CNN-based detectors, which depend on region proposal networks and anchor-based methods, DETR uses a fully end-to-end transformer architecture with direct set prediction, which removes the need for hand-designed priors. In extensive experiments on datasets such as Airbus Aircraft, Rare Planes, and DOTA, DETR achieves mAP scores as much as 18% higher than ResNet-based architectures. Furthermore, we propose a hybrid DETR-CNN model that combines the feature-extraction strength of CNNs with the global attention mechanisms of DETR, improving detection accuracy for both Horizontal and Oriented Bounding Box detection. Our results show that transformer-based models are highly effective for aerial object detection, which bodes well for remote sensing, autonomous surveillance, and disaster response applications. This study presents an end-to-end DETR-based method for object detection in aerial imagery, demonstrating improvements in accuracy and simplicity over traditional methods.
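To make the idea of direct set prediction more concrete, the following is a minimal sketch of the one-to-one matching between predictions and ground-truth objects that set-based detectors such as DETR rely on. It is an illustration under stated assumptions, not the implementation used in this study: the cost here is only a class-probability term plus an L1 box distance, the `box_weight` value and the toy data are hypothetical, and the optimal assignment is found by brute force over permutations rather than with the Hungarian algorithm, which gives the same optimum on small inputs.

```python
from itertools import permutations

def l1_distance(box_a, box_b):
    """Sum of absolute differences between two (cx, cy, w, h) boxes."""
    return sum(abs(a - b) for a, b in zip(box_a, box_b))

def match_cost(pred, target, box_weight=5.0):
    """Cost of assigning one prediction to one ground-truth object.

    box_weight is an illustrative value, not taken from the paper.
    """
    class_probs, pred_box = pred
    label, target_box = target
    return -class_probs[label] + box_weight * l1_distance(pred_box, target_box)

def match_predictions(preds, targets):
    """Return {prediction_index: target_index} with minimal total cost.

    Assumes len(preds) >= len(targets). Brute force, for illustration only.
    """
    best_cost, best_assignment = float("inf"), {}
    for pred_indices in permutations(range(len(preds)), len(targets)):
        cost = sum(
            match_cost(preds[p], targets[t]) for t, p in enumerate(pred_indices)
        )
        if cost < best_cost:
            best_cost = cost
            best_assignment = {p: t for t, p in enumerate(pred_indices)}
    return best_assignment

# Hypothetical toy data: (class probabilities, normalized box) per prediction.
preds = [
    ([0.1, 0.9], (0.50, 0.50, 0.20, 0.20)),
    ([0.8, 0.2], (0.10, 0.10, 0.05, 0.05)),
    ([0.5, 0.5], (0.90, 0.90, 0.10, 0.10)),
]
# Ground truth: (class label, normalized box) per object.
targets = [
    (0, (0.12, 0.10, 0.05, 0.05)),
    (1, (0.50, 0.52, 0.20, 0.20)),
]

assignment = match_predictions(preds, targets)
# Predictions left without a target would be trained to predict "no object".
unmatched = [i for i in range(len(preds)) if i not in assignment]
print(assignment, unmatched)
```

Because each ground-truth object is matched to exactly one prediction, duplicate detections are penalized during training, which is why this style of detector does not need anchors or a separate suppression step.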


License

Copyright (c) 2025 50sea

This work is licensed under a Creative Commons Attribution 4.0 International License.