Adaptive Student Assessment Method for Teaching Programming Course

Keywords:

Assessment Systems, Programming Fundamentals, Automatic Question Generation, Interactive Learning, Skill-based Learning

Abstract

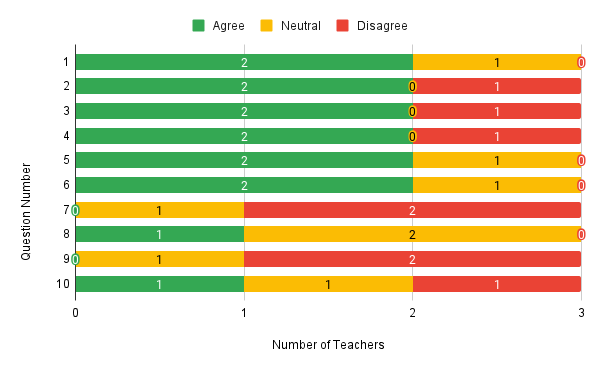

Computer programming is a core component of computer science education and is widely recognized as a vital skill for aspiring professionals. Repeated coding assessments help students improve their programming abilities, but creating and evaluating these assessments manually is time-consuming and challenging for instructors. To address this, we developed an Adaptive Student Assessment System (ASAS) that automatically generates subjective programming questions aligned with Course Learning Objectives (CLOs) and assists in evaluating student responses. The system was evaluated in a controlled study involving two groups: a test group and a control group. The test group consistently outperformed the control group across cognitive assessments, with an overall performance improvement of 13.5%. Affective feedback collected through a post-term survey showed a 48.20% higher agreement rate in the test group regarding motivation, clarity, and satisfaction with the assessment process. Teacher evaluations further confirmed the system's effectiveness, with improvements of 23.33% in assessment creation, 26.67% in assessment administration, and 43.33% in result compilation compared to traditional methods. Teachers reported reduced workload, increased efficiency, and a positive attitude toward long-term adoption of the system. These findings indicate that ASAS not only enhances student engagement and academic performance but also improves instructional efficiency, making it a scalable and effective solution for programming education.

License

Copyright (c) 2025 50sea

This work is licensed under a Creative Commons Attribution 4.0 International License.