Action Recognition of Human Skeletal Data Using CNN and LSTM

Keywords:

Action Recognition, Skeletal Data, Convolutional Neural Network, Long Short-Term Memory, Machine Learning

Abstract

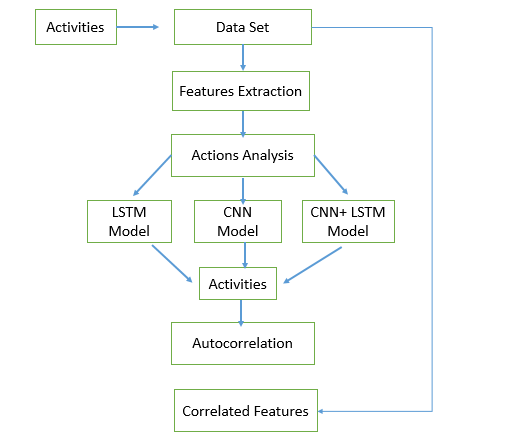

Human action recognition identifies the type of action a person is performing. Many techniques have been developed for this task, such as GRN, KNN, SVM, depth maps, and two-stream maps. We used three methods in our research: a 2D CNN model, an LSTM model, and a combined CNN+LSTM model. ReLU serves as the activation function for the input and hidden layers, and softmax as the activation function for the output layer. After training for a number of epochs, we report the activity recognition results. Our dataset is WISDM, which covers six activities: Running, Walking, Sitting, Standing, Downstairs, and Upstairs. Once training is complete, we describe the recognition accuracy and loss of each model. Tuning the hyperparameters increased the accuracy of our LSTM model by 1.5%, to 98.5%, with a reduced loss of 0.09. The 2D CNN model achieved an accuracy of 92% with a loss of 0.24, while the CNN+LSTM model achieved an accuracy of 90% with a loss of 0.46. Together, these results are a notable step toward recognizing human actions. We then introduced autocorrelation for our models. Finally, we present the features of our models and their correlations with one another.
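
The abstract states that ReLU is used in the input and hidden layers and softmax in the output layer, with accuracy and loss reported after training. The following is a minimal, framework-free Python sketch of those pieces, not the authors' implementation. It assumes a categorical cross-entropy loss (the abstract does not name the loss function), and the helper names `dense`, `relu`, `softmax`, and `cross_entropy`, as well as all weights, are hypothetical.

```python
import math

# The six WISDM activities as listed in the abstract (label order is an assumption).
CLASSES = ["Running", "Walking", "Sitting", "Standing", "Downstairs", "Upstairs"]


def dense(x, weights, bias):
    """Fully connected layer: one output per row of `weights`, plus a bias term."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def relu(xs):
    """ReLU applied element-wise, max(0, x); used for input and hidden layers."""
    return [max(0.0, x) for x in xs]


def softmax(logits):
    """Softmax over output-layer scores, giving class probabilities that sum to 1."""
    m = max(logits)  # subtract the max so math.exp cannot overflow
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(probs, true_index):
    """Categorical cross-entropy for one example (assumed loss, not stated in the source)."""
    return -math.log(probs[true_index])


if __name__ == "__main__":
    # Hypothetical 3-feature input -> hidden layer (ReLU) -> output layer (softmax).
    x = [0.5, -1.0, 2.0]
    hidden = relu(dense(x, [[1.0, 0.0, 0.5], [-1.0, 1.0, 0.0]], [0.0, 0.0]))
    print(hidden)  # [1.5, 0.0]
    out_w = [[1.0, 0.0], [0.5, 0.0], [0.0, 0.0], [0.0, 0.0], [0.0, 0.0], [0.0, 0.0]]
    probs = softmax(dense(hidden, out_w, [0.0] * 6))
    print(CLASSES[probs.index(max(probs))])  # Running
    print(cross_entropy(probs, 0))  # loss if the true label is "Running"
```

Subtracting the largest score before exponentiating leaves the softmax output unchanged but keeps `math.exp` from overflowing on large logits.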

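The abstract mentions introducing autocorrelation for the models but does not say which sequence is analysed. As a hedged illustration only, the sketch below computes the standard sample autocorrelation function of a one-dimensional sequence, such as one accelerometer axis or a per-epoch loss curve. The function name `autocorrelation`, the choice of the biased estimator, and the example signal are assumptions.

```python
def autocorrelation(xs, max_lag):
    """Sample autocorrelation r_k for lags k = 0..max_lag (biased estimator).

    r_k = sum_{t=0}^{n-k-1} (x_t - mean)(x_{t+k} - mean) / sum_t (x_t - mean)^2,
    so r_0 is always 1 and every r_k lies in [-1, 1].
    """
    n = len(xs)
    if not 0 <= max_lag < n:
        raise ValueError("max_lag must satisfy 0 <= max_lag < len(xs)")
    mean = sum(xs) / n
    dev = [x - mean for x in xs]
    denom = sum(d * d for d in dev)
    if denom == 0:
        raise ValueError("autocorrelation is undefined for a constant sequence")
    return [
        sum(dev[t] * dev[t + k] for t in range(n - k)) / denom
        for k in range(max_lag + 1)
    ]


if __name__ == "__main__":
    # Hypothetical periodic signal with period 4, e.g. a repeated gait cycle.
    signal = [0, 1, 0, -1] * 5
    for lag, r in enumerate(autocorrelation(signal, 4)):
        print(lag, round(r, 2))
```

For this period-4 signal the function gives r_2 = -0.9 and r_4 = 0.8; the strong positive value at lag 4 reflects the repeating cycle.
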
License

Copyright (c) 2023 50SEA

This work is licensed under a Creative Commons Attribution 4.0 International License.