Towards End-to-End Speech Recognition System for Pashto Language Using Transformer Model

Keywords:

Hidden Markov Models (HMMs), Gaussian Mixture Models (GMMs), End-to-End (E2E), Character Error Rate (CER)

Abstract

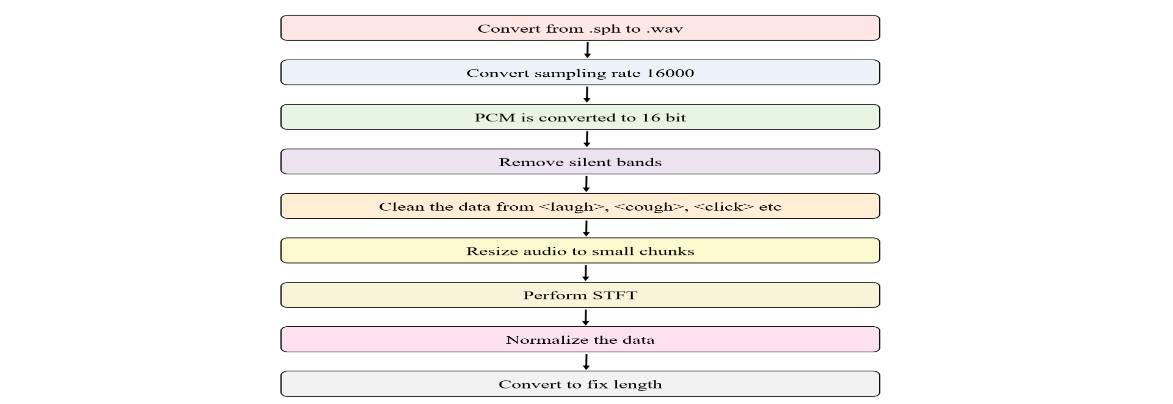

Conventional speech recognition systems built on Hidden Markov Models (HMMs) and Gaussian Mixture Models (GMMs) are difficult to set up and inefficient. This paper adopts the Transformer model for Pashto continuous speech recognition, yielding an End-to-End (E2E) system that maps acoustic signals directly to label sequences and thereby simplifies implementation. The Transformer is chosen for its parallelization and self-attention mechanisms and for its ability to cope with the limited data available for Pashto. The objective is to develop an accurate Pashto speech recognition system. Using 200 hours of conversational data, the study achieves a Word Error Rate (WER) of up to 51% and a Character Error Rate (CER) of up to 29%. Fine-tuning the model's parameters and increasing the dataset size led to significant improvements in these error rates. The results indicate that the Transformer is effective in limited-data scenarios and is a robust choice for Pashto speech recognition. The study fills a gap in automatic speech recognition (ASR) research for the Pashto language and contributes to the advancement of speech recognition technology for under-resourced languages. It also highlights the potential for further improvement with more training data and underscores the importance of fine-tuning and dataset augmentation in reducing error rates.
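The abstract reports results as WER and CER but does not say how they were scored. As a minimal sketch, assuming the standard definition (Levenshtein edit distance between reference and hypothesis, divided by the reference length), the two metrics could be computed as follows. The function names, the whitespace tokenization, and the choice to ignore spaces in CER are illustrative assumptions, not details taken from the study.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance between two sequences.

    Counts the minimum number of substitutions, insertions,
    and deletions needed to turn `ref` into `hyp`.
    """
    # prev[j] holds the distance between the first i-1 items of ref
    # and the first j items of hyp.
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(
                prev[j] + 1,         # deletion
                curr[j - 1] + 1,     # insertion
                prev[j - 1] + cost,  # substitution or match
            ))
        prev = curr
    return prev[-1]


def wer(reference, hypothesis):
    """Word Error Rate: word-level edits divided by reference word count."""
    ref_words = reference.split()  # assumption: whitespace tokenization
    if not ref_words:
        raise ValueError("reference must contain at least one word")
    return edit_distance(ref_words, hypothesis.split()) / len(ref_words)


def cer(reference, hypothesis):
    """Character Error Rate: character-level edits divided by reference length."""
    # Assumption: spaces are ignored; some toolkits count them instead.
    ref_chars = list(reference.replace(" ", ""))
    hyp_chars = list(hypothesis.replace(" ", ""))
    if not ref_chars:
        raise ValueError("reference must contain at least one character")
    return edit_distance(ref_chars, hyp_chars) / len(ref_chars)
```

Because insertions count as errors, both rates can exceed 100% when the hypothesis is much longer than the reference. Corpus-level figures are usually obtained by summing edits and reference lengths over all utterances rather than averaging per-utterance rates.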


License

Copyright (c) 2024 50SEA

This work is licensed under a Creative Commons Attribution 4.0 International License.