On Evaluation of discrete RL agents for Traffic Scheduling and Trajectory Optimization of UAV-based IoT Network with multiple RIS unit

Abstract

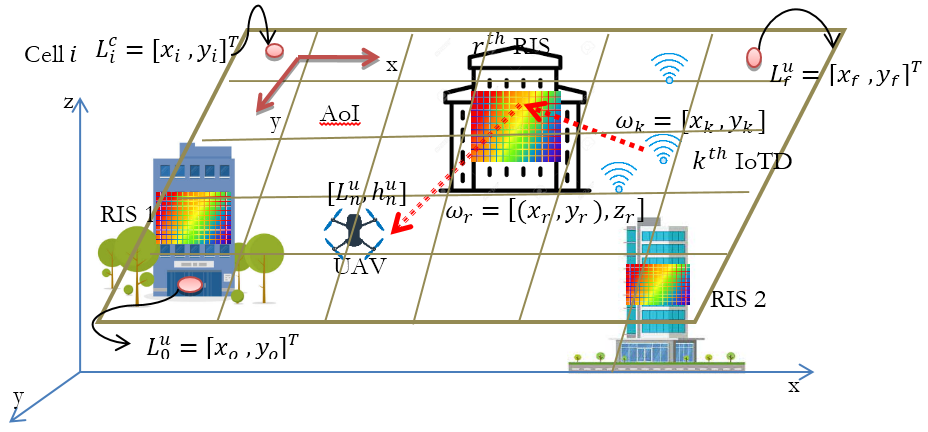

Unmanned Aerial Vehicles (UAVs) have proven effective for collecting data from widely dispersed Internet of Things Devices (IoTDs). However, obstacles can block the Line of Sight (LoS) link between the UAV and the IoTDs. To address this issue, Reconfigurable Intelligent Surfaces (RISs) have been used, especially in urban areas, to extend communication beyond obstacles, enabling efficient data transfer where no LoS link exists. The goal of this work is to jointly optimize the trajectory and minimize the energy consumption of the UAVs while satisfying the data throughput requirement of each IoTD. Because this is a mixed-integer non-convex problem, it is solved with Reinforcement Learning (RL), a class of Machine Learning (ML) that has proven computationally faster than conventional techniques for such problems. In this paper, three discrete RL agents, namely Double Deep Q Network (DDQN), Proximal Policy Optimization (PPO), and PPO with Recurrent Neural Network (PPOwRNN), are tested with multiple RISs to enhance data transfer and trajectory optimization in an Internet of Things (IoT) network. The results show that DDQN with multiple RISs is more efficient in saving communication-related energy, while a single-RIS system with the PPO agent yields a greater reduction in the UAV’s propulsion energy consumption than the other agents.
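
The abstract names DDQN as one of the discrete agents but does not describe its update rule. As a minimal sketch of the generic Double DQN bootstrap target (not the authors' simulation code), the online network selects the next action and the target network evaluates it. The function name, argument names, and array shapes below are assumptions made for illustration only.

```python
import numpy as np

def double_dqn_targets(rewards, dones, q_online_next, q_target_next, gamma=0.99):
    """Generic Double DQN targets for a batch of transitions.

    rewards, dones: arrays of shape (batch,); dones is 1.0 for terminal steps.
    q_online_next, q_target_next: arrays of shape (batch, n_actions) holding
    the Q-values of the next state under the online and target networks.
    All names here are hypothetical and chosen for this illustration.
    """
    # Action selection uses the online network ...
    best_actions = np.argmax(q_online_next, axis=1)
    # ... while action evaluation uses the target network.
    batch_index = np.arange(len(best_actions))
    next_values = q_target_next[batch_index, best_actions]
    # Terminal transitions do not bootstrap from the next state.
    return rewards + gamma * (1.0 - dones) * next_values
```

Separating action selection from action evaluation is what distinguishes Double DQN from standard DQN, where a single network does both and tends to overestimate action values.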

License

Copyright (c) 2024 50SEA

This work is licensed under a Creative Commons Attribution 4.0 International License.