Beyond CNNs: Encoded Context for Image Inpainting with LSTMs and Pixel CNNs

Keywords: Image Inpainting, GAN, Pixel CNN, LSTM

Abstract

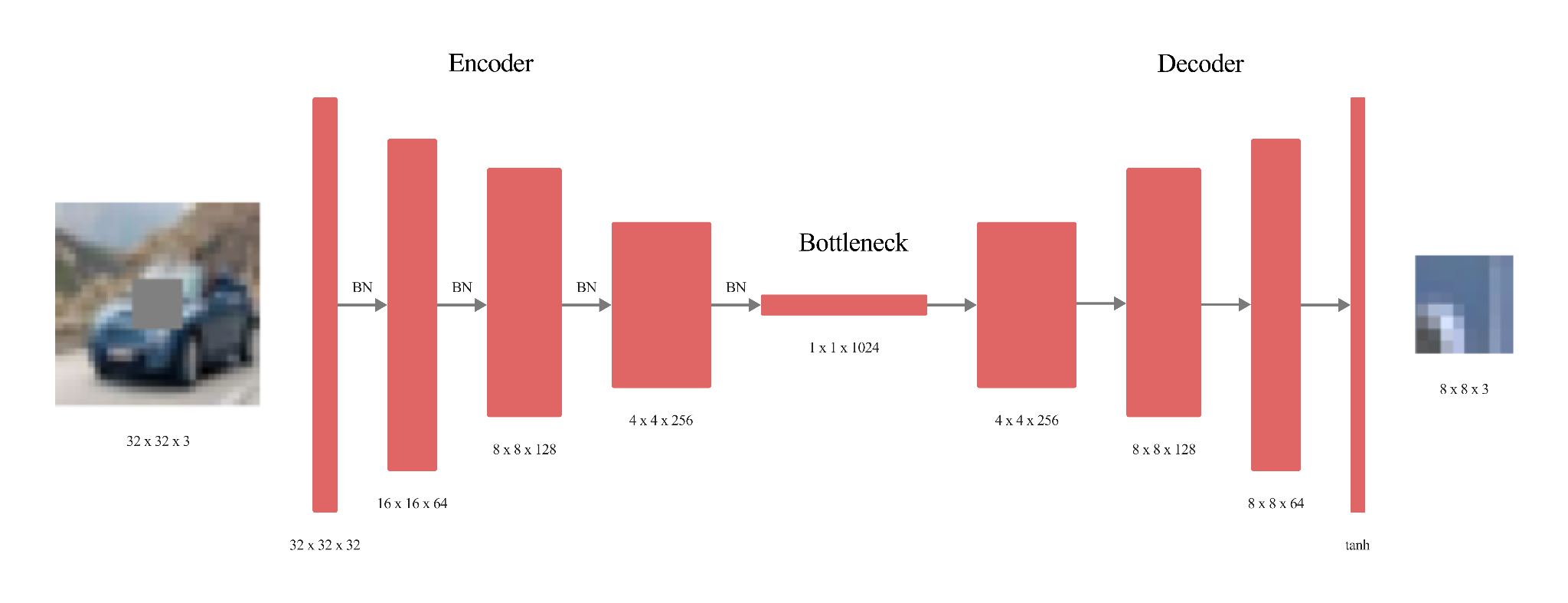

ur paper presents some creative advancements in the image in-painting techniques for small, simple images for example from the CIFAR10 dataset. This study primarily targeted on improving the performance of the context encoders through the utilization of several major training methods on Generative Adversarial Networks (GANs). To achieve this, we upscaled the network Wasserstein GAN (WGAN) and compared the discriminators and encoders with the current state-of-the-art models, alongside standard Convolutional Neural Network (CNN) architectures. Side by side to this, we also explored methods of Latent Variable Models and developed several different models, namely Pixel CNN, Row Long Short Term Memory (LSTM), and Diagonal Bidirectional Long Short-Term Memory (BiLSTM). Moreover, we proposed a model based on the Pixel CNN architectures and developed a faster yet easy approach called Row-wise Flat Pixel LSTM. Our experiments demonstrate that the proposed models generate high-quality images on CIFAR10 while conforming the L2 loss and visual quality measurement.
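The abstract does not say how the L2 loss is computed. As a minimal sketch, assuming a single-channel 32x32 image (the CIFAR10 resolution), a centered square hole, and mean squared error taken only over the hole, the measure could look like the following. The function names `center_mask` and `masked_l2`, the 16x16 hole size, and the pixel values are illustrative assumptions, not the authors' implementation.

```python
def center_mask(size, hole):
    """Return a size x size grid: 1 inside a centered hole x hole square, else 0."""
    start = (size - hole) // 2
    end = start + hole
    return [
        [1 if start <= r < end and start <= c < end else 0 for c in range(size)]
        for r in range(size)
    ]


def masked_l2(pred, target, mask):
    """Mean squared error over the pixels where mask == 1 (single channel)."""
    total, count = 0.0, 0
    for p_row, t_row, m_row in zip(pred, target, mask):
        for p, t, m in zip(p_row, t_row, m_row):
            if m:
                total += (p - t) ** 2
                count += 1
    return total / count if count else 0.0


# Hypothetical example: a flat gray target and a prediction that is
# slightly too bright inside the hole and exact everywhere else.
size, hole = 32, 16  # assumed image and hole sizes
mask = center_mask(size, hole)
target = [[0.5] * size for _ in range(size)]
pred = [[0.7 if m else 0.5 for m in row] for row in mask]
loss = masked_l2(pred, target, mask)
```

Restricting the error to the hole keeps the score from being diluted by the known context pixels, which the model only has to copy.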


License

Copyright (c) 2024 50SEA

This work is licensed under a Creative Commons Attribution 4.0 International License.