AI Vision for Health Care: Virtual Keyboard and Mouse Empowering Partially Disabled Patients

Keywords:

Virtual Keyboard, Virtual Mouse, YOLOv8, PyAutoGUI, Gesture Recognition, Computer Vision, CNN, OpenCV, Tkinter

Abstract

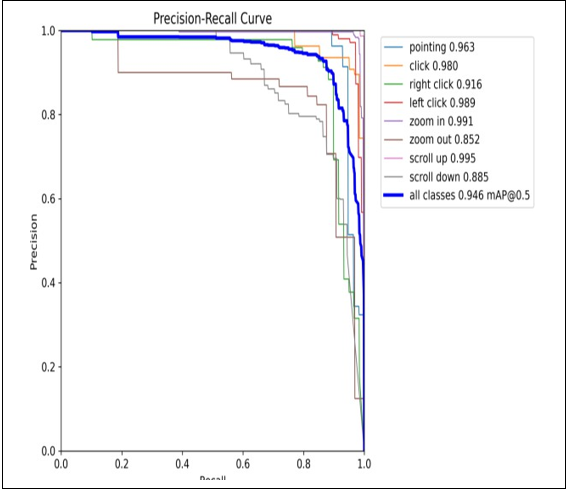

This paper introduces a machine-learning-based virtual keyboard and mouse system designed to assist individuals with physical disabilities. The system recognizes hand gestures using computer vision and translates them into keyboard input and mouse control. Using Convolutional Neural Networks (CNNs) and the YOLOv8 model, it runs in real time with an average accuracy of 92%, enabling touchless interaction with computers. It supports essential tasks such as scrolling, clicking, and zooming through simple gestures. Because it relies only on widely available hardware such as a standard webcam, the system is accessible, affordable, and easy to deploy, and the framework is adaptable to different environments. For users with motor impairments, it offers a complete, touchless alternative to traditional input devices.
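The step from a recognized gesture to a keyboard or mouse action can be illustrated with a small sketch. This is not the paper's implementation: the gesture labels (`pinch`, `swipe_up`, and so on), the confidence threshold, the frame-stability rule, and the names `RecordingBackend` and `GestureDispatcher` are all assumptions made for illustration. Detection itself (YOLOv8 or a CNN on webcam frames) is out of scope here; the sketch only assumes that some detector yields one label and one confidence score per frame.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple


@dataclass
class RecordingBackend:
    """Hypothetical stand-in for an input backend; it only records calls.

    A real backend could expose the same three methods and forward them
    to an automation library such as PyAutoGUI.
    """
    events: List[Tuple[str, object]] = field(default_factory=list)

    def click(self) -> None:
        self.events.append(("click", None))

    def scroll(self, amount: int) -> None:
        self.events.append(("scroll", amount))

    def zoom(self, direction: str) -> None:
        self.events.append(("zoom", direction))


class GestureDispatcher:
    """Maps per-frame gesture labels to actions, firing once per held gesture."""

    def __init__(self, backend: RecordingBackend,
                 min_confidence: float = 0.6, stable_frames: int = 3) -> None:
        self.min_confidence = min_confidence  # assumed threshold
        self.stable_frames = stable_frames    # assumed debounce length
        # Assumed gesture vocabulary; the paper does not list its labels.
        self._actions: Dict[str, Callable[[], None]] = {
            "pinch": backend.click,
            "swipe_up": lambda: backend.scroll(3),
            "swipe_down": lambda: backend.scroll(-3),
            "spread": lambda: backend.zoom("in"),
            "squeeze": lambda: backend.zoom("out"),
        }
        self._last_label: Optional[str] = None
        self._streak = 0

    def update(self, label: Optional[str], confidence: float) -> bool:
        """Feed one detection; return True if an action fired on this frame."""
        usable = (label in self._actions) and confidence >= self.min_confidence
        if not usable:
            # Missing, unknown, or low-confidence detections reset the streak.
            self._last_label, self._streak = None, 0
            return False
        if label == self._last_label:
            self._streak += 1
        else:
            self._last_label, self._streak = label, 1
        # Fire only on the frame where the streak first reaches the threshold,
        # so holding a gesture does not repeat the action.
        if self._streak == self.stable_frames:
            self._actions[label]()
            return True
        return False


if __name__ == "__main__":
    backend = RecordingBackend()
    dispatcher = GestureDispatcher(backend)
    for frame_label in ["pinch"] * 5 + [None] + ["swipe_up"] * 3:
        dispatcher.update(frame_label, 0.9)
    print(backend.events)
```

Requiring the same label on several consecutive frames is one simple way to keep a single noisy detection from triggering an unintended click, which matters for users who cannot easily undo an action. Keeping the backend separate from the dispatcher also lets the mapping be exercised without a webcam or a display.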

License

Copyright (c) 2025 50sea

This work is licensed under a Creative Commons Attribution 4.0 International License.