Voice Cloning and Synthesis Using Deep Learning: A Comprehensive Study

Keywords:

Voice Cloning, Speech Synthesis, Deep Learning, Multilingual Zero-shot Multi-Speaker TTS (XTTS), Speaker Adaptation, Cross-Lingual TTS, Whisper, Llama 8B

Abstract

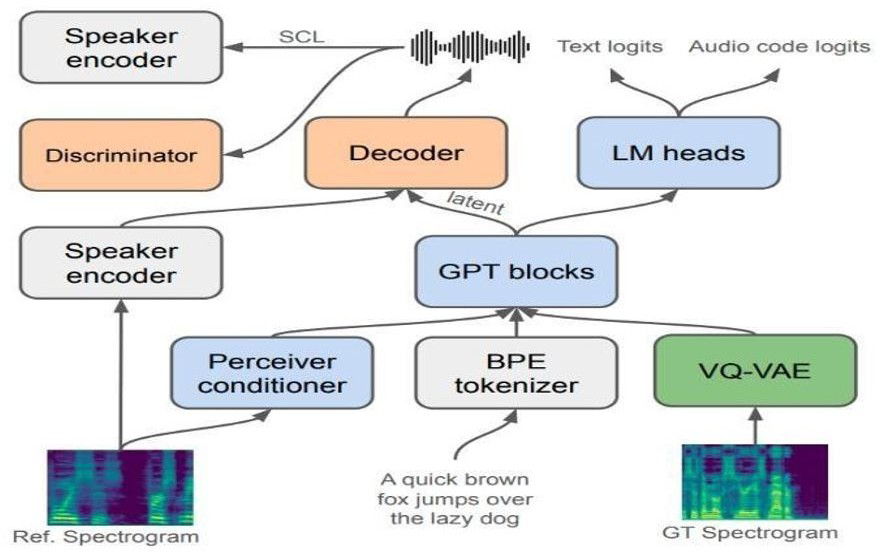

This paper reviews current voice cloning and speech synthesis methods, focusing on how deep learning improves the quality, flexibility, and efficiency of AI-generated speech. We analyze three leading models, XTTS_v2, Whisper, and Llama 8B, in terms of their relevance to virtual assistants, dubbing, and accessibility tools. XTTS_v2 builds on the Tortoise model and improves its voice cloning and TTS capabilities: it supports multilingual, cross-lingual transfer, runs faster at a lower computational cost, and generates synthetic speech that sounds more natural. Whisper is a speech recognition model that transcribes audio waveforms into text, making the content of audio data easier to access. Llama 8B handles user question answering, improving interaction between humans and AI. Related work, including FastSpeech 2 [1], Neural Voice Cloning with a Few Samples [2], and Deep Learning-Based Expressive Speech Synthesis [3], has also contributed to these advances. Together, this progress improves machines' ability to communicate in an emotionally expressive, human-like way and enables more sophisticated speech technology.
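In a virtual-assistant setting, the three models named above can be chained into a speech-to-speech loop: transcribe the user's audio, generate an answer, and speak the answer in a cloned voice. The sketch below illustrates that composition under stated assumptions; it is not the paper's method. It assumes the `openai-whisper`, Hugging Face `transformers`, and Coqui `TTS` packages, and the model id `meta-llama/Meta-Llama-3-8B-Instruct` (the paper does not say which Llama 8B release it uses). `VoicePipeline` and the three factory functions are hypothetical names; each stage is a plain callable so real models can be swapped for stubs.

```python
from dataclasses import dataclass
from typing import Callable

# Each stage is a plain callable so real models (or test stubs) can be plugged in.
Transcriber = Callable[[str], str]              # audio path -> transcript
Responder = Callable[[str], str]                # user text -> reply text
Synthesizer = Callable[[str, str, str], str]    # (text, reference wav, output path) -> output path


@dataclass
class VoicePipeline:
    """Hypothetical speech-to-speech loop: transcribe, answer, speak in a cloned voice."""
    transcribe: Transcriber
    respond: Responder
    synthesize: Synthesizer

    def run(self, audio_path: str, reference_wav: str, out_path: str) -> dict:
        text = self.transcribe(audio_path)                     # Whisper stage
        reply = self.respond(text)                             # Llama 8B stage
        wav = self.synthesize(reply, reference_wav, out_path)  # XTTS_v2 stage
        return {"transcript": text, "reply": reply, "audio": wav}


def whisper_transcriber(model_name: str = "base") -> Transcriber:
    import whisper  # openai-whisper package, imported lazily
    model = whisper.load_model(model_name)
    return lambda path: model.transcribe(path)["text"].strip()


def llama_responder(model_id: str = "meta-llama/Meta-Llama-3-8B-Instruct") -> Responder:
    # Assumption: the exact Llama 8B checkpoint; this model id is gated on Hugging Face.
    from transformers import pipeline
    generator = pipeline("text-generation", model=model_id)
    return lambda prompt: generator(
        prompt, max_new_tokens=128, return_full_text=False
    )[0]["generated_text"].strip()


def xtts_synthesizer(language: str = "en") -> Synthesizer:
    from TTS.api import TTS  # Coqui TTS package
    tts = TTS("tts_models/multilingual/multi-dataset/xtts_v2")

    def synth(text: str, reference_wav: str, out_path: str) -> str:
        # XTTS_v2 clones the voice heard in a short reference clip (zero-shot).
        tts.tts_to_file(text=text, speaker_wav=reference_wav,
                        language=language, file_path=out_path)
        return out_path

    return synth
```

With the packages installed, a run might look like `VoicePipeline(whisper_transcriber(), llama_responder(), xtts_synthesizer()).run("question.wav", "speaker.wav", "answer.wav")`, where `speaker.wav` is a short clip of the target voice. The model imports are deferred into the factories so the orchestration logic can be exercised without downloading any weights.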

References

A. van den Oord et al., “WaveNet: A Generative Model for Raw Audio,” arXiv:1609.03499, 2016, doi: 10.48550/arXiv.1609.03499.

E. Casanova et al., “XTTS: a Massively Multilingual Zero-Shot Text-to-Speech Model,” arXiv:2406.04904, 2024, doi: 10.48550/arXiv.2406.04904.

H. Touvron et al., “LLaMA: Open and Efficient Foundation Language Models,” arXiv:2302.13971, 2023, doi: 10.48550/arXiv.2302.13971.

Y. Wang et al., “Tacotron: Towards End-to-End Speech Synthesis,” Proc. Annu. Conf. Int. Speech Commun. Assoc. INTERSPEECH, vol. 2017-August, pp. 4006–4010, 2017, doi: 10.21437/INTERSPEECH.2017-1452.

Y. Ren et al., “FastSpeech 2: Fast and High-Quality End-to-End Text to Speech,” arXiv:2006.04558, 2020, doi: 10.48550/arXiv.2006.04558.

H. Ze, A. Senior, and M. Schuster, “Statistical parametric speech synthesis using deep neural networks,” ICASSP, IEEE Int. Conf. Acoust. Speech Signal Process. - Proc., pp. 7962–7966, Oct. 2013, doi: 10.1109/ICASSP.2013.6639215.

Y. Jia et al., “Transfer Learning from Speaker Verification to Multispeaker Text-To-Speech Synthesis,” Adv. Neural Inf. Process. Syst., vol. 31, pp. 4485–4495, 2018, doi: 10.48550/arXiv.1806.04558.

J. Latorre et al., “Effect of Data Reduction on Sequence-to-sequence Neural TTS,” ICASSP, IEEE Int. Conf. Acoust. Speech Signal Process. - Proc., vol. 2019-May, pp. 7075–7079, May 2019, doi: 10.1109/ICASSP.2019.8682168.

S. De et al., “Making Social Platforms Accessible: Emotion-Aware Speech Generation with Integrated Text Analysis,” 16th Int. Conf. Adv. Soc. Networks Anal. Min. (ASONAM 2024), 2024, doi: 10.48550/arXiv.2410.19199.

A. Radford et al., “Robust Speech Recognition via Large-Scale Weak Supervision,” arXiv:2212.04356, 2022, doi: 10.48550/arXiv.2212.04356.

Z. Cen et al., “Investigating the Impact of AI-Driven Voice Assistants on User Productivity and Satisfaction in Smart Homes,” J. Econ. Theory Bus. Manag., vol. 1, no. 6, pp. 8–14, 2024, doi: 10.70393/6a6574626d.323333.

S. Arik et al., “Deep Voice 2: Multi-Speaker Neural Text-to-Speech,” arXiv:1705.08947, 2017, doi: 10.48550/arXiv.1705.08947.

S. O. Arik et al., “Neural Voice Cloning with a Few Samples,” arXiv:1802.06006, 2018, doi: 10.48550/arXiv.1802.06006.

A. Vaswani et al., “Attention Is All You Need,” Adv. Neural Inf. Process. Syst., vol. 30, 2017.

H. B. Chandran, “Deep learning-based expressive speech synthesis,” EURASIP J. Audio, Speech, Music Process., 2024, doi: 10.1186/s13636-024-00329-7.

License

Copyright (c) 2025 50sea

This work is licensed under a Creative Commons Attribution 4.0 International License.