Non-Manual Gesture Recognition using Transfer Learning Approach

Keywords:

Face Expressions, Non-Manual Gestures, Pakistan Sign Language, Region of Interest, YOLO-Face

Abstract

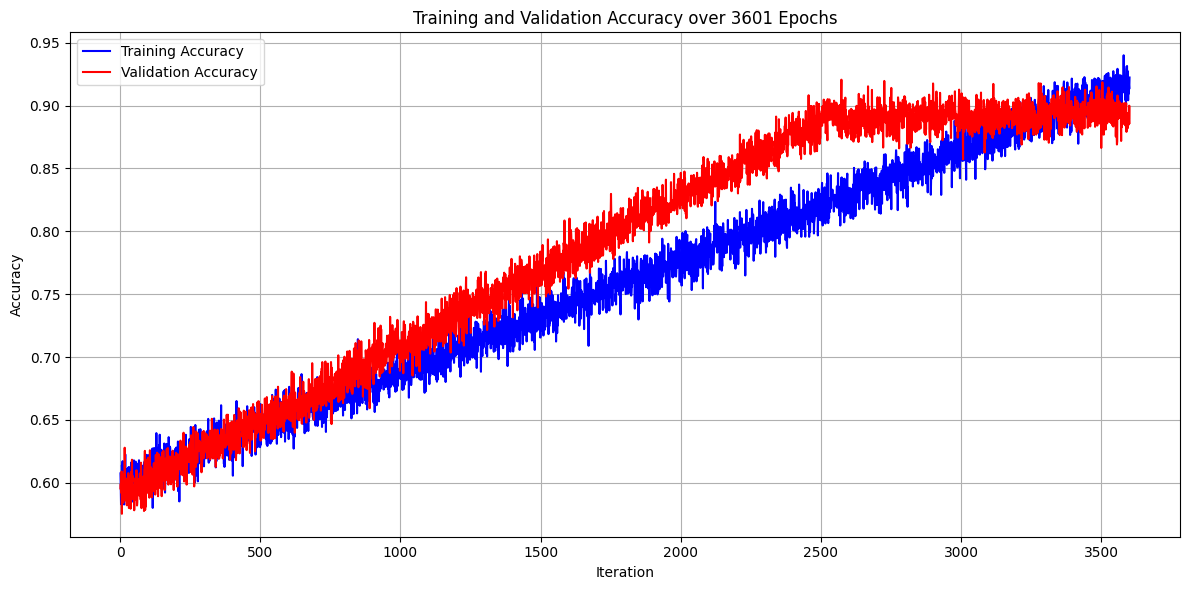

Individuals with limited hearing and speech rely on sign language as a fundamental nonverbal mode of communication. Sign language communicates primarily through hand signs, yet its expressive range extends beyond hand movements: body language and facial expressions are also needed to convey the full message. Although both manual (hand movements) and non-manual (facial expressions and body movements) gestures are important for communication, their joint study has not been substantially investigated, largely owing to a lack of comprehensive datasets. The current study presents a novel dataset that includes both manual and non-manual gestures in the context of Pakistan Sign Language (PSL). The dataset consists of MP4 videos covering seven distinct gestures that combine emotive facial expressions with accompanying hand signs, recorded by 100 participants. Beyond sign language recognition, the dataset supports other applications such as facial expression recognition, facial feature detection, and gender and age classification. In this study, we evaluated the dataset for facial expression assessment (non-manual gestures): the YOLO-Face detection method extracted faces as Regions of Interest (RoI) with 90.89% accuracy and an average loss of 0.34. We then applied Transfer Learning (TL) with the VGG16 architecture to classify seven basic facial expressions, achieving 100% accuracy. In summary, our study produced two datasets: one with manual and non-manual sign language gestures, and a second of Asian faces for recognizing seven basic facial expressions. On both datasets, our validation techniques produced promising results.
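The face-extraction step described in the abstract can be illustrated with a minimal sketch. It assumes the face detector has already returned bounding boxes as `(x, y, w, h, confidence)` tuples in pixels; that box format, the function name `crop_face_rois`, and the 0.5 confidence threshold are hypothetical choices for illustration, not the YOLO-Face interface or the authors' code. The sketch keeps confident detections, clips each box to the frame borders, and resizes the crop to 224 × 224 pixels, the default input size of VGG16, so the crops could be passed to a VGG16-based transfer-learning classifier.

```python
import numpy as np

# VGG16's default ImageNet input resolution is 224 x 224 pixels.
VGG16_INPUT_SIZE = 224


def crop_face_rois(frame, boxes, conf_threshold=0.5, size=VGG16_INPUT_SIZE):
    """Crop detected face boxes (RoIs) from one video frame and resize them.

    frame: H x W x 3 array holding one decoded video frame.
    boxes: iterable of (x, y, w, h, confidence) tuples in pixel units.
           This is a HYPOTHETICAL detector output format, not YOLO-Face's API.
    Returns an (N, size, size, 3) array of face crops.
    """
    height, width = frame.shape[:2]
    crops = []
    for x, y, w, h, conf in boxes:
        if conf < conf_threshold:
            continue  # drop low-confidence detections
        # Clip the box to the frame so the crop never indexes outside the image.
        x0, y0 = max(0, int(x)), max(0, int(y))
        x1, y1 = min(width, int(x + w)), min(height, int(y + h))
        if x1 <= x0 or y1 <= y0:
            continue  # box lies entirely outside the frame
        roi = frame[y0:y1, x0:x1]
        # Nearest-neighbour resize: map each output row/column to a source one.
        rows = np.arange(size) * roi.shape[0] // size
        cols = np.arange(size) * roi.shape[1] // size
        crops.append(roi[rows[:, None], cols[None, :]])
    if not crops:
        return np.empty((0, size, size, 3), dtype=frame.dtype)
    return np.stack(crops)


if __name__ == "__main__":
    frame = np.zeros((480, 640, 3), dtype=np.uint8)  # dummy 640 x 480 frame
    detections = [(100, 50, 120, 150, 0.9), (0, 0, 10, 10, 0.2)]
    faces = crop_face_rois(frame, detections)
    print(faces.shape)  # (1, 224, 224, 3): the 0.2-confidence box is dropped
```

In a full pipeline, the stacked crops would typically be normalized and fed to a VGG16 backbone with frozen convolutional layers and a new seven-way softmax head, one output per facial expression. Nearest-neighbour resizing is used here only to keep the sketch dependency-light; a library resize with interpolation would usually be preferred.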


License

Copyright (c) 2023 50SEA

This work is licensed under a Creative Commons Attribution 4.0 International License.