Salat Postures Detection Using a Hybrid Deep Learning Architecture

Keywords:

Salat Posture Recognition, Namaz Posture, MediaPipe, HCI, 3DCNN

Abstract

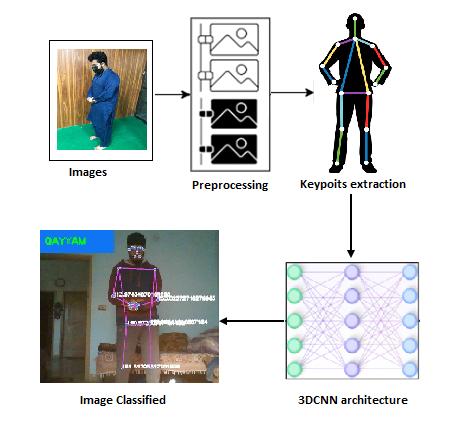

Salat, a fundamental act of worship in Islam, is performed five times daily. It entails a specific set of postures and offers both spiritual and physical benefits. Many people, notably novices and the elderly, may struggle to maintain proper posture and remember the sequence. Resources, instruction, and practice help address these issues, underscoring the importance of sincerity in prayer. Our contribution is two-fold: we developed a new dataset for Salat posture detection and a hybrid MediaPipe+3DCNN model. The dataset comprises 46 individuals performing each of the three compulsory Salat postures (Qayyam, Rukku, and Sajdah), and the model was trained and tested on 14,019 images. The proposed approach detects correct posture and can be used by people of all ages. As our methodology, we adopted the MediaPipe library design, which uses a multistep detector-tracker machine learning pipeline that proved effective in our research. Using a detector, the pipeline first locates the person's region of interest (ROI) within the frame. The tracker then predicts the pose landmarks and segmentation mask within the ROI, using the ROI-cropped frame as input. A 3D convolutional neural network (3DCNN) was then used for feature extraction and classification on the key points retrieved from the MediaPipe architecture. In real-time evaluation, the newly built model achieved 100% accuracy, a promising result. To validate the model, we analyzed evaluation metrics including loss, precision, recall, F1-score, and area under the curve (AUC); the reported values are 0.03, 1.00, 0.01, 0.99, 1.00, and 0.95.
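To make the evaluation metrics concrete, the following is a minimal sketch of how per-class precision, recall, and F1-score could be computed for the three postures. It is not the authors' evaluation code: the function names `per_class_metrics` and `macro_average`, the macro-averaging choice, and the example labels are illustrative assumptions.

```python
from collections import Counter

# Posture names as spelled in the abstract; treated here as class labels.
POSTURES = ("Qayyam", "Rukku", "Sajdah")


def per_class_metrics(y_true, y_pred, labels=POSTURES):
    """Return {label: (precision, recall, f1)} from paired label lists.

    Hypothetical helper for illustration only.
    """
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")

    # Count each (true label, predicted label) pair once.
    pairs = Counter(zip(y_true, y_pred))
    metrics = {}
    for label in labels:
        tp = pairs[(label, label)]
        fp = sum(n for (t, p), n in pairs.items() if p == label and t != label)
        fn = sum(n for (t, p), n in pairs.items() if t == label and p != label)

        # Guard against division by zero when a class is never predicted or absent.
        precision = tp / (tp + fp) if (tp + fp) else 0.0
        recall = tp / (tp + fn) if (tp + fn) else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        metrics[label] = (precision, recall, f1)
    return metrics


def macro_average(metrics):
    """Unweighted mean of (precision, recall, f1) across classes."""
    n = len(metrics)
    return tuple(sum(m[i] for m in metrics.values()) / n for i in range(3))


if __name__ == "__main__":
    # Made-up labels, not data from the study.
    y_true = ["Qayyam", "Qayyam", "Rukku", "Rukku", "Sajdah", "Sajdah"]
    y_pred = ["Qayyam", "Rukku", "Rukku", "Rukku", "Sajdah", "Sajdah"]
    for label, scores in per_class_metrics(y_true, y_pred).items():
        print(label, [round(s, 3) for s in scores])
```

Macro averaging weights each posture equally regardless of how many images it has; a weighted average would be the alternative if the classes are imbalanced.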

License

Copyright (c) 2023 50SEA

This work is licensed under a Creative Commons Attribution 4.0 International License.