Alex Net-Based Speech Emotion Recognition Using 3D Mel-Spectrograms

DOI:

https://doi.org/10.33411/ijist/202462426433

Keywords:

AlexNet, Convolution Neural Network, Mel-Spectrogram, Speech Emotion Recognition

Abstract

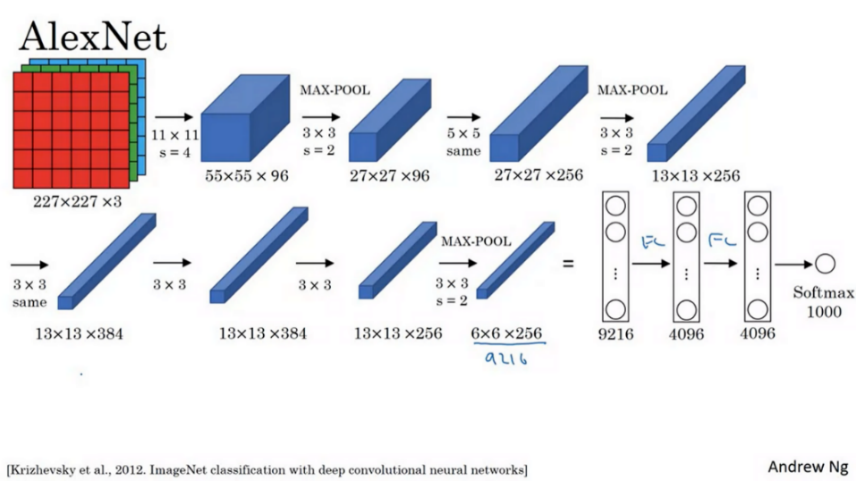

Speech Emotion Recognition (SER) is a challenging task in Human-Computer Interaction (HCI) because of the complex nature of audio signals. To address this challenge, we devised a novel method to fine-tune Convolutional Neural Networks (CNNs) for accurate recognition of emotion in speech. The method uses the spectrogram representation of audio signals as input to train a modified AlexNet model capable of processing signals of varying lengths. The IEMOCAP dataset was used to identify multiple emotional states, such as happy, sad, angry, and neutral, from speech. Each audio signal was preprocessed to extract a 3D spectrogram whose key features are time, frequency, and color-coded amplitude. The output of the modified AlexNet model is a 256-dimensional vector. The model achieved adequate accuracy, which indicates that CNNs combined with 3D Mel-Spectrograms are effective for precise and efficient speech emotion recognition and can support further advances in this domain.
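The preprocessing step described in the abstract can be illustrated with a minimal NumPy sketch. It is not the authors' pipeline: the abstract does not state the sampling rate, FFT size, hop length, number of mel bands, or color mapping, so every value below (16 kHz, `n_fft=512`, `hop=160`, `n_mels=64`) and the blue-to-red ramp in the hypothetical helper `to_color_image` are assumptions chosen only to show how a time × frequency × color array can be built from a waveform.

```python
import numpy as np

def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + f / 700.0)

def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)

def mel_filterbank(sr, n_fft, n_mels):
    """Triangular filters spaced evenly on the mel scale, shape (n_mels, n_fft // 2 + 1)."""
    mel_points = np.linspace(hz_to_mel(0.0), hz_to_mel(sr / 2.0), n_mels + 2)
    hz_points = mel_to_hz(mel_points)
    fft_freqs = np.linspace(0.0, sr / 2.0, n_fft // 2 + 1)
    fb = np.zeros((n_mels, fft_freqs.size))
    for i in range(n_mels):
        lo, center, hi = hz_points[i], hz_points[i + 1], hz_points[i + 2]
        rising = (fft_freqs - lo) / (center - lo)
        falling = (hi - fft_freqs) / (hi - center)
        fb[i] = np.maximum(0.0, np.minimum(rising, falling))
    return fb

def log_mel_spectrogram(signal, sr, n_fft=512, hop=160, n_mels=64):
    """Log-mel spectrogram of shape (n_mels, n_frames); assumes len(signal) >= n_fft."""
    n_frames = 1 + (len(signal) - n_fft) // hop
    window = np.hanning(n_fft)
    frames = np.stack([signal[t * hop : t * hop + n_fft] * window
                       for t in range(n_frames)])
    power = np.abs(np.fft.rfft(frames, axis=1)) ** 2       # (n_frames, n_fft // 2 + 1)
    mel = power @ mel_filterbank(sr, n_fft, n_mels).T      # (n_frames, n_mels)
    return np.log(mel + 1e-10).T                           # (n_mels, n_frames)

def to_color_image(log_mel):
    """Map amplitudes to three color channels, shape (n_mels, n_frames, 3), values in [0, 1].

    The blue-to-red ramp is an illustrative assumption, not the paper's colormap.
    """
    span = log_mel.max() - log_mel.min()
    x = (log_mel - log_mel.min()) / (span + 1e-10)
    red, green, blue = x, 1.0 - np.abs(2.0 * x - 1.0), 1.0 - x
    return np.stack([red, green, blue], axis=-1)

# Synthetic noise stands in for speech; real input would be IEMOCAP utterances.
sr = 16000
rng = np.random.default_rng(0)
short_clip = rng.standard_normal(sr)        # 1 second
long_clip = rng.standard_normal(2 * sr)     # 2 seconds
img_short = to_color_image(log_mel_spectrogram(short_clip, sr))
img_long = to_color_image(log_mel_spectrogram(long_clip, sr))
print(img_short.shape, img_long.shape)
```

The two clips produce arrays with the same frequency and color dimensions but different time dimensions, which is why a network that accepts inputs of varying length is useful for this kind of feature.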

License

Copyright (c) 2024 50SEA

This work is licensed under a Creative Commons Attribution 4.0 International License.