Deep Learning-Based Image Captioning for Visual Impairment Using a VGG16 and LSTM Approach

Keywords:

Image Captioning, Visually Impaired, Convolutional Neural Networks (CNN), Long Short-Term Memory (LSTM), Text-to-Speech, Bilingual Evaluation Understudy (BLEU) Score.Abstract

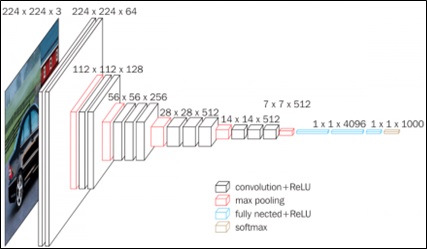

Visually impaired persons frequently have trouble understanding their environment. which affects regular tasks like reading signs, navigating their surroundings and recognizing things. Providing precise and timely image descriptions is crucial to improving their comprehension of their surroundings. Even if they work, traditional image captioning techniques frequently fail to provide clear, understandable explanations. Recent developments in deep learning present fresh chance to enhances to enhance picture captioning. In this sector, long short-Term Memory (LSTM) networks and Convolutional Neural Networks (CNNs) have become indispensable instruments. This research focuses on applications like VGG16 and Resnet models, data augmentation and transfer learning with a custom dataset to create such kind of captioning system providing original setup for precise context retrieval. Additionally, the system utilizes a text-to-speech functionality so users can listen to their responses if they are visually impaired. The highest accuracy obtained by the model is 0.9106 and validation loss was 0.1766. On randomly chosen set data experiments are conducted, there were significant differences between the BLEU scores we observed, ranging from 0.7788, to a perfect score of 0.1, indicating a diverse range of captions accuracy. This research shows how the adopted more sophisticated CNN models along with text-to-speech can improve image captioning systems by offering visually impaired detailed and meaningful descriptions.

References

R. Faurina, A. Jelita, A. Vatresia, and I. Agustian, “Image captioning to aid blind and visually impaired outdoor navigation,” IAES Int. J. Artif. Intell., vol. 12, no. 3, pp. 1104–1117, Sep. 2023, doi: 10.11591/ijai.v12.i3.pp1104-1117.

K. Pesudovs et al., “Global estimates on the number of people blind or visually impaired by cataract: a meta-analysis from 2000 to 2020,” Eye 2024 3811, vol. 38, no. 11, pp. 2156–2172, Mar. 2024, doi: 10.1038/s41433-024-02961-1.

S. K. West, G. S. Rubin, A. T. Broman, B. Muñoz, K. Bandeen-Roche, and K. Turano, “How Does Visual Impairment Affect Performance on Tasks of Everyday Life?: The SEE Project,” Arch. Ophthalmol., vol. 120, no. 6, pp. 774–780, Jun. 2002, doi: 10.1001/ARCHOPHT.120.6.774.

N. Awoke et al., “Visual impairment in Ethiopia: Systematic review and meta-analysis,” https://doi.org/10.1177/02646196221145358, vol. 42, no. 2, pp. 486–504, Dec. 2022, doi: 10.1177/02646196221145358.

M. Soori, B. Arezoo, and R. Dastres, “Artificial intelligence, machine learning and deep learning in advanced robotics, a review,” Cogn. Robot., vol. 3, pp. 54–70, Jan. 2023, doi: 10.1016/J.COGR.2023.04.001.

R. Ratheesh, S. R. Sri Rakshaga, A. Asan Fathima, S. Dhanusha, and A. K. Harini, “AI-Based Smart Visual Assistance System for Navigation, Guidance, and Monitoring of Visually Impaired People,” Proc. 9th Int. Conf. Sci. Technol. Eng. Math. Role Emerg. Technol. Digit. Transform. ICONSTEM 2024, 2024, doi: 10.1109/ICONSTEM60960.2024.10568710.

R. Gonzalez, J. Collins, C. Bennett, and S. Azenkot, “Investigating Use Cases of AI-Powered Scene Description Applications for Blind and Low Vision People,” Conf. Hum. Factors Comput. Syst. - Proc., May 2024, doi: 10.1145/3613904.3642211/SUPPL_FILE/PN8235-SUPPLEMENTAL-MATERIAL-1.XLSX.

R. M. Silva et al., “Vulnerable Road User Detection and Safety Enhancement: A Comprehensive Survey,” May 2024, Accessed: Oct. 24, 2024. [Online]. Available: https://arxiv.org/abs/2405.19202v3

R. N. Giri, R. R. Janghel, S. K. Pandey, H. Govil, and A. Sinha, “Enhanced Hyperspectral Image Classification Through Pretrained CNN Model for Robust Spatial Feature Extraction,” J. Opt., vol. 53, no. 3, pp. 2287–2300, Jul. 2024, doi: 10.1007/S12596-023-01473-7/METRICS.

O. Vinyals, A. Toshev, S. Bengio, and D. Erhan, “Show and tell: A neural image caption generator,” Proc. IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recognit., vol. 07-12-June-2015, pp. 3156–3164, Oct. 2015, doi: 10.1109/CVPR.2015.7298935.

D. K. Kumar, A., Nagar, “AI-Based Language Translation and Interpretation Services: Improving Accessibility for Visually Impaired Students,” As Ed. Transform. Learn. Power Educ., 2024.

N. Thakur, E. Bhattacharjee, R. Jain, B. Acharya, and Y. C. Hu, “Deep learning-based parking occupancy detection framework using ResNet and VGG-16,” Multimed. Tools Appl., vol. 83, no. 1, pp. 1941–1964, Jan. 2024, doi: 10.1007/S11042-023-15654-W/METRICS.

J. H. Huang, H. Zhu, Y. Shen, S. Rudinac, A. M. Pacces, and E. Kanoulas, “A Novel Evaluation Framework for Image2Text Generation,” CEUR Workshop Proc., vol. 3752, pp. 51–65, Aug. 2024, Accessed: Oct. 24, 2024. [Online]. Available: https://arxiv.org/abs/2408.01723v1

V. Gorokhovatskyi, I. Tvoroshenko, and O. Yakovleva, “Transforming image descriptions as a set of descriptors to construct classification features,” Indones. J. Electr. Eng. Comput. Sci., vol. 33, no. 1, pp. 113–125, Jan. 2024, doi: 10.11591/ijeecs.v33.i1.pp113-125.

J. R. B. Da Silva, J. V. B. Soares, L. R. Gomes, and J. R. Sicchar, “An approach to the use of stereo vision system and AI for the accessibility of the visually impaired,” 2024 Int. Conf. Control. Autom. Diagnosis, ICCAD 2024, 2024, doi: 10.1109/ICCAD60883.2024.10553945.

T. Nandhini, P. Kalyanasundaram, R. M. Vasanth, S. H. Raj, and K. S. Keerthi, “Deep Learning Enabled Novel Blind Assistance System for Enhanced Accessibility,” Proc. - 2024 4th Int. Conf. Pervasive Comput. Soc. Networking, ICPCSN 2024, pp. 153–158, 2024, doi: 10.1109/ICPCSN62568.2024.00034.

A. Bhattacharyya, M. Palmer, and C. Heckman, “ReCAP: Semantic Role Enhanced Caption Generation.” pp. 13633–13649, 2024. Accessed: Oct. 24, 2024. [Online]. Available: https://aclanthology.org/2024.lrec-main.1191

W. Zeng and W. Zeng, “Image data augmentation techniques based on deep learning: A survey,” Math. Biosci. Eng. 2024 66190, vol. 21, no. 6, pp. 6190–6224, 2024, doi: 10.3934/MBE.2024272.

Z. Wen and L. Guo, “Efficient Higher-order Convolution for Small Kernels in Deep Learning,” Apr. 2024, Accessed: Oct. 24, 2024. [Online]. Available: https://arxiv.org/abs/2404.16380v1

O. Kolovou, “MACHINE TRANSLATION FROM ANCIENT GREEK TO ENGLISH: EXPERIMENTS WITH OPENNMT,” Jun. 2024, Accessed: Oct. 24, 2024. [Online]. Available: https://gupea.ub.gu.se/handle/2077/81765

B. Pydala, M. K. Reddy, T. Swetha, V. Ramavath, P. Siddartha, and V. S. Kumar, “A Smart Stick for Visually Impaired Individuals through AIoT Integration with Power Enhancement,” pp. 409–419, Jul. 2024, doi: 10.2991/978-94-6463-471-6_40.

N. M. Upadhyay, A. P. Singh, and A. Perti, “eyeRoad – An App that Helps Visually Impaired Peoples,” SSRN Electron. J., May 2024, doi: 10.2139/SSRN.4825671.

B. Kuriakose, R. Shrestha, and F. E. Sandnes, “DeepNAVI: A deep learning based smartphone navigation assistant for people with visual impairments,” Expert Syst. Appl., vol. 212, p. 118720, Feb. 2023, doi: 10.1016/J.ESWA.2022.118720.

S. Kumar et al., “Artificial Intelligence Solutions for the Visually Impaired: A Review,” https://services.igi-global.com/resolvedoi/resolve.aspx?doi=10.4018/978-1-6684-6519-6.ch013, pp. 198–207, Jan. 1AD, doi: 10.4018/978-1-6684-6519-6.CH013.

A. Ajina, R. Lochan, M. Saha, R. B. K. Showghi, and S. Harini, “Vision beyond Sight: An AI-Assisted Navigation System in Indoor Environments for the Visually Impaired,” Int. Conf. Emerg. Technol. Comput. Sci. Interdiscip. Appl. ICETCS 2024, 2024, doi: 10.1109/ICETCS61022.2024.10543550.

J. M. Parenreng, A. B. Kaswar, and I. F. Syahputra, “Visual Impaired Assistance for Object and Distance Detection Using Convolutional Neural Networks,” J. RESTI (Rekayasa Sist. dan Teknol. Informasi), vol. 8, no. 1, pp. 26–32, Jan. 2024, doi: 10.29207/RESTI.V8I1.5491.

“BLIND VISION-USING AI.” Accessed: Oct. 24, 2024. [Online]. Available: https://www.researchgate.net/publication/378304881_BLIND_VISION-USING_AI

C. Xie, Z. Zhang, Y. Wu, F. Zhu, R. Zhao, and S. Liang, “Described Object Detection: Liberating Object Detection with Flexible Expressions,” Adv. Neural Inf. Process. Syst., vol. 36, Jul. 2023, Accessed: Oct. 24, 2024. [Online]. Available: https://arxiv.org/abs/2307.12813v2

“VGG16 - Convolutional Network for Classification and Detection.” Accessed: Oct. 24, 2024. [Online]. Available: https://neurohive.io/en/popular-networks/vgg16/

“The Annotated ResNet-50. Explaining how ResNet-50 works and why… | by Suvaditya Mukherjee | Towards Data Science.” Accessed: Oct. 24, 2024. [Online]. Available: https://towardsdatascience.com/the-annotated-resnet-50-a6c536034758

“Image Captioning for Visually Impaired people.” Accessed: Oct. 24, 2024. [Online]. Available: https://www.kaggle.com/datasets/aishrules25/automatic-image-captioning-for-visually-impaired/data

Published

How to Cite

Issue

Section

License

Copyright (c) 2024 50sea

This work is licensed under a Creative Commons Attribution 4.0 International License.