An Enhanced Similarity Measure–Driven K-Nearest Neighbor Framework for Categorical Data Classification

Keywords:

Machine Learning, Classification, KNN, DKNN, OKNN, SMKNN

Abstract

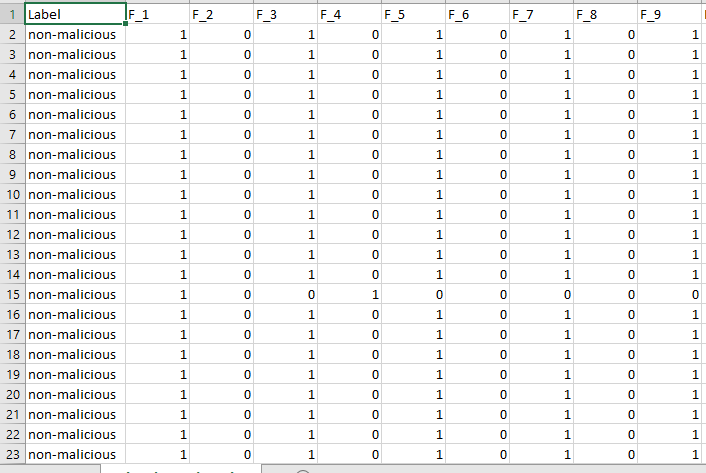

Machine learning offers effective solutions to real-world classification problems through supervised approaches (e.g., regression, SVMs, decision trees, neural networks) and unsupervised techniques (e.g., clustering, PCA). Compared with numerical data, however, categorical data remain understudied. This study compares three variants of the K-Nearest Neighbors (KNN) algorithm, namely Dice Coefficient KNN (DKNN), Overlap Coefficient KNN (OKNN), and Simple Matching Coefficient KNN (SMKNN), on three categorical datasets: Malware Detection, Hospital Readmission (Kaggle), and Mushroom (UCI Repository). Each variant aims to improve classification performance by using a different similarity measure. The models were evaluated using accuracy, precision, recall, and F1-score. In the experiments, SMKNN consistently outperformed the other variants, achieving an average accuracy of 88.29%, precision of 89.33%, recall of 98%, and F1-score of 93.3%. OKNN ranked second, with an average accuracy of 83.89% and an F1-score of 91%, while DKNN performed worst, with an accuracy of 73.74%. These results indicate that SMKNN is a stable and dependable model for categorical data classification that performs well across datasets from different domains. The study offers practical guidance for selecting KNN variants for data-driven applications.
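The abstract names the three similarity coefficients but not how they are applied to categorical records. As a minimal sketch, assuming each record has already been encoded as a binary (0/1) vector (for example by one-hot encoding), the textbook Simple Matching, Dice, and Overlap coefficients can be plugged into a plain majority-vote KNN as shown here, together with the four evaluation metrics. The function names (`simple_matching`, `dice`, `overlap`, `knn_predict`, `binary_metrics`), the zero-denominator convention, and the toy data are illustrative assumptions, not the authors' implementation.

```python
from collections import Counter
from typing import Callable, Dict, Sequence

# Assumption: each categorical record is already encoded as a 0/1 vector.
Vector = Sequence[int]
Similarity = Callable[[Vector, Vector], float]


def simple_matching(a: Vector, b: Vector) -> float:
    """Simple Matching Coefficient: share of positions where a and b agree (1-1 or 0-0)."""
    return sum(x == y for x, y in zip(a, b)) / len(a)


def dice(a: Vector, b: Vector) -> float:
    """Dice coefficient: 2|A ∩ B| / (|A| + |B|), with A, B the positions set to 1."""
    shared = sum(x & y for x, y in zip(a, b))
    total = sum(a) + sum(b)
    return 2 * shared / total if total else 0.0  # assumed convention for two all-zero vectors


def overlap(a: Vector, b: Vector) -> float:
    """Overlap coefficient: |A ∩ B| / min(|A|, |B|)."""
    shared = sum(x & y for x, y in zip(a, b))
    smaller = min(sum(a), sum(b))
    return shared / smaller if smaller else 0.0  # assumed convention when either set is empty


def knn_predict(train_X: Sequence[Vector], train_y: Sequence[str],
                query: Vector, k: int, similarity: Similarity) -> str:
    """Majority vote among the k training records most similar to the query."""
    # Higher similarity = closer neighbour; sorted() stays stable with reverse=True,
    # so records with equal similarity keep their training order.
    ranked = sorted(range(len(train_X)),
                    key=lambda i: similarity(train_X[i], query),
                    reverse=True)
    votes = Counter(train_y[i] for i in ranked[:k])
    return votes.most_common(1)[0][0]


def binary_metrics(y_true: Sequence[str], y_pred: Sequence[str],
                   positive: str) -> Dict[str, float]:
    """Accuracy, precision, recall and F1 with respect to one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Toy usage: "p" = poisonous, "e" = edible (hypothetical labels and features).
train_X = [[1, 1, 0, 0, 1], [1, 0, 0, 0, 1], [0, 0, 1, 1, 0], [0, 1, 1, 1, 0]]
train_y = ["p", "p", "e", "e"]
query = [1, 1, 0, 0, 0]
for name, sim in [("SMKNN", simple_matching), ("DKNN", dice), ("OKNN", overlap)]:
    print(name, knn_predict(train_X, train_y, query, k=3, similarity=sim))
# prints "SMKNN p", "DKNN p", "OKNN p" on separate lines
```

With complete one-hot encoding every record has the same number of 1s, so Dice and Overlap give identical scores; they diverge only when records have different numbers of active features, as with binary presence/absence attributes. Simple Matching, by contrast, also counts shared 0s as agreement.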

License

Copyright (c) 2025 50sea

This work is licensed under a Creative Commons Attribution 4.0 International License.