Contrasting Impact of Start State on Performance of a Reinforcement Learning Recommender System

Keywords:

Recommender Systems, Reinforcement Learning, Collaborative Filtering, Similarity Measures, Start State, Q-Learning.

Abstract

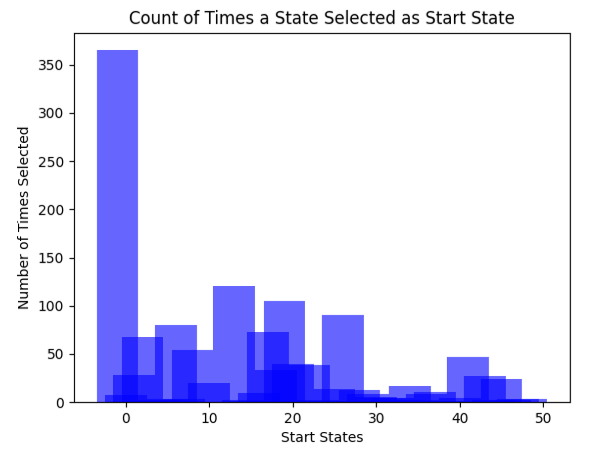

Recommendation problems and reinforcement learning (RL) problems are closely related, as both aim to increase user satisfaction within a given environment. Typical recommender systems rely mainly on a user's history to generate future recommendations and do not adapt well to changing user demands. RL can adapt to such changes by using a reward function as feedback. In this paper, the recommendation problem is modeled as an RL problem using a square grid environment, in which each grid cell represents a unique state generated by the biclustering algorithm Bibit. The biclusters are sorted according to their overlap and then mapped to the square grid. An RL agent then moves on this grid to obtain recommendations. However, the agent must first decide on the most pertinent start state, i.e. the one that yields the best recommendations. Making this decision requires contrasting the impact of different start states on the performance of RL agent-based recommender systems (RSs). For this purpose, we applied seven different similarity measures to determine the start state of the RL agent. These measures are diverse: some do not use rating values, some use only rating values, and some use global parameters such as the average rating or the standard deviation of ratings. Evaluation is performed on the ML-100K and FilmTrust datasets under different environment settings. The results show that careful selection of the start state can greatly improve the performance of RL-based recommender systems.
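
The abstract does not spell out how the start state is computed, so the following is only a minimal Python sketch under stated assumptions. It assumes each grid cell is summarized by the items of its bicluster with one (e.g. mean) rating per item, orders the cells with a hypothetical greedy overlap chain before filling a square grid row by row, and takes as start state the cell most similar to the target user. Jaccard (ignores rating values), cosine on co-rated items (uses only rating values) and Pearson correlation centred on each profile's mean rating (uses a global parameter) illustrate the three families of measures the abstract describes; they are not claimed to be the paper's seven measures, and all function names and the toy data are hypothetical.

```python
import math
from typing import Callable, Dict, List, Tuple

Ratings = Dict[int, float]              # item id -> rating
Grid = Dict[Tuple[int, int], Ratings]   # (row, col) -> cell profile


def jaccard(user: Ratings, cell: Ratings) -> float:
    """Set overlap of rated items; ignores the rating values entirely."""
    a, b = set(user), set(cell)
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def cosine(user: Ratings, cell: Ratings) -> float:
    """Cosine of the raw rating values, restricted to co-rated items."""
    common = set(user) & set(cell)
    if not common:
        return 0.0
    dot = sum(user[i] * cell[i] for i in common)
    norm_u = math.sqrt(sum(user[i] ** 2 for i in common))
    norm_c = math.sqrt(sum(cell[i] ** 2 for i in common))
    return dot / (norm_u * norm_c) if norm_u and norm_c else 0.0


def pearson(user: Ratings, cell: Ratings) -> float:
    """Pearson correlation on co-rated items, centred on each profile's mean
    rating over all of its items (a global, per-profile parameter)."""
    common = set(user) & set(cell)
    if len(common) < 2:
        return 0.0
    mu_u = sum(user.values()) / len(user)
    mu_c = sum(cell.values()) / len(cell)
    num = sum((user[i] - mu_u) * (cell[i] - mu_c) for i in common)
    den_u = math.sqrt(sum((user[i] - mu_u) ** 2 for i in common))
    den_c = math.sqrt(sum((cell[i] - mu_c) ** 2 for i in common))
    return num / (den_u * den_c) if den_u and den_c else 0.0


def order_by_overlap(cells: List[Ratings]) -> List[Ratings]:
    """Hypothetical greedy chain: each next cell is the remaining one that
    shares the most items with the cell placed just before it."""
    if not cells:
        return []
    ordered, remaining = [cells[0]], list(cells[1:])
    while remaining:
        last = set(ordered[-1])
        k = max(range(len(remaining)), key=lambda j: len(last & set(remaining[j])))
        ordered.append(remaining.pop(k))
    return ordered


def to_grid(cells: List[Ratings]) -> Grid:
    """Fill a side x side grid row by row, with side = ceil(sqrt(n))."""
    side = math.ceil(math.sqrt(len(cells)))
    return {(k // side, k % side): cell for k, cell in enumerate(cells)}


def start_state(user: Ratings, grid: Grid,
                measure: Callable[[Ratings, Ratings], float]) -> Tuple[int, int]:
    """Start cell = most similar cell; ties go to the first cell in row-major order."""
    return max(grid, key=lambda pos: measure(user, grid[pos]))


# Hypothetical toy data: one target user and four bicluster profiles.
USER: Ratings = {1: 5.0, 2: 4.0, 3: 1.0}
CELLS: List[Ratings] = [
    {1: 1.0, 2: 2.0, 3: 5.0},          # same items, opposite taste
    {1: 5.0, 2: 4.0, 7: 2.0, 8: 3.0},  # partial overlap, similar taste
    {4: 3.0},
    {5: 2.0, 6: 4.0},
]

if __name__ == "__main__":
    grid = to_grid(order_by_overlap(CELLS))
    for name, measure in [("jaccard", jaccard), ("cosine", cosine), ("pearson", pearson)]:
        # prints: jaccard (0, 0), then cosine (0, 1), then pearson (0, 1)
        print(name, start_state(USER, grid, measure))
```

On this toy data the set-based measure picks a different start cell than the two rating-based measures: Jaccard favours the cell with exactly the user's items even though its ratings run opposite to the user's, while cosine and Pearson favour the partially overlapping cell with similar ratings. This is the kind of contrast between start states that the study evaluates.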

License

Copyright (c) 2024 50SEA

This work is licensed under a Creative Commons Attribution 4.0 International License.